20 Inference about Categorical Data

In the previous chapter, we discussed the one-sample and two-sample proportion tests. The one-sample test checks whether the proportion of some category is equal to, greater than, or less than a specified value. The two-sample test checks whether the proportions of the same category in two groups are equal, or which one is larger. Both tests start from the binomial distribution, where the categorical variable being studied has only two possible categories.

In daily life, many categorical variables have more than two categories, for example, car brands and eye colors. In this chapter, we learn two specific tests, the goodness-of-fit test and the test of independence, which handle variables with more than two categories and their associated proportions.

Categorical Variable with More Than 2 Categories

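Suppose a categorical variable has $k$ categories, $C_1, C_2, \dots, C_k$, and we observe it on $n$ subjects. Each subject falls into exactly one category, which we record by marking an x in the corresponding column:

| Subject | $C_1$ | $C_2$ | $\cdots$ | $C_k$ |
|---|---|---|---|---|
| 1 | x | | | |
| 2 | | x | | |
| $\vdots$ | | | | |
| $n$ | | | | x |

For example, if the variable is eye color with $C_1$ = black and $C_2$ = brown, the first subject has black eyes, the second one has brown eyes, and so on.

With the sample size $n$, we summarize the data by counting the number of subjects in each category:

| | $C_1$ | $C_2$ | $\cdots$ | $C_k$ | Total |
|---|---|---|---|---|---|
| Count | $O_1$ | $O_2$ | $\cdots$ | $O_k$ | $n$ |

where $O_1 + O_2 + \cdots + O_k = n$ and $O_i / n$ is the sample proportion of category $C_i$.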

For example, if we have

Of course, the sample proportions are not the true distribution of eye colors. One goal is to test whether the observed distribution follows some hypothesized distribution, for example, the eye color distribution of all Americans. We may also want to know whether the distributions in two groups are about the same, for example, the eye color distributions of male and female students.

- The category counts of a categorical variable with more than two categories follow a multinomial distribution. The binomial distribution is the special case of the multinomial distribution with $k = 2$.

- Here we use
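To make the special-case relationship concrete, here is a minimal Python sketch of the multinomial probability mass function $P(O_1 = x_1, \dots, O_k = x_k) = \frac{n!}{x_1! \cdots x_k!} \pi_1^{x_1} \cdots \pi_k^{x_k}$ using only the standard library. The helper names multinomial_pmf and binomial_pmf are hypothetical, chosen for illustration. With $k = 2$ categories, the multinomial probability equals the binomial one.

```python
from math import comb, factorial, prod, isclose

def multinomial_pmf(counts, probs):
    """P(O_1 = x_1, ..., O_k = x_k) for a multinomial with n = sum(counts)."""
    n = sum(counts)
    # Multinomial coefficient n! / (x_1! ... x_k!)
    coef = factorial(n) // prod(factorial(x) for x in counts)
    return coef * prod(p ** x for p, x in zip(probs, counts))

def binomial_pmf(x, n, p):
    """P(X = x) for X ~ Binomial(n, p)."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

# k = 2: 7 "successes" and 3 "failures" out of n = 10, success probability 0.3
print(multinomial_pmf([7, 3], [0.3, 0.7]))
print(binomial_pmf(7, 10, 0.3))
print(isclose(multinomial_pmf([7, 3], [0.3, 0.7]), binomial_pmf(7, 10, 0.3)))  # True
```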

20.1 Test of Goodness of Fit

A citizen in Wauwatosa, WI is curious about the question:

Are the selected jurors racially representative of the population?

The idea is that if the jurors are representative of the population, then once we collect a sample of jurors, the sample proportions should reflect the proportions of the population of eligible jurors (i.e., registered voters).

Suppose we learn the racial distribution of registered voters from the government. We then collect racial information on 275 jurors and check whether the racial distribution of the sample is consistent with the racial distribution of registered voters. The counts are summarized in the table below.

| | White | Black | Hispanic | Asian | Total |
|---|---|---|---|---|---|
| Representation in juries | 205 | 26 | 25 | 19 | 275 |
| Registered voters | 0.72 | 0.07 | 0.12 | 0.09 | 1.00 |

The first thing we can do is convert the counts into proportions, or relative frequencies, so that the sample proportions and the target proportions can be easily paired and compared.

| | White | Black | Hispanic | Asian | Total |
|---|---|---|---|---|---|
| Representation in juries | 0.745 | 0.095 | 0.091 | 0.069 | 1.00 |
| Registered voters | 0.72 | 0.07 | 0.12 | 0.09 | 1.00 |
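A minimal Python sketch of this conversion, using the jury counts from the first table: each count is divided by the total number of jurors, $n = 275$, and rounded to three decimals for display.

```python
# Jury counts from the table of observed representation
jury_counts = {"White": 205, "Black": 26, "Hispanic": 25, "Asian": 19}
n = sum(jury_counts.values())  # total number of jurors, 275

# Relative frequency = count / total, rounded to 3 decimals for display
jury_props = {race: round(count / n, 3) for race, count in jury_counts.items()}
print(n, jury_props)
# 275 {'White': 0.745, 'Black': 0.095, 'Hispanic': 0.091, 'Asian': 0.069}
```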

While the proportions in the juries do not precisely represent the population proportions, it is unclear whether these data provide convincing evidence that the sample is not representative. If the jurors really were randomly sampled from the registered voters, we might expect small differences due to chance. However, unusually large differences may provide convincing evidence that the juries were not representative. Specifically, we want a test to answer the question

Are the jury proportions close enough to the proportions of registered voters that we are comfortable saying the jurors really were randomly sampled from the registered voters?

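As in the binomial case, where the count of one category follows a binomial distribution, the counts of the multiple categories (here, the four racial groups) follow a multinomial distribution.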

The test we need is the goodness-of-fit test.

Goodness-of-Fit Test

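A goodness-of-fit test tests the hypothesis that the observed frequency distribution fits, or conforms to, some claimed distribution. In the jury example, our observed (relative) frequency distribution is (0.745, 0.095, 0.091, 0.069), and the claimed distribution is the registered-voter distribution (0.72, 0.07, 0.12, 0.09).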

Here is the question: “If individuals are randomly selected to serve on a jury, about how many of the 275 people would we expect to be white? How about black?” We ask this question because we want to know what the jury distribution would look like if it did follow the distribution of the registered voters. If what we expect to see is far from what we observe, then the jury was probably not randomly sampled from the registered voters. This matches our testing rationale: we do the test under the null hypothesis, the scenario in which the observed frequency distribution conforms to the claimed distribution.

| | White | Black | Hispanic | Asian |
|---|---|---|---|---|
| Registered voters | 0.72 | 0.07 | 0.12 | 0.09 |

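According to the claimed distribution, among 275 randomly selected jurors we would expect $275 \times 0.72 = 198$ white, $275 \times 0.07 = 19.25$ black, $275 \times 0.12 = 33$ Hispanic, and $275 \times 0.09 = 24.75$ Asian jurors.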

| | White | Black | Hispanic | Asian | Total |
|---|---|---|---|---|---|
| Observed Count | 205 | 26 | 25 | 19 | 275 |
| Expected Count | 198 | 19.25 | 33 | 24.75 | 275 |
| Population Proportion | 0.72 | 0.07 | 0.12 | 0.09 | 1.00 |
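The expected counts come from multiplying the sample size, 275, by each hypothesized proportion. A minimal Python sketch, which also checks the common rule of thumb that every expected count be at least five:

```python
observed = [205, 26, 25, 19]     # White, Black, Hispanic, Asian
pi_0 = [0.72, 0.07, 0.12, 0.09]  # registered-voter proportions
n = sum(observed)                # 275 jurors

# Expected count = n * hypothesized proportion for each category
expected = [n * p for p in pi_0]
print([round(e, 2) for e in expected])  # [198.0, 19.25, 33.0, 24.75]

# Rule of thumb: every expected count should be at least 5
print(all(e >= 5 for e in expected))    # True
```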

While some sampling variation is expected, the observed and expected counts should be similar if there were no bias in selecting the members of the jury. But how similar is similar enough? We want to test whether the differences are large enough to provide convincing evidence that the jurors were not randomly sampled from all registered voters.
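One way to build intuition for "how similar is similar enough" is a Monte Carlo simulation. This is not the procedure developed in this chapter, but it approximates the same idea: draw many random juries of 275 from the registered-voter distribution with NumPy's Generator.multinomial, measure each jury's distance from the expected counts by $\sum_i (O_i - E_i)^2 / E_i$, and see how often a distance at least as large as the observed one happens by chance. The seed and the number of simulations are arbitrary choices for this sketch.

```python
import numpy as np

observed = np.array([205, 26, 25, 19])      # White, Black, Hispanic, Asian
pi_0 = np.array([0.72, 0.07, 0.12, 0.09])   # registered-voter proportions
n = observed.sum()
expected = n * pi_0

def distance(counts):
    # Squared differences from the expected counts, scaled by the expected counts
    return np.sum((counts - expected) ** 2 / expected, axis=-1)

rng = np.random.default_rng(2024)              # arbitrary seed
n_sims = 20_000                                # arbitrary number of simulated juries
sims = rng.multinomial(n, pi_0, size=n_sims)   # shape (n_sims, 4), each row sums to 275

d_obs = distance(observed)
d_sim = distance(sims)
# Fraction of random juries at least as far from the expected counts as ours
mc_pvalue = np.mean(d_sim >= d_obs)
print(round(float(d_obs), 4), mc_pvalue)
```

The simulated fraction should land near the chi-squared p-value reported by the software later in this section.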

Goodness-of-Fit Test Example

Before we introduce the test procedure, note that for the goodness-of-fit test to perform well, each expected count should be at least five; the larger the expected counts, the better.

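In words, our hypotheses are

$H_0$: the racial distribution of juries conforms to the racial distribution of registered voters, vs. $H_1$: it does not.

If the true racial distribution of juries is

- White: $\pi_1$

- Black: $\pi_2$

- Hispanic: $\pi_3$

- Asian: $\pi_4$

we want to know if the distribution conforms to the claimed racial distribution of registered voters

- White: $\pi_{10} = 0.72$

- Black: $\pi_{20} = 0.07$

- Hispanic: $\pi_{30} = 0.12$

- Asian: $\pi_{40} = 0.09$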

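In general, with $k$ categories, we can rewrite our hypotheses in mathematical notation:

$$H_0: \pi_1 = \pi_{10}, \ \pi_2 = \pi_{20}, \ \dots, \ \pi_k = \pi_{k0} \quad \text{vs.} \quad H_1: \pi_i \ne \pi_{i0} \text{ for some } i.$$

Note that $H_1$ only requires at least one proportion to differ from its hypothesized value, not all of them.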

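The test statistic is

$$\chi^2_{test} = \sum_{i=1}^{k} \frac{(O_i - E_i)^2}{E_i},$$

where $O_i$ is the observed count of category $i$ and $E_i = n\pi_{i0}$ is its expected count under $H_0$, with $\pi_{i0}$ the hypothesized proportion of category $i$. Under $H_0$, this chi-squared statistic approximately follows the chi-squared distribution with $k - 1$ degrees of freedom.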

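The goodness-of-fit test is a chi-squared test that is always right-tailed: the larger the differences between the observed and expected counts, the larger $\chi^2_{test}$. So we reject $H_0$ if $\chi^2_{test} > \chi^2_{\alpha, k-1}$, the upper $\alpha$ critical value of the chi-squared distribution with $k - 1$ degrees of freedom, or equivalently if the p-value $P(\chi^2_{k-1} > \chi^2_{test})$ is less than $\alpha$.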
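The procedure can be sketched as a small Python function that computes $\chi^2_{test}$ from the observed counts and hypothesized proportions, then uses scipy.stats.chi2 for the critical value (ppf) and the right-tail p-value (sf). The name gof_test and the default $\alpha = 0.05$ are hypothetical choices for illustration; scipy.stats.chisquare() computes the same statistic and p-value.

```python
from scipy.stats import chi2

def gof_test(observed, pi_0, alpha=0.05):
    """Chi-squared goodness-of-fit test (hypothetical helper for illustration)."""
    n = sum(observed)
    k = len(observed)
    expected = [n * p for p in pi_0]                  # E_i = n * pi_i0
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    df = k - 1
    crit = chi2.ppf(1 - alpha, df)                    # upper-alpha critical value
    p_value = chi2.sf(stat, df)                       # P(chi2_df > stat)
    return stat, df, crit, p_value, stat > crit       # last item: reject H0?

# Jury example
stat, df, crit, p_value, reject = gof_test([205, 26, 25, 19], [0.72, 0.07, 0.12, 0.09])
print(round(stat, 4), df, round(crit, 3), round(p_value, 4), reject)
# 5.8896 3 7.815 0.1171 False
```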

Back to our example.

| | White | Black | Hispanic | Asian |
|---|---|---|---|---|
| Observed Count | 205 | 26 | 25 | 19 |
| Expected Count | 198 | 19.25 | 33 | 24.75 |
| Proportion under $H_0$ | 0.72 | 0.07 | 0.12 | 0.09 |

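Under $H_0$, the test statistic is

$$\chi^2_{test} = \frac{(205-198)^2}{198} + \frac{(26-19.25)^2}{19.25} + \frac{(25-33)^2}{33} + \frac{(19-24.75)^2}{24.75} = 5.89.$$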

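With $\alpha = 0.05$ and $k - 1 = 3$ degrees of freedom, the critical value is $\chi^2_{0.05, 3} = 7.815$. Since $5.89 < 7.815$, we do not reject $H_0$: the data do not provide convincing evidence that the jurors were not randomly sampled from the registered voters.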

Below is an example of how to perform a goodness-of-fit test in R. Because the goodness-of-fit test is a chi-squared test, we use the function chisq.test(). The argument x is the observed count vector of length $k$, and the argument p is the hypothesized proportion distribution, a vector of probabilities of the same length as x. The function does not provide the critical value, but it gives us the p-value.

obs <- c(205, 26, 25, 19)

pi_0 <- c(0.72, 0.07, 0.12, 0.09)

## Use chisq.test() function

chisq.test(x = obs, p = pi_0)

Chi-squared test for given probabilities

data: obs

X-squared = 5.8896, df = 3, p-value = 0.1171

The chi-squared test can also be used for the two-sided one-sample proportion test. Suppose we are doing the following test:

$$H_0: \pi = \pi_0 \quad \text{vs.} \quad H_1: \pi \ne \pi_0$$

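Because there are only two categories, we automatically know the proportion of the other category, $1 - \pi$, once $\pi$ is given. So this is a goodness-of-fit test with $k = 2$ categories and hypothesized proportions $(\pi_0, 1 - \pi_0)$, and the chi-squared statistic has $k - 1 = 1$ degree of freedom.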

Suppose $\pi_0 = 0.5$, and we observe 520 successes and 480 failures in $n = 1000$ trials.

chisq.test(x = c(520, 480), p = c(0.5, 0.5))

Chi-squared test for given probabilities

data: c(520, 480)

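X-squared = 1.6, df = 1, p-value = 0.2059

In the language of the one-sample proportion test, we have 520 successes in $n = 1000$ trials, so $\hat{\pi} = 0.52$, and we test $H_0: \pi = 0.5$ against a two-sided alternative: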

prop.test(x = 520, n = 1000, p = 0.5, alternative = "two.sided",

correct = FALSE)

1-sample proportions test without continuity correction

data: 520 out of 1000, null probability 0.5

X-squared = 1.6, df = 1, p-value = 0.2059

alternative hypothesis: true p is not equal to 0.5

95 percent confidence interval:

0.4890177 0.5508292

sample estimates:

p

0.52

Do you see that the two tests are equivalent? They have the same test statistic, degrees of freedom, and p-value.

Below is an example of how to perform a goodness-of-fit test in Python. Because the goodness-of-fit test is a chi-squared test, we use the function chisquare() in scipy.stats. The argument f_obs is the observed count vector of length $k$, and the argument f_exp is the vector of expected counts, the number of observations we expect in each category if the data follow the hypothesized proportions. The function does not provide the critical value, but it gives us the p-value.

from scipy.stats import chisquare

# Observed frequencies

obs = [205, 26, 25, 19]

# Expected proportions

pi_0 = [0.72, 0.07, 0.12, 0.09]

# Convert expected proportions to expected frequencies

expected = [p * sum(obs) for p in pi_0]

# Chi-square goodness-of-fit test

chisquare(f_obs=obs, f_exp=expected)

Power_divergenceResult(statistic=5.889610389610387, pvalue=0.11710619130850633)

The chi-squared test can also be used for the two-sided one-sample proportion test. Suppose we are doing the following test:

$$H_0: \pi = \pi_0 \quad \text{vs.} \quad H_1: \pi \ne \pi_0$$

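Because there are only two categories, we automatically know the proportion of the other category, $1 - \pi$, once $\pi$ is given. So this is a goodness-of-fit test with $k = 2$ categories and hypothesized proportions $(\pi_0, 1 - \pi_0)$, and the chi-squared statistic has $k - 1 = 1$ degree of freedom.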

Suppose $\pi_0 = 0.5$, and we observe 520 successes and 480 failures in $n = 1000$ trials.

# Observed frequencies

obs = [520, 480]

# Expected proportions

expected = [0.5, 0.5]

# Convert expected proportions to expected frequencies

expected_freq = [p * sum(obs) for p in expected]

# Chi-square test

chisq_test_res = chisquare(f_obs=obs, f_exp=expected_freq)

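chisq_test_res

Power_divergenceResult(statistic=1.6, pvalue=0.20590321073206466)

In the language of the one-sample proportion test, we have 520 successes in $n = 1000$ trials, so $\hat{\pi} = 0.52$, and we test $H_0: \pi = 0.5$ against a two-sided alternative: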

from statsmodels.stats.proportion import proportions_ztest, proportion_confint

z_test_stat, pval = proportions_ztest(count=520, nobs=1000, value=0.5,

alternative='two-sided')

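pval

0.2055402182226186

proportion_confint(count=520, nobs=1000, alpha=0.05, method='normal')

(0.48903505011072596, 0.5509649498892741)

Do you see that the two tests are equivalent? If you look at the proportion test output carefully, you'll find that the square of the $z$ test statistic is very close to the chi-squared test statistic. They are not identical here because proportions_ztest() by default uses the sample proportion $\hat{\pi} = 0.52$, rather than $\pi_0 = 0.5$, in the standard error.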

z_test_stat

1.2659242088545843

z_test_stat ** 2

1.6025641025641053

chisq_test_res.statistic

1.6

Both lead to the same conclusion.
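To see the exact equivalence, here is a minimal sketch that computes the $z$ statistic by hand with $\pi_0$ in the standard error, $z = (\hat{\pi} - \pi_0)/\sqrt{\pi_0(1 - \pi_0)/n}$, the form whose square matches R's prop.test() output above. With this standard error, $z^2$ equals the chi-squared statistic and the two-sided $z$ p-value equals the chi-squared p-value. (In statsmodels, proportions_ztest() also accepts a prop_var argument for supplying the proportion used in the variance.)

```python
from math import sqrt
from scipy.stats import norm, chisquare

count, n, pi_0 = 520, 1000, 0.5
pi_hat = count / n

# z statistic using the null proportion pi_0 in the standard error
z = (pi_hat - pi_0) / sqrt(pi_0 * (1 - pi_0) / n)
p_z = 2 * norm.sf(abs(z))  # two-sided p-value

# Chi-squared goodness-of-fit test on the two categories (success, failure)
res = chisquare(f_obs=[count, n - count], f_exp=[n * pi_0, n * (1 - pi_0)])

print(round(z ** 2, 6), round(float(res.statistic), 6))
print(round(float(p_z), 6), round(float(res.pvalue), 6))
```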

20.2 Test of Independence

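So far we have considered one categorical variable with a general number of categories $k$. In this section, we consider two categorical variables and test whether they are independent.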

Contingency Table and Expected Count

Contingency Table

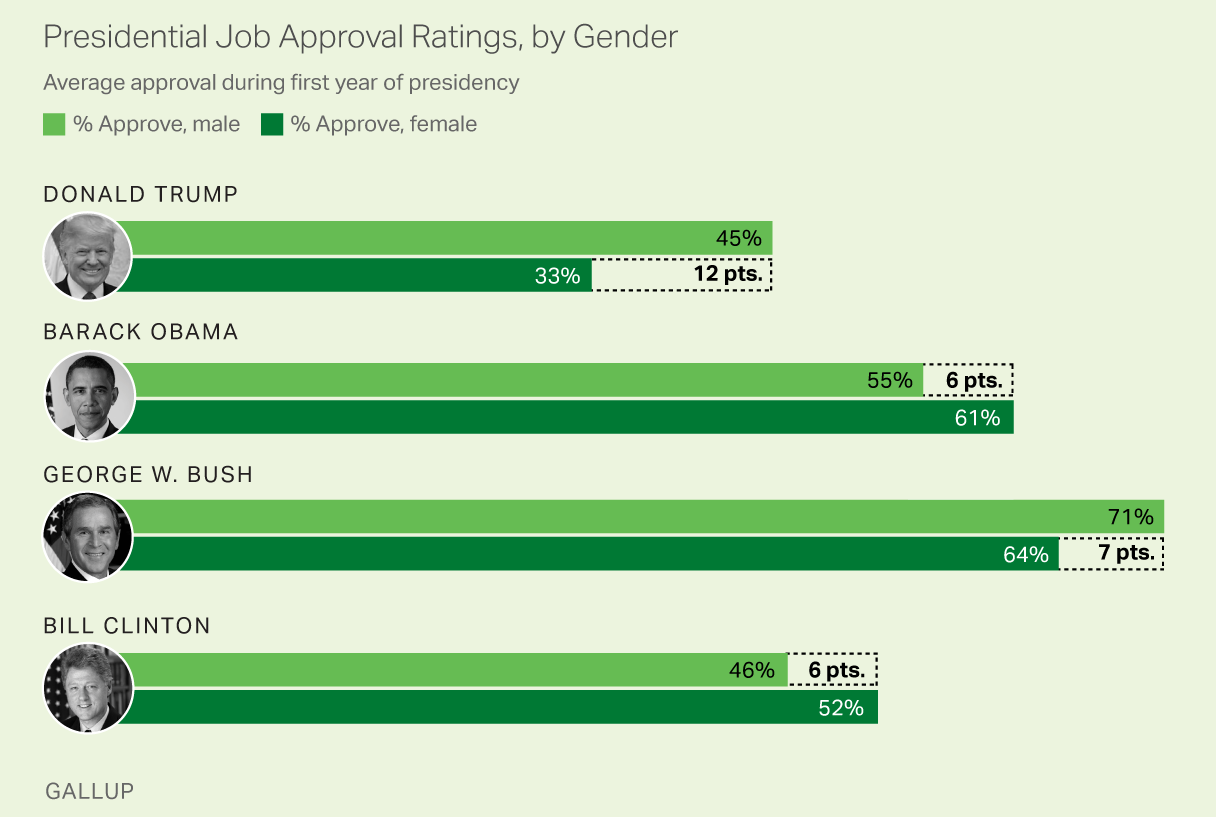

Let’s start with an example. A popular question in politics is “Does the opinion of the President’s job performance depend on gender?” This question may involve two categorical variables such as

Job performance: approve, disapprove, no opinion

Gender: male, female

To answer such a question, as in every other test, we collect data and see whether there is sufficient evidence to say that the two variables are dependent. When two categorical variables are involved, we usually summarize the data in a contingency table, or two-way frequency (count) table, as shown below.

| | Approve | Disapprove | No Opinion | Total |
|---|---|---|---|---|
| Male | 18 | 22 | 10 | 50 |
| Female | 23 | 20 | 12 | 55 |
| Total | 41 | 42 | 22 | 105 |

When we consider a two-way table, we often would like to know whether the variables are related in any way, that is, whether they are dependent (versus independent). If the President's approval has nothing to do with gender, the distributions of opinions on job performance in the male and female groups should be similar. In other words, whether a person is male or female does not affect how that person views the President's job performance.

Expected Count

The idea is similar to the goodness-of-fit test. We first calculate the expected count in each cell of the contingency table (excluding the Total row and column), that is, the count we expect to see if the two variables are independent. As always, we do our analysis under the null hypothesis that the two variables have no relationship. The expected counts are shown in parentheses below.

| | Approve | Disapprove | No Opinion | Total |
|---|---|---|---|---|
| Male | 18 (19.52) | 22 (20) | 10 (10.48) | 50 |
| Female | 23 (21.48) | 20 (22) | 12 (11.52) | 55 |
| Total | 41 | 42 | 22 | 105 |

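The expected count for the cell in row $i$ and column $j$ is

$$E_{ij} = \frac{(\text{row } i \text{ total}) \times (\text{column } j \text{ total})}{\text{grand total}}.$$

This follows from independence: if the two variables are independent, the probability of falling in row $i$ and column $j$ is the product of the row and column probabilities, estimated by $\frac{\text{row } i \text{ total}}{n} \times \frac{\text{column } j \text{ total}}{n}$ with $n$ the grand total, and multiplying by $n$ gives $E_{ij}$. For example, the expected number of males who approve is $E_{11} = \frac{50 \times 41}{105} = 19.52$.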
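Computing every cell this way is an outer product of the row totals and the column totals, divided by the grand total. A minimal NumPy sketch for the job-approval table:

```python
import numpy as np

# Rows: Male, Female; columns: Approve, Disapprove, No Opinion
observed = np.array([[18, 22, 10],
                     [23, 20, 12]])

row_totals = observed.sum(axis=1)  # [50, 55]
col_totals = observed.sum(axis=0)  # [41, 42, 22]
grand_total = observed.sum()       # 105

# E_ij = (row i total) * (column j total) / grand total, for all cells at once
expected = np.outer(row_totals, col_totals) / grand_total
print(np.round(expected, 2))
```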

Once all expected counts are obtained, we are ready to perform the test of independence.

Test of Independence Procedure

The test of independence requires that every expected count $E_{ij}$ be at least five.

As we discussed before, we assume the variables are independent unless strong evidence says they are not. So our hypotheses are

$H_0$: the two variables are independent vs. $H_1$: the two variables are dependent.

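The test statistic formula is similar to that of the goodness-of-fit test, because the test of independence is also a chi-squared test. For a table with $r$ rows and $c$ columns, the chi-squared test statistic is

$$\chi^2_{test} = \sum_{i=1}^{r}\sum_{j=1}^{c} \frac{(O_{ij} - E_{ij})^2}{E_{ij}},$$

where $O_{ij}$ and $E_{ij}$ are the observed and expected counts of the cell in row $i$ and column $j$. Under $H_0$, it approximately follows the chi-squared distribution with $(r-1)(c-1)$ degrees of freedom.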

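The chi-squared test is right-tailed, so we reject $H_0$ if $\chi^2_{test} > \chi^2_{\alpha, (r-1)(c-1)}$, or equivalently if the p-value $P(\chi^2_{(r-1)(c-1)} > \chi^2_{test})$ is less than $\alpha$.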
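The whole procedure fits in a short function. The sketch below, a hypothetical helper named independence_test, computes the expected counts, the statistic $\sum_i\sum_j (O_{ij}-E_{ij})^2/E_{ij}$, the degrees of freedom $(r-1)(c-1)$, the critical value, and the right-tail p-value with scipy.stats.chi2. It should match scipy.stats.chi2_contingency() whenever the degrees of freedom exceed 1; for a 2 × 2 table, chi2_contingency() applies Yates' continuity correction by default unless correction=False is passed.

```python
import numpy as np
from scipy.stats import chi2, chi2_contingency

def independence_test(observed, alpha=0.05):
    """Chi-squared test of independence for an r x c table (illustrative helper)."""
    observed = np.asarray(observed, dtype=float)
    r, c = observed.shape
    n = observed.sum()
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0)) / n
    stat = np.sum((observed - expected) ** 2 / expected)
    df = (r - 1) * (c - 1)
    crit = chi2.ppf(1 - alpha, df)  # reject H0 if stat > crit
    p_value = chi2.sf(stat, df)     # right-tail probability
    return stat, df, crit, p_value

table = [[18, 22, 10], [23, 20, 12]]
stat, df, crit, p_value = independence_test(table)
print(round(stat, 5), df, round(crit, 6), round(p_value, 4))

# Cross-check against SciPy (df = 2, so no continuity correction is applied)
ref_stat, ref_p, ref_dof, ref_expected = chi2_contingency(table)
print(np.isclose(stat, ref_stat), np.isclose(p_value, ref_p))
```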

Example

| | Approve | Disapprove | No Opinion | Total |
|---|---|---|---|---|
| Male | 18 (19.52) | 22 (20) | 10 (10.48) | 50 |
| Female | 23 (21.48) | 20 (22) | 12 (11.52) | 55 |
| Total | 41 | 42 | 22 | 105 |

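The test statistic is

$$\chi^2_{test} = \frac{(18-19.52)^2}{19.52} + \frac{(22-20)^2}{20} + \frac{(10-10.48)^2}{10.48} + \frac{(23-21.48)^2}{21.48} + \frac{(20-22)^2}{22} + \frac{(12-11.52)^2}{11.52} \approx 0.65.$$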

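With $\alpha = 0.05$, the critical value is $\chi^2_{0.05, (2-1)(3-1)} = \chi^2_{0.05, 2} = 5.991$. Since $0.65 < 5.991$, we do not reject $H_0$: the data do not provide sufficient evidence that opinion of the President's job performance depends on gender.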

Calculating all the expected counts is tedious, especially when the variables have many categories. In practice, we rarely calculate them by hand; we use software instead.

Below is an example of how to perform the test of independence using R. Since the test of independence is a chi-squared test, we still use the chisq.test() function. This time we need to prepare the contingency table as a matrix and put the matrix in the x argument of the function. That’s it! R does everything for us. If we save the result as an object like ind_test, which is an R list, we can access information related to the test, such as the expected counts ind_test$expected.

contingency_table <- matrix(c(18, 22, 10, 23, 20, 12), nrow = 2, byrow = TRUE)

contingency_table

[,1] [,2] [,3]

[1,] 18 22 10

[2,] 23 20 12

## Using chisq.test() function

(ind_test <- chisq.test(x = contingency_table))

Pearson's Chi-squared test

data: contingency_table

X-squared = 0.65019, df = 2, p-value = 0.7225

## extract expected counts

ind_test$expected

[,1] [,2] [,3]

[1,] 19.52381 20 10.47619

[2,] 21.47619 22 11.52381

## critical value

qchisq(0.05, df = (2 - 1) * (3 - 1), lower.tail = FALSE)

[1] 5.991465

Below is an example of how to perform the test of independence using Python. The function we use is chi2_contingency() in scipy.stats, since the test of independence relies on a contingency table. We first prepare the contingency table as a matrix and put the matrix in the observed argument of the function. That’s it! Python does everything for us. If we save the result as an object like ind_test, we can access information related to the test, such as the expected counts ind_test.expected_freq.

import numpy as np

from scipy.stats import chi2_contingency, chi2

contingency_table = np.array([[18, 22, 10], [23, 20, 12]])

contingency_table

array([[18, 22, 10],
       [23, 20, 12]])

# Chi-square test of independence

ind_test = chi2_contingency(observed=contingency_table)

ind_test.statistic

0.6501914936504742

ind_test.pvalue

0.7224581772535765

ind_test.dof

2

ind_test.expected_freq

array([[19.52380952, 20.        , 10.47619048],
       [21.47619048, 22.        , 11.52380952]])

# Critical value for chi-square distribution

chi2.ppf(0.95, df=(2-1)*(3-1))

5.991464547107979

20.3 Test of Homogeneity

Test of homogeneity is a generalization of the two-sample proportion test. This test determines if two or more populations (or subgroups of a population) have the same distribution of a single categorical variable having two or more categories.

More to be added.

20.4 Exercises

- A researcher has developed a model for predicting eye color. After examining a random sample of parents, she predicts the eye color of the first child. The table below lists the eye colors of the offspring. On the basis of her theory, she predicted that 87% of the offspring would have brown eyes, 8% would have blue eyes, and 5% would have green eyes. Use a 0.05 significance level to test the claim that the actual frequencies correspond to her predicted distribution.

| Eye Color | Brown | Blue | Green |
|---|---|---|---|
| Frequency | 127 | 21 | 5 |

- In a study of high school students at least 16 years of age, researchers obtained survey results summarized in the accompanying table. Use a 0.05 significance level to test the claim of independence between texting while driving and driving when drinking alcohol. Are these two risky behaviors independent of one another?

| | Drove after drinking alcohol: Yes | Drove after drinking alcohol: No |
|---|---|---|
| Texted while driving | 720 | 3027 |
| Did not text while driving | 145 | 4472 |