sample(x = bag_balls, size = 1)

# [1] "red"12 Random Sampling and Sampling Distribution

This chapter talks about sampling. When we sample, simulate, or draw a value of a random variable following some distribution, we get a realized value of that random variable that varies from sample to sample due to its random or stochastic property. Sampling techniques are used frequently in statistical inference and various fields of science, engineering, and mathematics. Here in particular, we talk about Monte Carlo simulation and sampling distribution. Other sampling methods for statistical inference, such as bootstrapping, will be discussed in Chapter 15.

12.1 Monte Carlo Simulation

From Wikipedia, there is no consensus on how Monte Carlo should be defined. In short, Monte Carlo simulation is a repeated random sampling method. Therefore, when using Monte Carlo method, we do not just simulate one single value, but generate numerous realized values of random variables. Also these values are generated with randomness.

Monte Carlo methods can be used to approximate the probability of some event happening. Take categorical data for example, once we collect the Monte Carlo samples, we can calculate the relative frequency of each category in the data, and use their relative frequency as the probability that a specific category happens when a sample is drawn from some target distribution.

Suppose there are 2 red balls and 3 blue ones in a bag. Apparently, if each ball is equally likely to be chosen (picking at random), and if we Do know in advance that 2 reds and 3 blues are in the bag, then the probability that a red ball is drawn is 2/5. The question is

The idea of Monte Carlo simulation is that we repeat the experiment (drawing a ball) a large number of times, then obtain the relative frequency of red ball to approximate the probability of getting a red ball.

Let me first create a vector bag_balls that saves the information about the color of ball in the bag. It’s there, but let’s just don’t see it at this moment.

In R, to randomly sample an element of a vector, we can use the function sample(). For example, the following code shows how to randomly draw a ball from that bag:

In Python, to randomly sample an element of a vector, we can use the function np.random.choice(). For example, the following code shows how to randomly draw a ball from that bag:

np.random.choice(bag_balls, size=1)

# array(['red'], dtype='<U4')It produces one random outcome. This time we get a red ball. We can (put the red ball back in to the bag) draw a ball again by running the code:

sample(x = bag_balls, size = 1)

# [1] "blue"This time we get a blue ball. To do a Monte Carlo simulation, we can use the replicate() function, which allows us to repeat the same job a number of times

The argument n is an integer indicating the number of replications. The argument expr is the expression (usually a function call) to evaluate repeatedly. Here I repeatedly draw a ball 100 times. The object mc_sim contains 100 colors collected from the bag of colored balls.

str(mc_sim)

# chr [1:100] "blue" "blue" "blue" "blue" "blue" "blue" "red" "blue" "red" ...How do we get the relative frequency of category? We can first check the frequency table or frequency distribution using table() function:

# mc_sim

# blue red

# 58 42To get the relative frequency, just divided by the total number of repetitions.

# mc_sim

# blue red

# 0.58 0.42np.random.choice(bag_balls, size=1)

# array(['blue'], dtype='<U4')This time we get a blue ball. To do a Monte Carlo simulation, we repeatedly draw one ball from the bag using a for loop within a list, so that the every draw is saved in a list.

In the code, range(100) creates a sequence of 100 integers from 0 to 99. Then for each integer, we call it i, we do the sampling np.random.choice(bag_balls). Every sampling result is wrapped up in a list [].

mc_sim = [np.random.choice(bag_balls) for i in range(100)]

mc_sim[0:10]

# ['blue', 'red', 'blue', 'blue', 'red', 'red', 'red', 'red', 'red', 'blue']How do we get the relative frequency of category? We can first turn a list into a pd.Series so that we can use the method value_count() to generate the frequency table or frequency distribution:

import pandas as pd

freq_table = pd.Series(mc_sim).value_counts()To get the relative frequency, just divided by the total number of repetitions.

freq_table / 100

# blue 0.63

# red 0.37

# Name: count, dtype: float64So if there are 5 balls in the bag, how many red balls in the bag? Well I would guess there are 2 red balls and 3 blue balls in the bag because the chance of getting a red ball is about 40%. We just demo a Monte Carlo simulation for categorical data.

It is important to know that Monte Carlo works better when the number of repetitions is large. When we repeatedly sample the value only few times, we may unluckily not be able see the entire picture of the target distribution, and get stuck in some part of the distribution. As a result, the Monte Carlo method cannot faithfully show the whole picture, leading to a biased approximation. In our example, notice that the first 6 random draws are all blue. If we just stop there, we may make a conclusion that the balls in the bag only have one color blue because we “never” see any other colors in our experiment. In this case, 6 samples are too small, and the “true color” has not shown. Again, generally it needs sufficiently large Monte Carlo samples to uncover the target distribution. When we repeatedly draw a ball 10,000 times, we have

Note that the relative frequency of red ball is now so close to the true probability 0.4. Now we are more sure that exactly two red balls in the bag!

12.2 Random Number Generation

When we do sampling using a computer, we use a so-called random number generator to generate a random outcome, and sampling results vary from sample to sample. This is why this time you get red, next time you get blue, and you don’t know you will get a blue or red next time you do the random sampling.

Interestingly, a computer cannot generate a pure random outcome, and a pseudorandom number generator is usually used to generate a sequence of numbers whose properties approximate the properties of sequences of truly random numbers. Because of this, we can actually control random generation results using a random seed. For example, if you set the seed at 2024, then every time you do random sampling, you always get the same sampling result.

To ensure the results are exactly the same every time you do sampling, in R we set the random seed to a specific number using the function set.seed().

Here we draw three balls at random. Because the two random seeds are identical, the two “random” sampling results are identical too.

The sample() function can actually draw lots of balls. But be careful about the with and without replacement issue. The default setting is draw without replacement. So when we try to draw 6 balls at once, it renders an error, saying cannot take a sample larger than the population when replace = FALSE.

sample(bag_balls, 6)

# Error in sample.int(length(x), size, replace, prob):

# cannot take a sample larger than the population when 'replace = FALSE'Basically we only 5 balls, without replacement, once a ball is drawn, the ball is out of bag, and only 4 balls remain in the bag. So without replacement, we can at most draw 5 balls, which is the “entire population”. If we wanna draw more than 5 times from the same bag, we got to specify replace = TRUE in the sample() function.

sample(bag_balls, 6, replace = TRUE)

# [1] "blue" "red" "blue" "red" "red" "red"W can actually do the Monte Carlo simulation using just the sample() function like sample(balls, size = B, replace = TRUE). This means that we return the ball back to the bag after drawing it, and we repeatedly draw a ball continually B times basically under the same conditions.

Not surprisingly, we get results very similar to those previously obtained using the replicate() function. Moreover, if we use the same random seed on sample() and replicate(), their sampling results will be the same:

To ensure the results are exactly the same every time you do sampling, in R we set the random seed to a specific number using the function np.random.seed().

Note that by default np.random.choice() does sampling with replacement. We need to set replace=False.

# Sampling three balls without replacement

np.random.seed(2024)

np.random.choice(bag_balls, size=3, replace=False)

# array(['red', 'blue', 'blue'], dtype='<U4')## same result!

np.random.seed(2024)

np.random.choice(bag_balls, size=3, replace=False)

# array(['red', 'blue', 'blue'], dtype='<U4')Here we draw three balls at random. Because the two random seeds are identical, the two “random” sampling results are identical too.

The np.random.choice() function can actually draw lots of balls. We only 5 balls, without replacement, once a ball is drawn, the ball is out of bag, and only 4 balls remain in the bag. So without replacement, we can at most draw 5 balls, which is the “entire population”. If we wanna draw more than 5 times from the same bag, we got to specify replace should be True (by default) in the np.random.choice() function.

# Sampling six balls with replacement

np.random.choice(bag_balls, size=6, replace=True)

# array(['blue', 'blue', 'red', 'red', 'blue', 'blue'], dtype='<U4')# Another Monte Carlo simulation with replacement

np.random.seed(1000)

mc_sim_rep = np.random.choice(bag_balls, size=100, replace=True)

pd.Series(mc_sim_rep).value_counts()

# blue 63

# red 37

# Name: count, dtype: int64Not surprisingly, we get results very similar to those previously obtained using the for loop within a list. Moreover, if we use the same random seed on np.random.choice(), their sampling results will be the same:

# Compare two methods

np.random.seed(2025)

mc1 = [np.random.choice(bag_balls) for i in range(100)]

pd.Series(mc1).value_counts()

# blue 58

# red 42

# Name: count, dtype: int64

np.random.seed(2025)

mc2 = np.random.choice(bag_balls, size=100, replace=True)

pd.Series(mc2).value_counts()

# blue 58

# red 42

# Name: count, dtype: int6412.3 Random Number Generation from Probability Distributions

Remember from the previous two chapters that we learn the dpqr family of functions for calculating probabilities, densities, and generating values from a probability distribution. To generate random values, we use rdist(n, ...). ... denotes the required parameter values for the distribution being drawn from. For example,

rbinom(n, size, prob)generatessizeand the probability of success specified inprob.rpois(n, lambda)generateslambda.rnorm(n, mean = 0, sd = 1)generatesmeanandsd. By default, the mean is zero, and the variance is one. Sornorm(5)draws five numbers from the standard normal distribution.

## the default mean = 0 and sd = 1 (standard normal)

rnorm(5)

# [1] 0.983 -0.409 -0.669 1.319 -1.085To generate random values, in Python we can use dist.rvs(..., size) from the scipy.stats module. size is the number of observations we would like to generate. The ... denotes the required parameter values for the distribution being drawn from. For example,

-

norm.rvs(loc, scale, size)generatessizeobservations from the normal distribution with mean and standard deviation specified inlocandscale. By default, the mean is zero, and the variance is one. Sonorm.rvs(size=5)draws five numbers from the standard normal distribution. Note that the wordsizecannot be skipped because it is the third argument. If we writenorm.rvs(5), the function will draw a number from a normal distribution with mean 5 and variance 1

from scipy.stats import norm

norm.rvs(size=5)

# array([-1.20602816, 1.30241414, -0.5761093 , 1.07786143, -0.64792117])NumPy offers np.random.randn() to sample from the standard normal distribution.

np.random.randn(5)

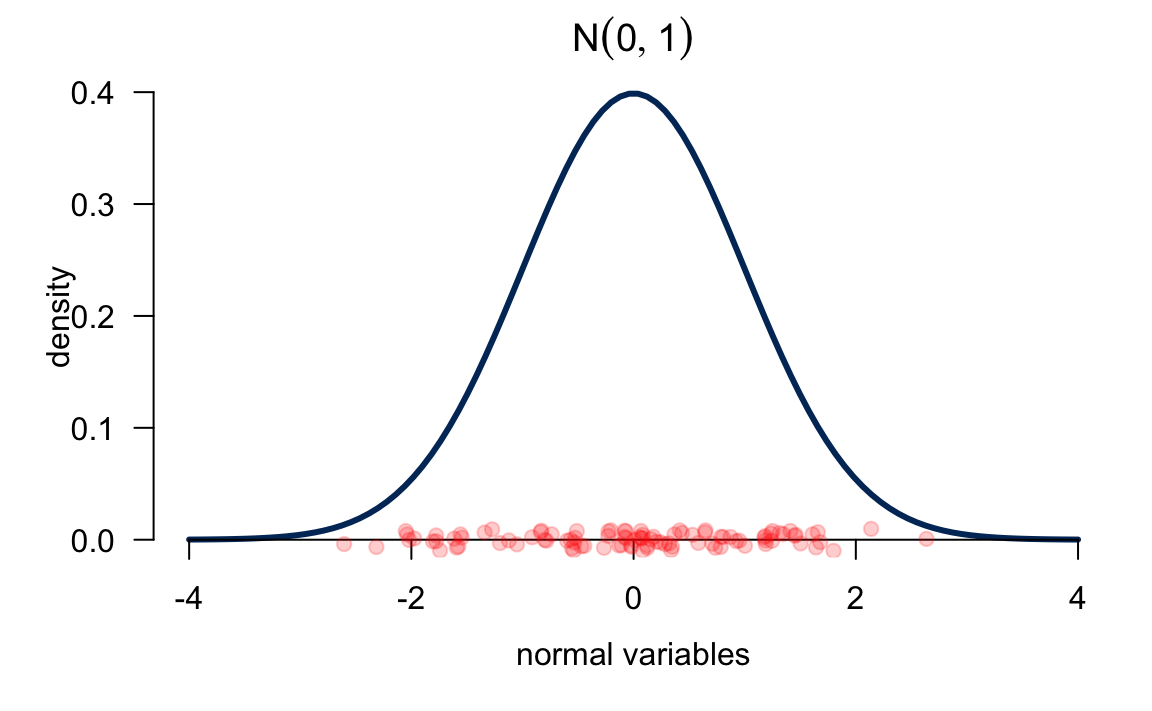

# array([-0.06048719, -1.09012056, 1.47339158, -1.9039995 , 1.81507773])All the 100 red points below are random draws from the standard normal distribution. You can see that most of the points are around the mean because the density around the mean is higher. Also, we can see when we draw normal samples, it is very difficult to get a sample with a very extreme value because its corresponding density value is quite small. Therefore, we tend to underestimate the population variance if we use the sample data to estimate it. And that’s one of the reason why we divided by

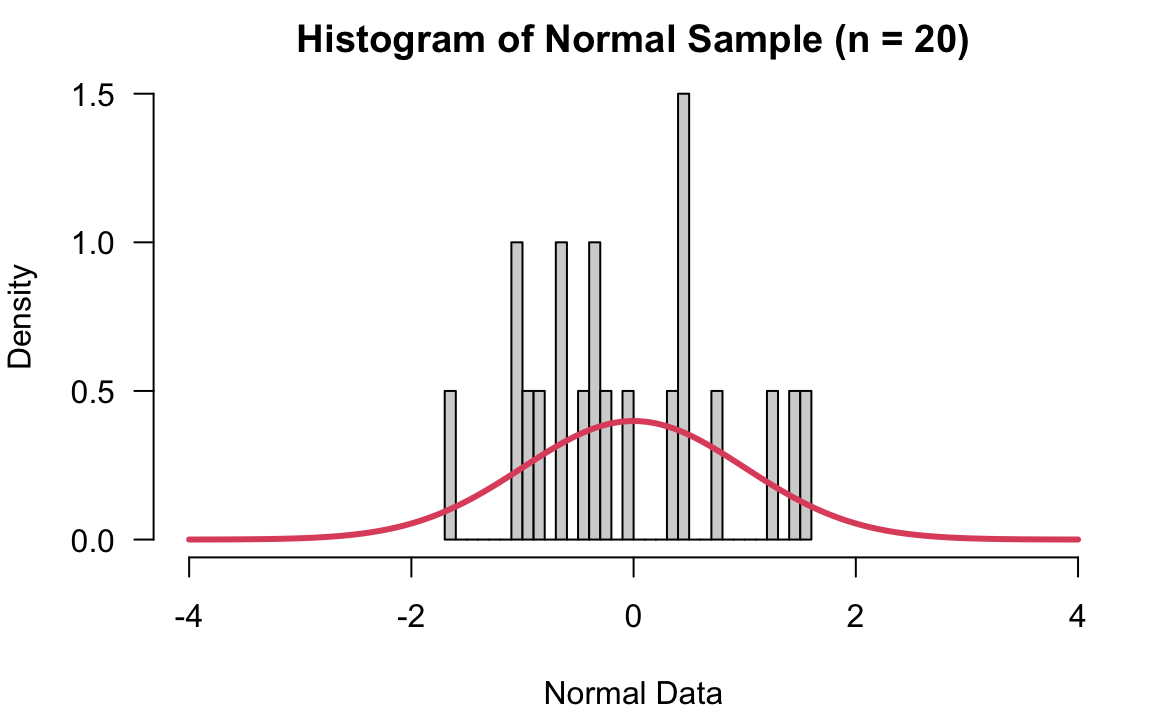

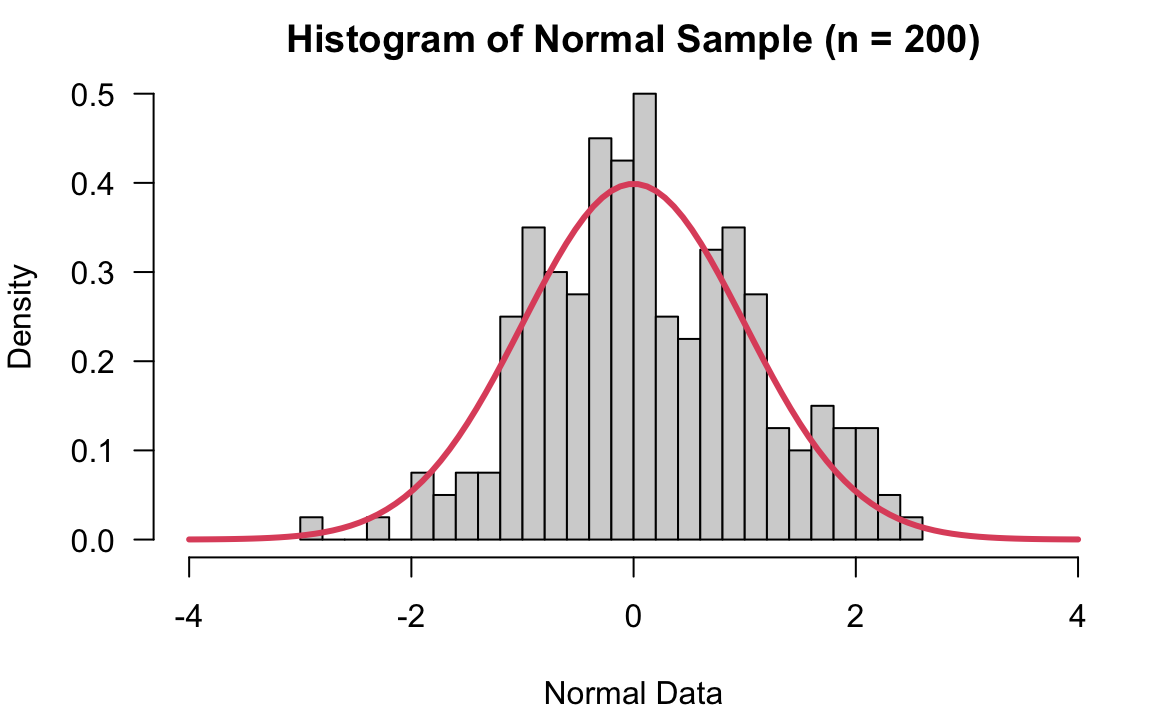

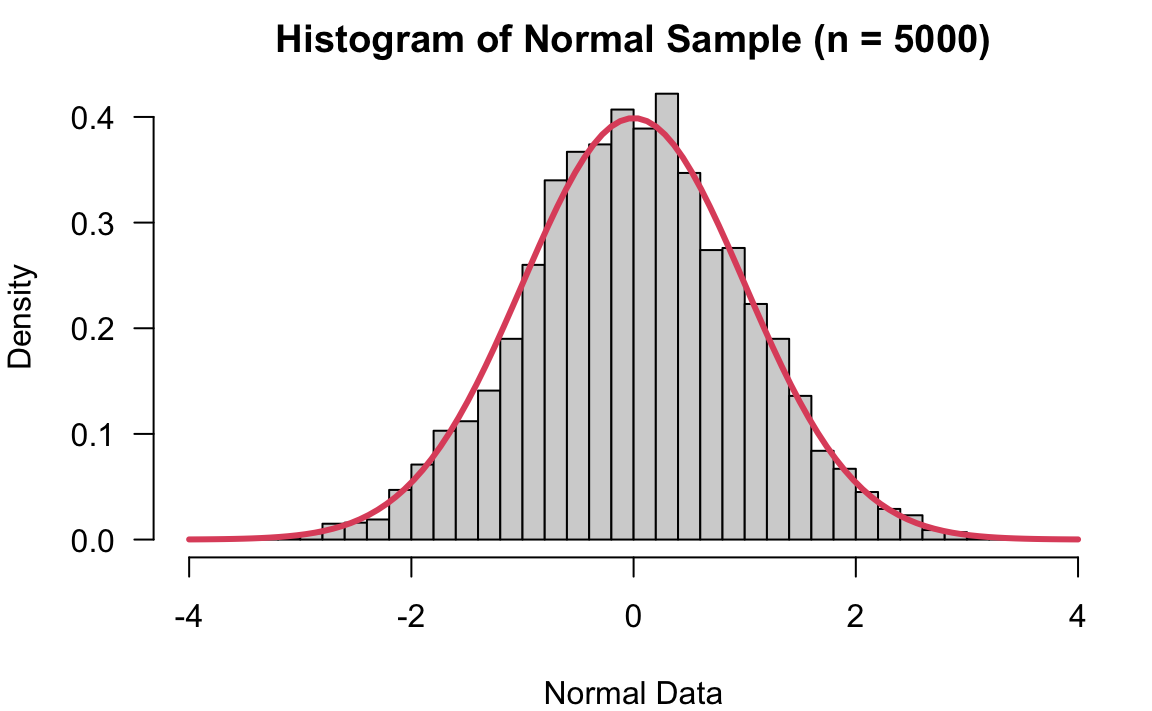

In statistics, we hope the sampled data to be as representative of population as possible. Suppose the population is normal. If the sample is a random sample, we hope the sample size to be large. The larger the sample size, the more representative of population the sample is.

When the sample size is just 20, you can see the sample data does not look very normal. When

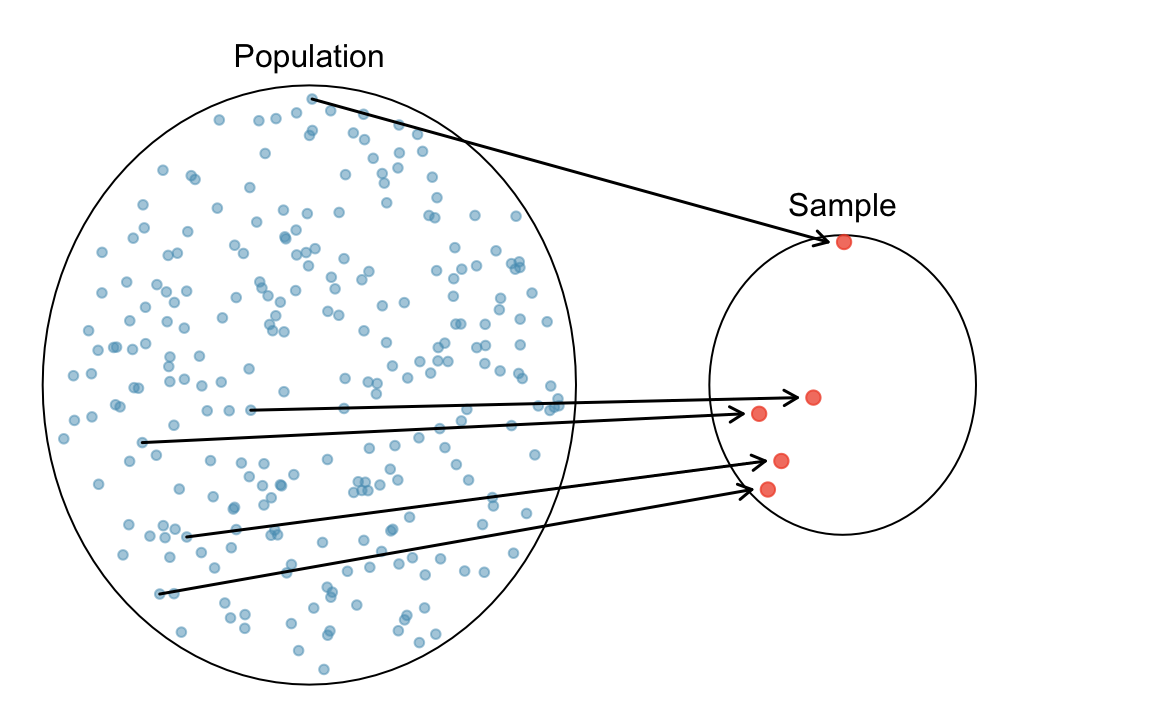

12.4 Why Use Sampling Distribution

When we do statistical inference, we assume each data point in the sample is drawn from the target population whose characteristic we focus is assumed to follow some probability distribution that is unknown to us. For example, suppose we would like to do inference about Marquette students’ mean height. We assume the student height follows a normal distribution

The sampling distribution is an important concept that connects probability and statistics together. We use the sampling distribution quite often, at least at the introductory level, to do statistical inference, and that’s why we need to learn what it is before doing statistical inference.

Parameter

Parameters in a probability distribution are the values describing the entire distribution. For example,

- Binomial: two parameters,

- Poisson: one parameter,

- Normal: two parameters,

As long as we know the values of the parameters of some distribution, we are able to calculate any probability of the distribution, and describe the distribution exactly. The entire distribution is controlled solely by the few parameters.

In statistics, we usually assume our target population follows some distribution, but its parameters are unknown to us. For example, we may assume human weight follows

Treat Each Data Point as a Random Variable

A statistical data analysis more or less involves some probability. How do we bring probability into the analysis? How is the data related to probability? Here we are going to learn some insight about it. Suppose in order to do a data analysis and inference about some population characteristic, the population mean for example, we collect a sample data of size

Here is how the probability comes into play. First, we assume the target population follows some probability distribution, say

Take Marquette students weight for example. Suppose the weight follows

# 134 110 177 183 144 150 95 200 145 189So the realized value of

Then we call such sample data

-

Before we actually collect the data, the data

- Once we collect the data, we know the realized value of these random variables:

12.5 Sampling Distribution

Any value computed from a sample

- The sample mean

- Sample variance

Since

Sampling Distribution of Sample Mean

Since

The following table shows 5 replicates of sample data of size 10. The first data set has 10 realized values 117 169 111 190 98 94 127 105 93 187. If we were to collect another data set, the first realized value of 117 because again

# x1 x2 x3 x4 x5 x6 x7 x8 x9 x10 mean

# Dataset-1 117 169 111 190 98 94 127 105 93 187 129

# Dataset-2 192 175 179 159 168 167 103 145 151 93 153

# Dataset-3 93 110 129 173 145 156 189 184 182 94 146

# Dataset-4 155 136 129 173 137 92 176 130 189 161 148

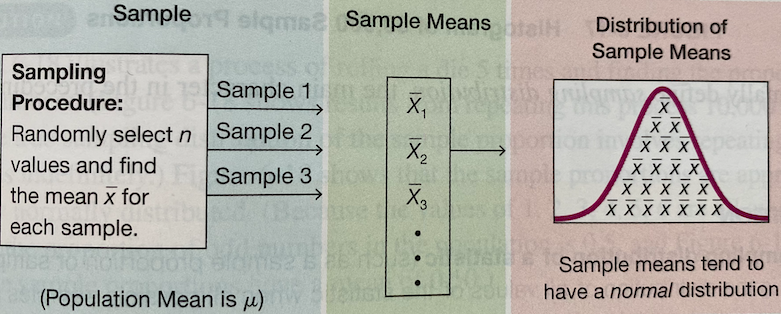

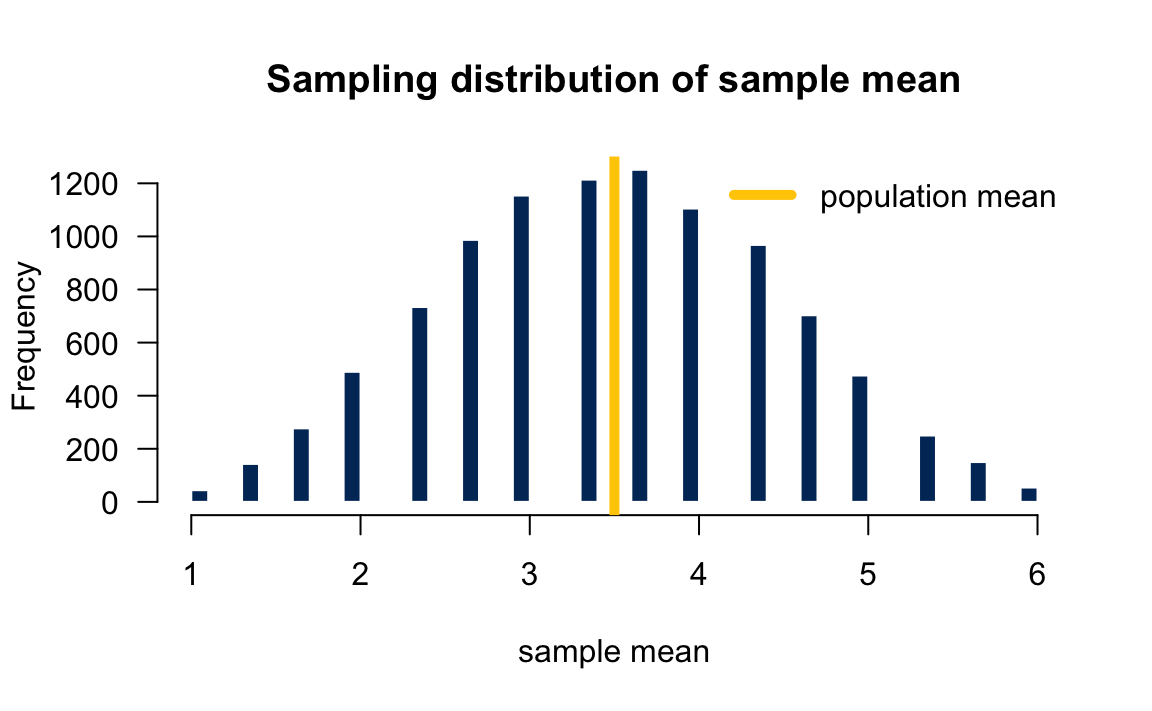

# Dataset-5 121 131 132 91 168 143 138 191 145 140 140Figure 12.2 illustrates how we collect the sample of sample means that represents its sampling distribution. In short, if we were able to collect lots of samples of size

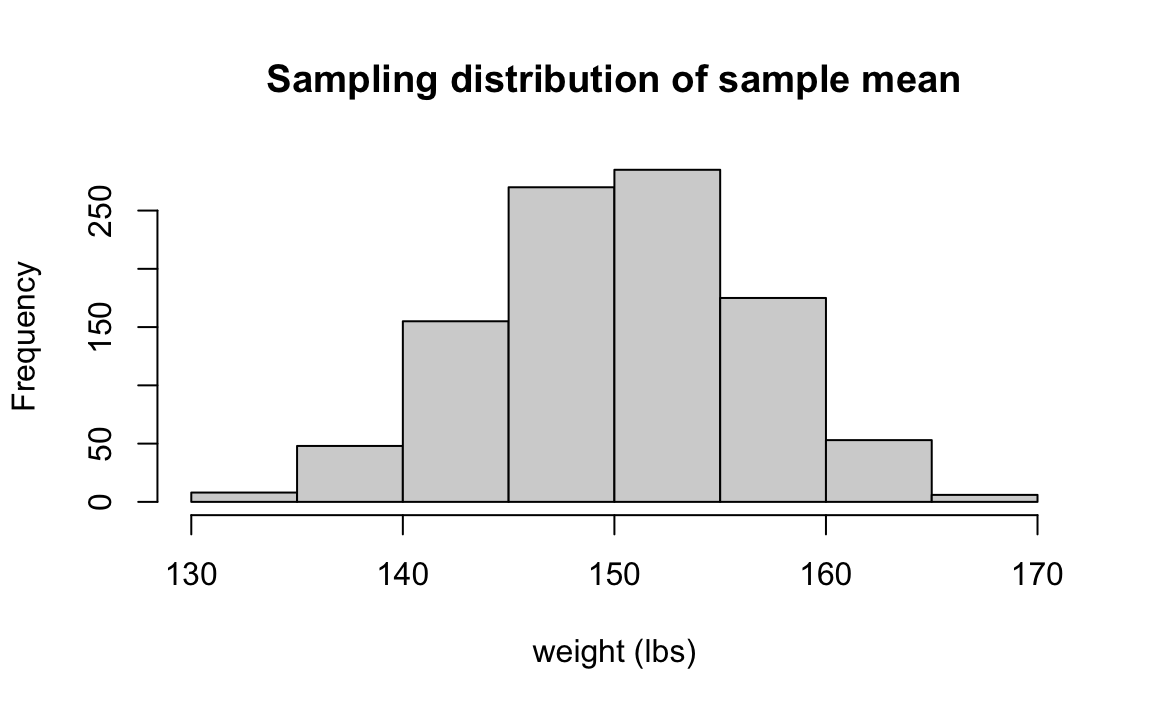

The following histogram shows the sampling distribution of the sample mean for the sample of size 10 when the sampling are repeated 1000 times. That is, we have 1000

The concept is a little abstract, and you may need time to digest it. The applet Sampling Distribution Applet provides animation of how the sampling distribution is formed. I highly recommend that you play with it, and figure out the entire building process.

This is an important question. So far we know each data point or random variable

-

The sample mean

# data 1: 30 40 50 60 70

# data 2: 0 5 50 95 100

# data 3: 0 5 10 15 220The three data sets all have

- The sample mean

The population distribution

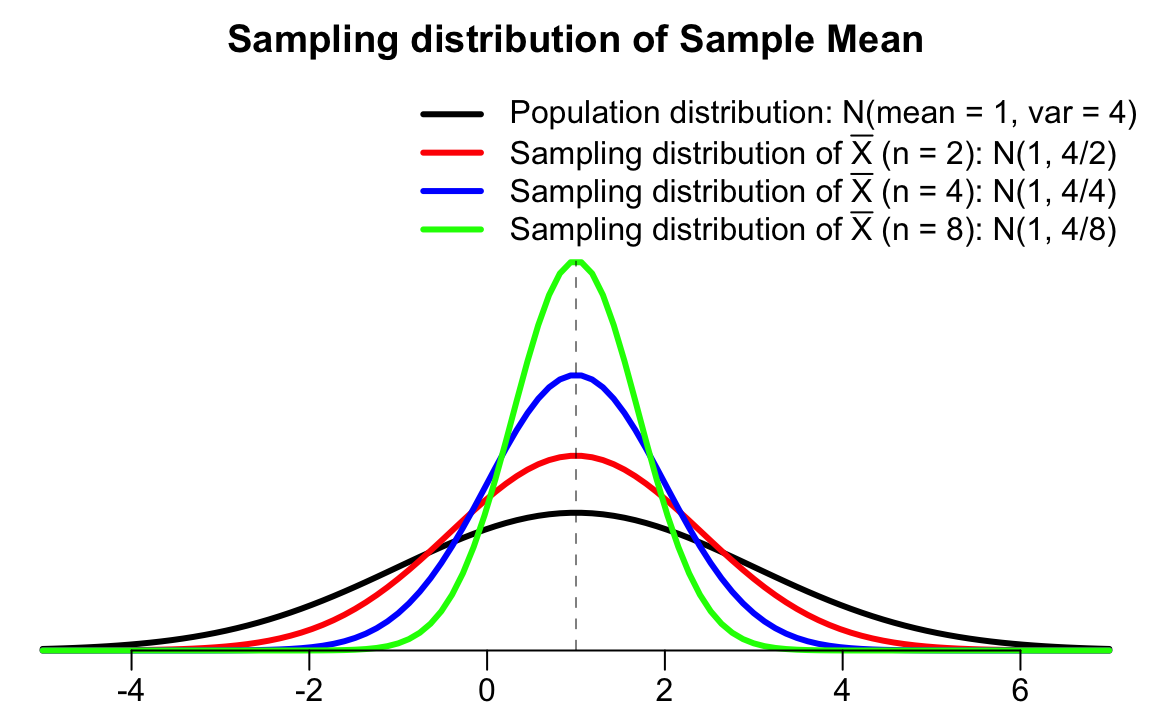

Suppose

If the population distribution is

Figure 12.3 depicts that the sampling distributions of the sample mean are less variable than the population distribution (black colored). As the sample size

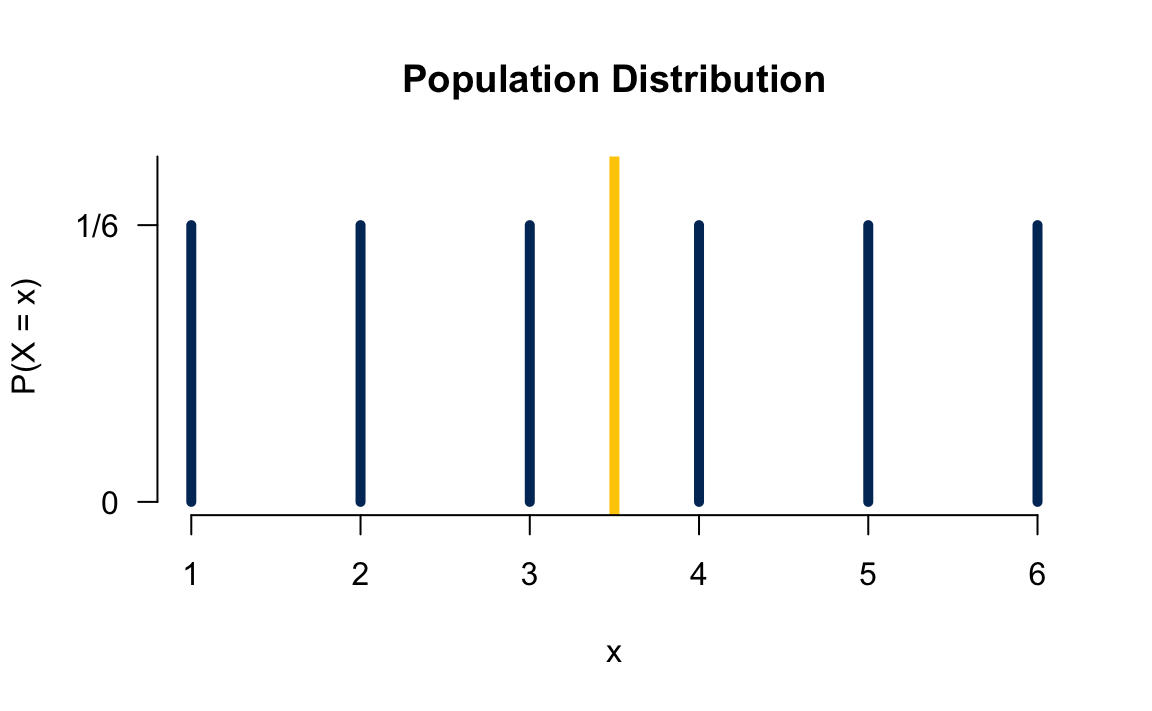

Example: Rolling a Die

Let’s see how we get a sampling distribution through an example. Suppose one rolls a fair die 3 times 🎲🎲 🎲 independently to obtain 3 values from the “population”

To obtain the sampling distribution of the sample mean, we first repeat the process 10,000 times, and get 10,000 corresponding sample means.

# x1 x2 x3 mean

# Dataset-1 6 3 2 3.67

# Dataset-2 4 2 5 3.67

# Dataset-3 3 2 6 3.67

# . . . . . .

# x1 x2 x3 mean

# Dataset-9998 5 4 6 5.00

# Dataset-9999 4 1 1 2.00

# Dataset-10000 4 6 1 3.67Then plot the histogram of those sampling means. Figure 12.5 shows the histogram of those 10000 sample means which can be treated as the sampling distribution of the sample mean. What do we see from the plots? First, since the population distribution is discrete, so is the sampling distribution. Second, both have the identical mean 3.5. 1 Third, the sampling distribution look more like a normal distribution.

12.6 Standardization of Sample Mean

Any random variable can be standardized. For a single random variable

Again, since

Example: Psychomotor Retardation

Suppose psychomotor retardation scores for a group of patients have a normal distribution with a mean of 930 and a standard deviation of 130.

- What is the probability that the mean retardation score of a random sample of 20 patients was between 900 and 960?

First, from the question assume that

What we want to compute is

Finally we just need to find

If we don’t do standardization, remember to use values in the original scale, and specify the mean and standard deviation. Keep in mind the standard deviation is

# Z-scores and probabilities

z1 = (960-930)/(130/np.sqrt(20))

z1

# 1.0320313742306721

z2 = (900-930)/(130/np.sqrt(20))

z2

# -1.0320313742306721

norm.cdf(z1) - norm.cdf(z2)

# 0.6979425798488381If we don’t do standardization, remember to use values in the original scale, and specify the mean and standard deviation. Keep in mind the standard deviation is

# Direct probability calculation

norm.cdf(960, loc=930, scale=130/np.sqrt(20)) - norm.cdf(900, loc=930, scale=130/np.sqrt(20))

# 0.6979425798488381The probability that the mean psychomotor retardation score of a random sample of 20 patients is between 900 and 960 is about 70%.

12.7 Exercises

- Head lengths of Virginia possums follow a normal distribution with mean 104 mm and standard deviation 6 mm.

- What is the sampling distribution of the sample mean of the head length when the sample size

- What is the sampling distribution of the sample mean of the head length when the sample size

- Assume that females have pulse rates that are normally distributed with a mean of 76.0 beats per minute and a standard deviation of 11.5 beats per minute.

- If 1 adult female is randomly selected, find the probability that her pulse rate is less than 81 beats per minute.

- If 18 adult female are randomly selected, find the probability that their mean pulse rate is less than 81 beats per minute.

The average or the empirical mean of those 10000