29 Logistic Regression

We have finished our discussion of linear regression. There are many other regression models and regression problems; if you want to learn more about them, take a regression analysis or machine learning course. In this chapter, we switch to classification, another large and popular topic, and focus on logistic regression. There are many other classification methods, for example K-nearest neighbors, generalized additive models, trees, random forests, boosting, and support vector machines. Each method has its own advantages and disadvantages, and no single method dominates the others. If you are interested in classification and want to learn more about classification methods, take a statistical machine learning course.

29.1 Regression vs. Classification

Linear regression assumes that the response

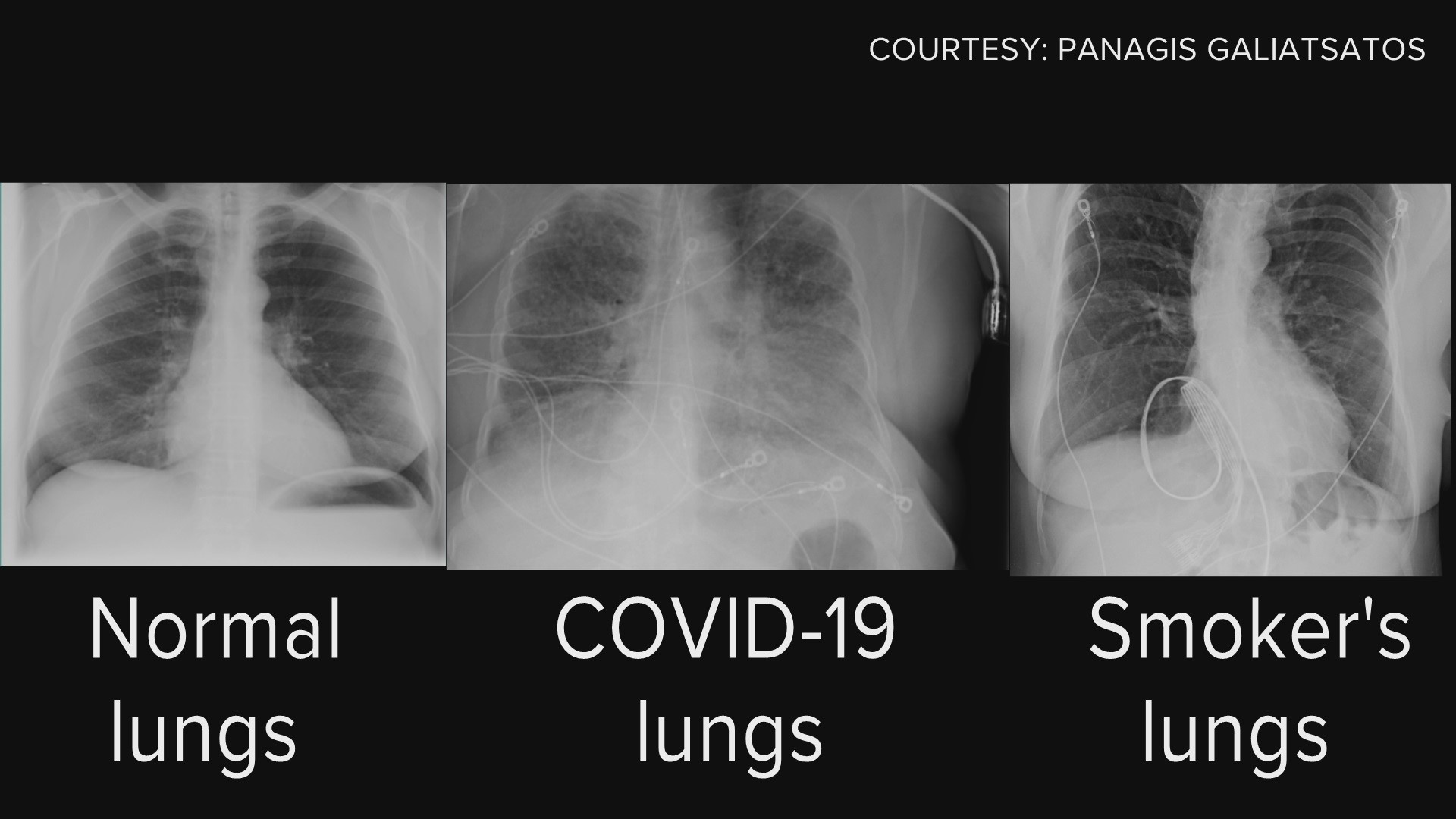

The process of predicting a categorical response is known as classification. There are many classification tools, or classifiers, used to predict a categorical response. For instance, logistic regression is a classifier.

Normal vs. COVID vs. Smoker’s Lungs

Fake vs. Fact

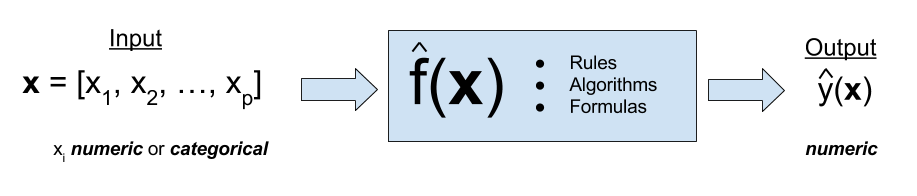

Regression Function

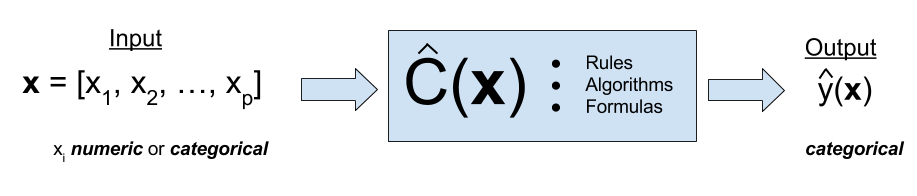

Like regression, we have our predictor inputs

Here, the classifier

Machine learning usually treats regression and classification as separate tasks: regression for a numerical response, and classification for a categorical response. In statistics, however, the term regression refers more generally to any model of the relationship between a response and predictors, whether the response is numerical or categorical. The machine learning definition is therefore somewhat narrow. A regression model in the general sense can handle a numerical response (the regression problem in machine learning) or a categorical response (the classification problem). That is why we can use logistic regression to solve classification problems.

Classification Example

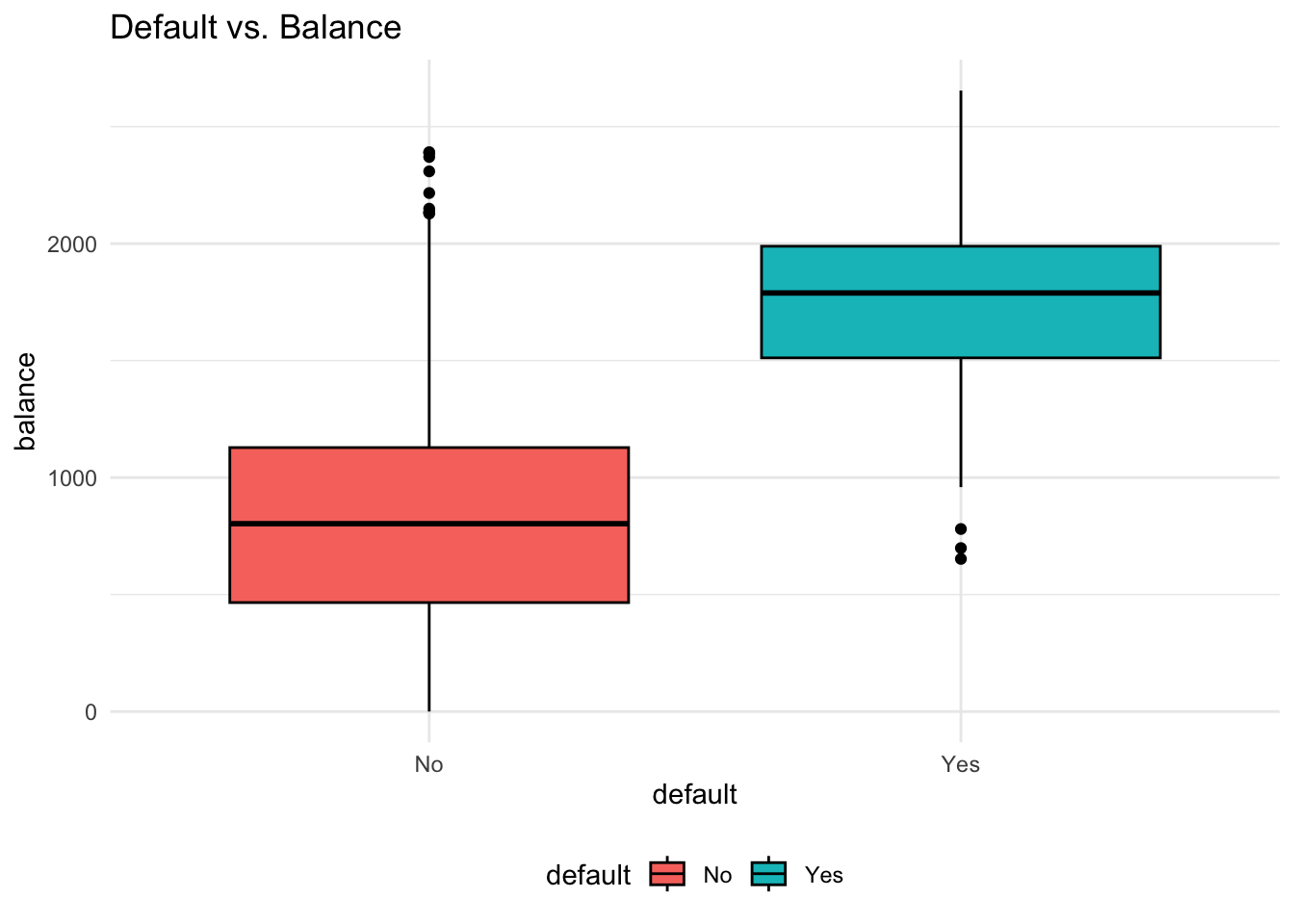

- Predict whether people will default on their credit card payment, where the response is yes or no, based on their monthly credit card balance.
- We use the sample data

Why Not Linear Regression?

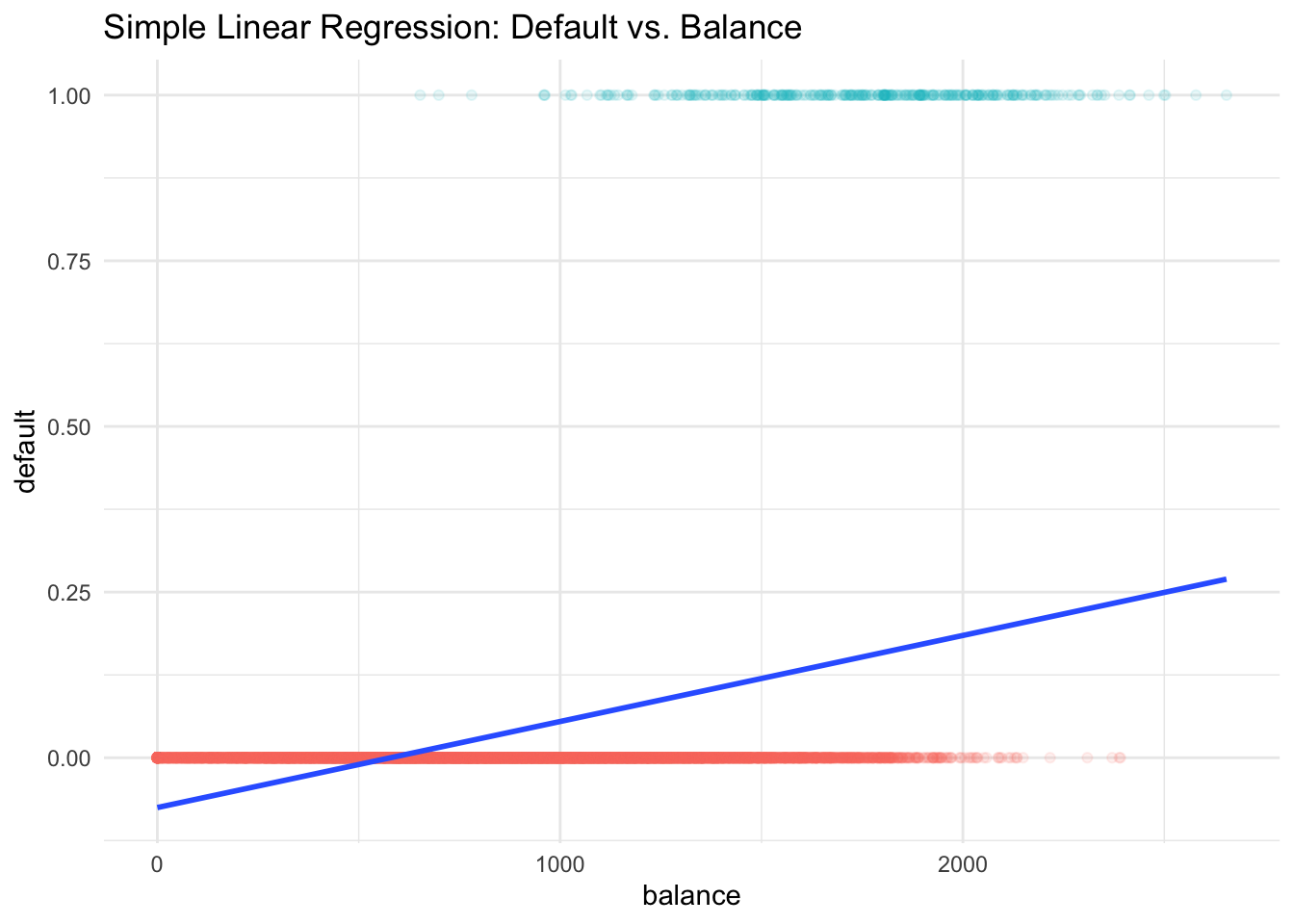

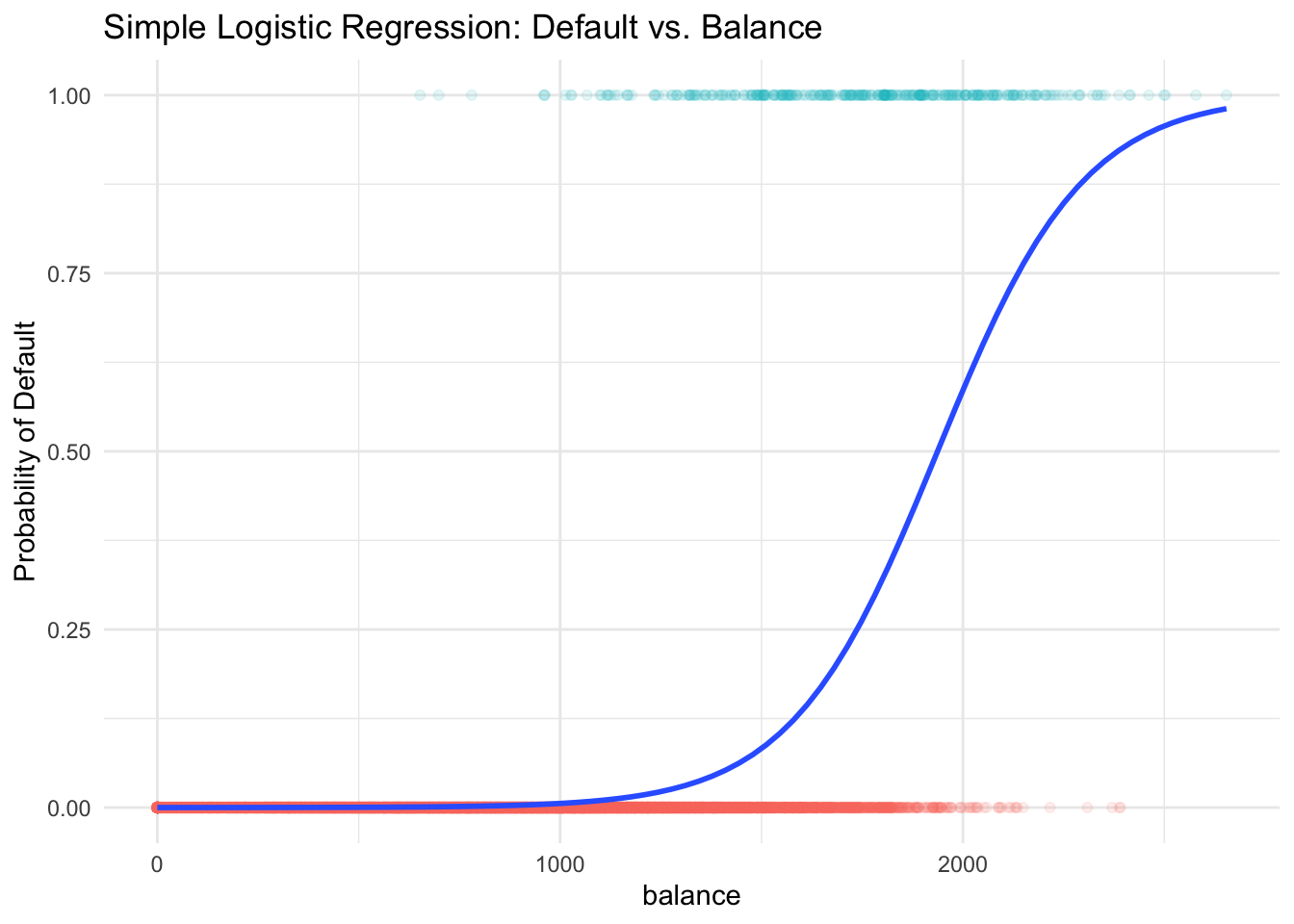

Before we jump into logistic regression, one question is, why not just fit a linear regression model? That is, we define 0 as not default and 1 as default, and treat 0 and 1 as numerical values, and run a linear regression model, like

In fact, the predicted value of

First, probability is always between 0 and 1, but some estimates here are outside the $[0, 1]$ interval.

Also, we assume the probability of default is linearly increasing with credit card balance, which is generally not true in reality.

In addition, the dummy variable approach

As a result, we need to use a model that is appropriate for categorical responses, like logistic regression.
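The first problem above can be seen in a few lines. The sketch below uses a small made-up data set (not the chapter's credit card data) and a hypothetical helper `linear_prediction()`; it fits a least-squares line to a 0/1 response and shows that the fitted "probabilities" can fall below 0 or above 1.

```python
# Made-up data for illustration only: monthly balance and a 0/1 default indicator.
balance = [250 * i for i in range(11)]         # 0, 250, ..., 2500
default = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1]    # 1 = default, 0 = no default

n = len(balance)
x_bar = sum(balance) / n
y_bar = sum(default) / n

# Ordinary least squares estimates for a single predictor.
sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(balance, default))
sxx = sum((x - x_bar) ** 2 for x in balance)
b1 = sxy / sxx
b0 = y_bar - b1 * x_bar

def linear_prediction(x):
    """Fitted value b0 + b1 * x, (mis)read as a probability of default."""
    return b0 + b1 * x

# The fitted value is negative at a balance of 0 and exceeds 1 at 3500.
for x in (0, 1250, 2500, 3500):
    print(x, round(linear_prediction(x), 3))
```

With these made-up numbers the fitted line gives a negative value at a zero balance and a value above 1 at a balance of 3500, neither of which can be a probability.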

Why Logistic Regression?

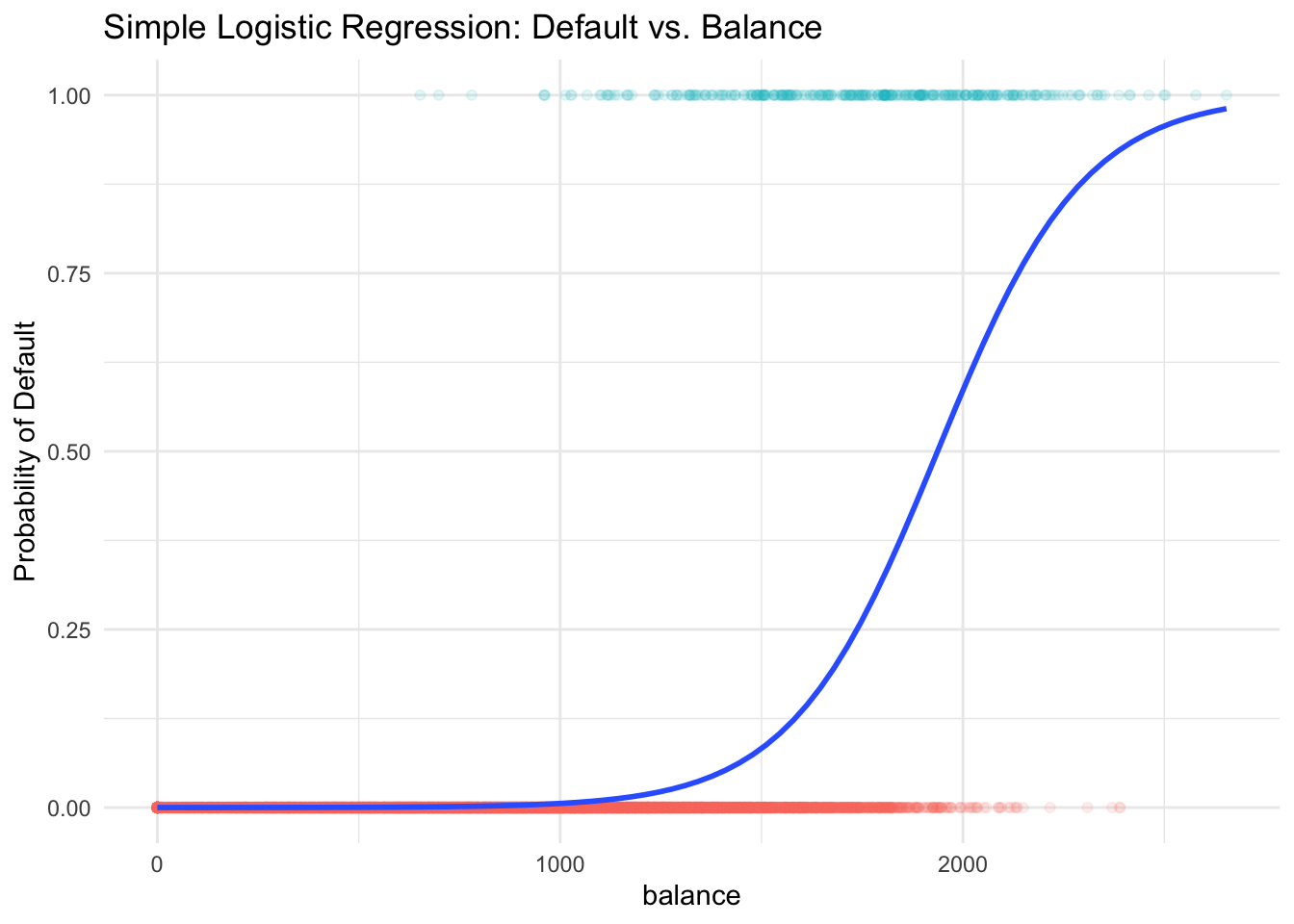

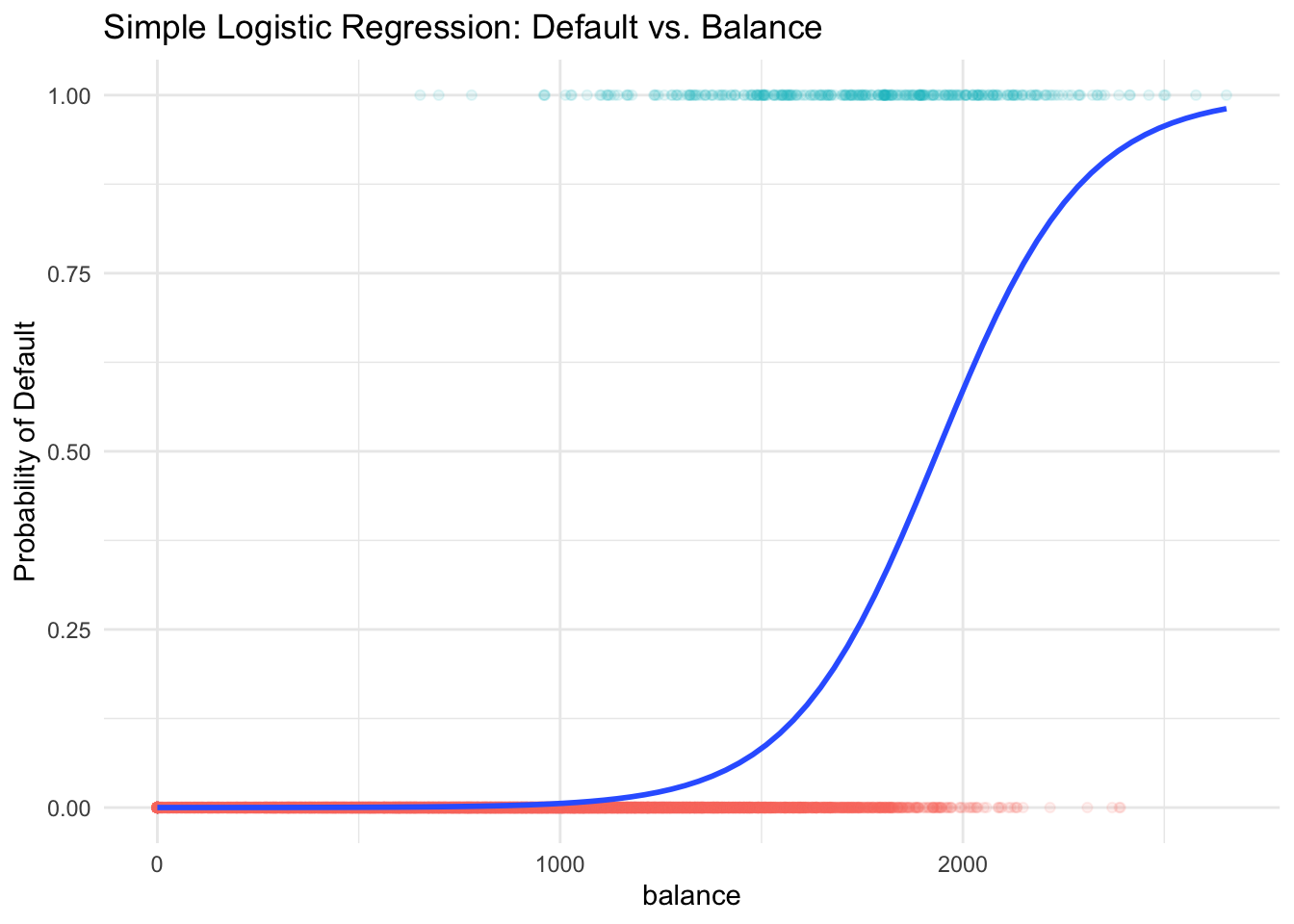

Some classification methods, logistic regression for example, first predict the probability of each category of default using an S-shaped curve.

You can see the estimated probability curve is always between 0 and 1 and higher balance leads to higher chance of default. If our cut-off or threshold probability is 0.5,

29.2 Introduction to Logistic Regression

Binary Responses

The story starts with the binary response. The idea is that we treat each default

Nonconstant Probability

Two outcomes: Default

The probability of success,

With a different value of

-

balance.

The idea of nonconstant probability in logistic regression is similar to the idea of different mean response value in linear regression. In linear regression, the mean response level is linearly affected by the predictor’s value,

Because of this Bernoulli assumption, the logistic regression is also called binomial regression.

Logistic Regression

Now it’s time to see what the logistic regression is. Logistic regression models a binary response

-

-

But remember, we are not predicting

Now that it’s not good to use

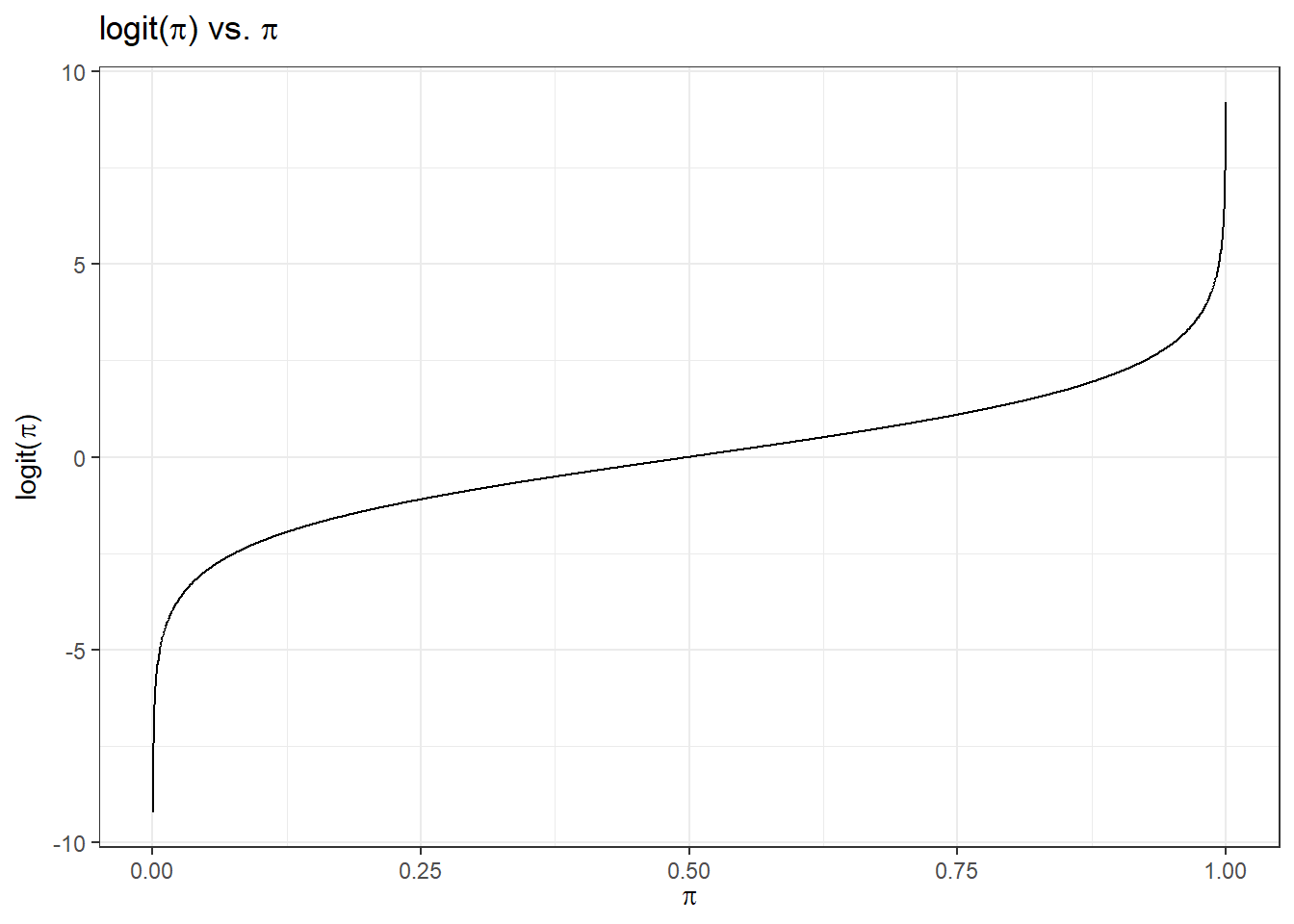

In logistic regression, we use the logit function:

$$\text{logit}(\pi) = \ln\left(\frac{\pi}{1-\pi}\right),$$

which is in fact the log odds. This transformation is monotone. The higher

Then we can assume

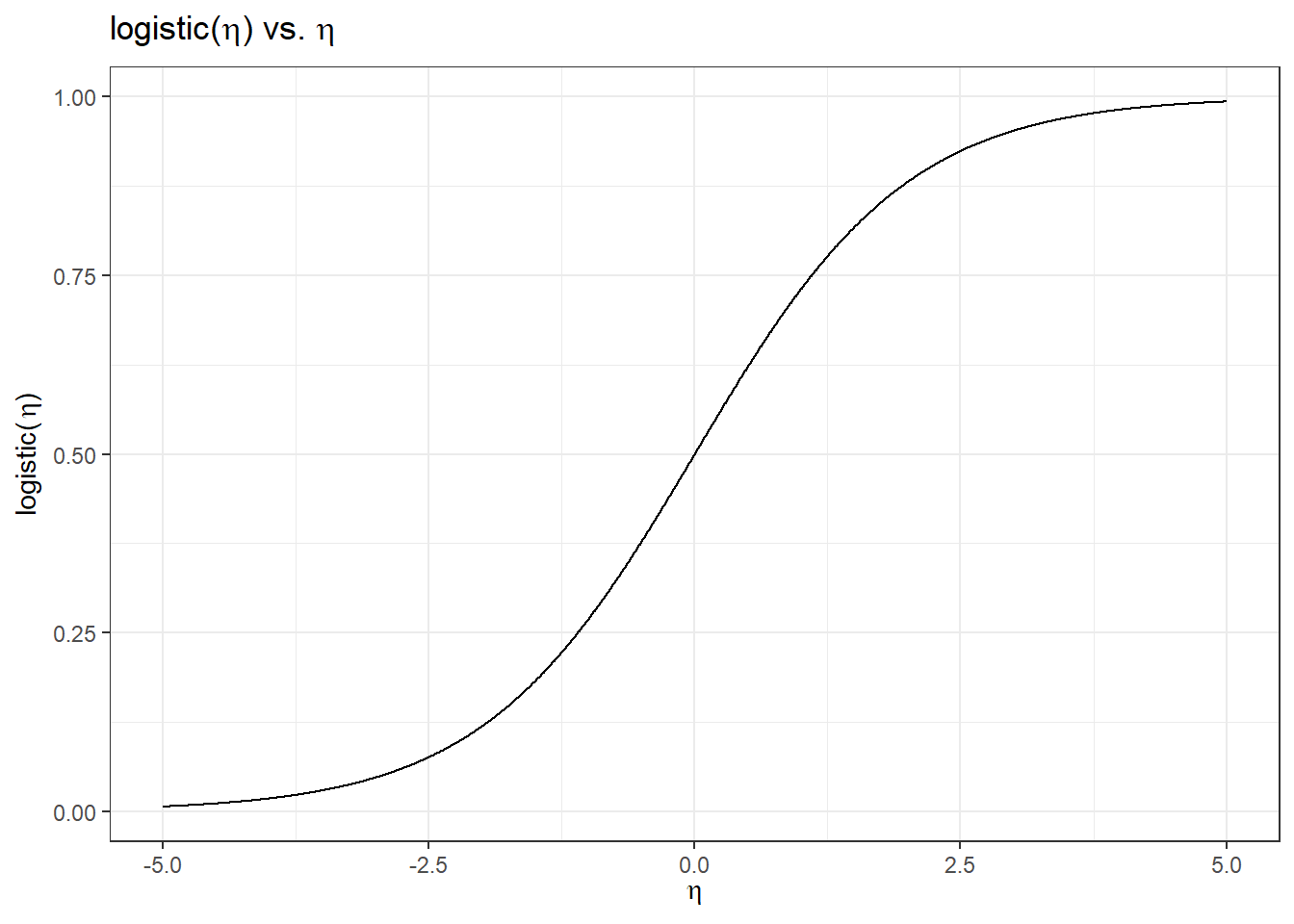

Well, the question is, if we obtain the

So the logit function

So once
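As a small numerical illustration of the logit transformation discussed in this section, the sketch below defines the logit and its inverse, the logistic function. The function names `logit()` and `logistic()` are chosen here for illustration; they are not taken from the chapter's code.

```python
import math

def logit(p):
    """Log odds of a probability p in (0, 1)."""
    return math.log(p / (1 - p))

def logistic(eta):
    """Inverse of the logit: maps any real number to a probability in (0, 1)."""
    return 1 / (1 + math.exp(-eta))

# The logit is monotone: a higher probability gives a higher log odds,
# and applying the logistic function recovers the original probability.
for p in (0.1, 0.5, 0.9):
    eta = logit(p)
    print(p, round(eta, 3), round(logistic(eta), 3))
```

A probability of 0.5 corresponds to a log odds of 0, and any real value of the linear predictor is mapped back to a valid probability.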

29.3 Simple Logistic Regression Model

To sum up, here shows a simple logistic regression model: For

Each

Once we get the estimates

Probability Curve

The relationship between

Because of the S-shaped curve, the amount that

Regardless of the value of

Interpretation of Coefficients

The ratio

Example: If 1 in 5 people will default, the odds are 1/4, since $\pi = 0.2$ gives $\pi/(1-\pi) = 0.2/0.8 = 1/4$.

- Increasing
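The "1 in 5" example can be checked with exact fractions. This is a minimal sketch; the helper names `odds_from_prob()` and `prob_from_odds()` are hypothetical and only serve the illustration.

```python
from fractions import Fraction

def odds_from_prob(p):
    """Odds = probability of the event divided by probability of no event."""
    return p / (1 - p)

def prob_from_odds(odds):
    """Convert odds back to a probability."""
    return odds / (1 + odds)

p_default = Fraction(1, 5)          # 1 in 5 people default
odds = odds_from_prob(p_default)
print(odds)                         # 1/4
print(prob_from_odds(odds))         # 1/5
```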

Example: Logistic Regression

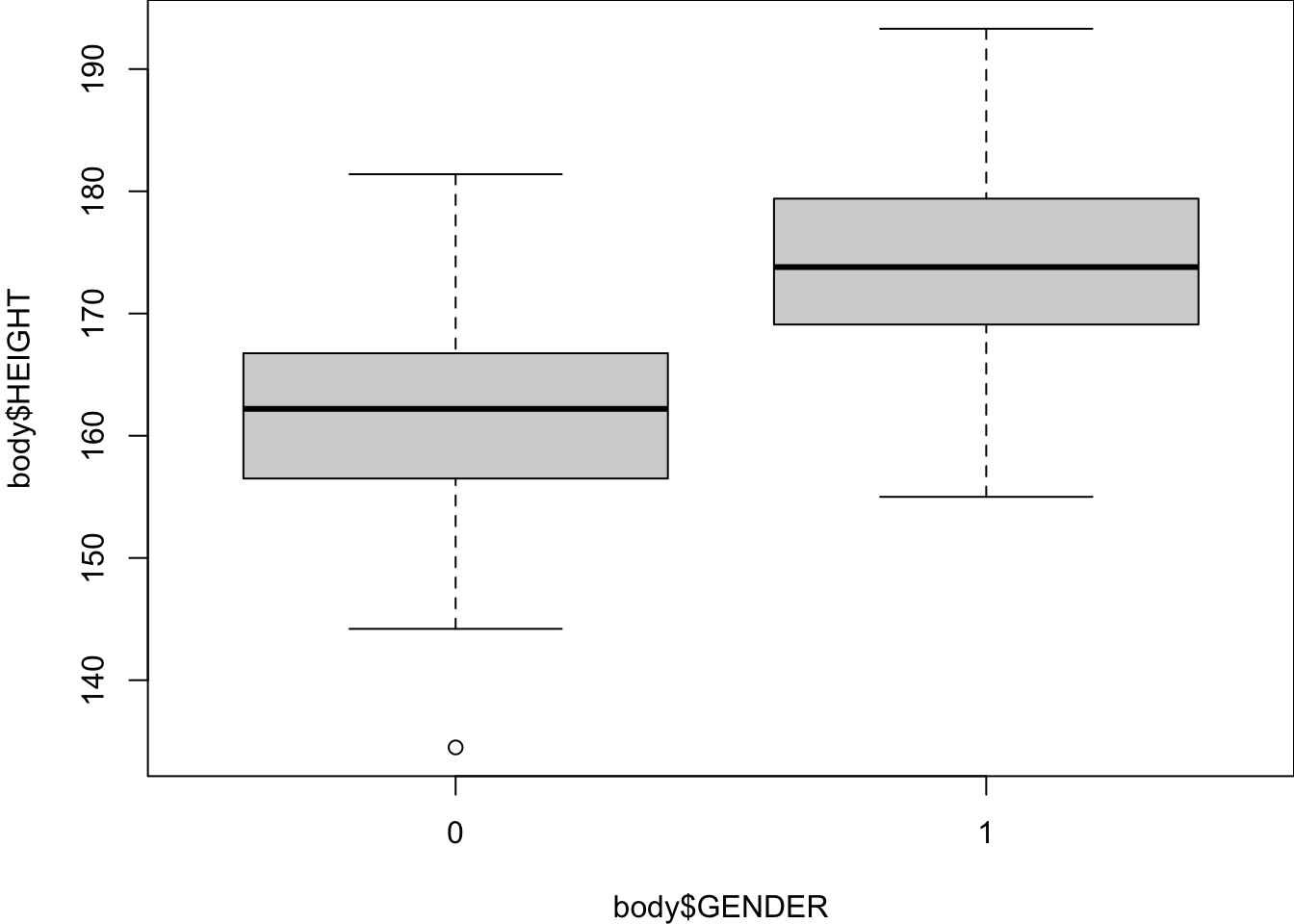

We use the body data to demonstrate the implementation of logistic regression. The response variable is GENDER, and the predictor is HEIGHT. We would like to use logistic regression to predict whether a person is male or female using the height information of that person.

In the data,

- GENDER = 1 if male
- GENDER = 0 if female

The unit of HEIGHT is centimeter (cm) (1 cm = 0.3937 in).

body <- read.table("./data/body.txt", header = TRUE)

head(body)

AGE GENDER PULSE SYSTOLIC DIASTOLIC HDL LDL WHITE RED PLATE WEIGHT HEIGHT

1 43 0 80 100 70 73 68 8.7 4.80 319 98.6 172.0

2 57 1 84 112 70 35 116 4.9 4.73 187 96.9 186.0

3 38 0 94 134 94 36 223 6.9 4.47 297 108.2 154.4

4 80 1 74 126 64 37 83 7.5 4.32 170 73.1 160.5

5 34 1 50 114 68 50 104 6.1 4.95 140 83.1 179.0

6 77 1 60 134 60 55 75 5.7 3.95 192 86.5 166.7

WAIST ARM_CIRC BMI

1 120.4 40.7 33.3

2 107.8 37.0 28.0

3 120.3 44.3 45.4

4 97.2 30.3 28.4

5 95.1 34.0 25.9

6 112.0 31.4 31.1

import pandas as pd

body = pd.read_table("./data/body.txt", sep=r'\s+')

body.head()

AGE GENDER PULSE SYSTOLIC ... HEIGHT WAIST ARM_CIRC BMI

0 43 0 80 100 ... 172.0 120.4 40.7 33.3

1 57 1 84 112 ... 186.0 107.8 37.0 28.0

2 38 0 94 134 ... 154.4 120.3 44.3 45.4

3 80 1 74 126 ... 160.5 97.2 30.3 28.4

4 34 1 50 114 ... 179.0 95.1 34.0 25.9

[5 rows x 15 columns]

Again, we are not using gender to predict someone’s height, which is usually done by linear regression. Instead, our response variable is a binary categorical variable, and we are doing classification with a numeric predictor.

Data Summary

A basic data summary by gender tells us that height plays a role in distinguishing males from females, so using height to classify gender should be helpful.

table(body$GENDER)

0 1

147 153

summary(body[body$GENDER == 1, ]$HEIGHT)

Min. 1st Qu. Median Mean 3rd Qu. Max.

155.0 169.1 173.8 174.1 179.4 193.3

summary(body[body$GENDER == 0, ]$HEIGHT)

Min. 1st Qu. Median Mean 3rd Qu. Max.

134.5 156.5 162.2 161.7 166.8 181.4

boxplot(body$HEIGHT ~ body$GENDER)

# Show the distribution of GENDER

body['GENDER'].value_counts()

GENDER

1 153

0 147

Name: count, dtype: int64

body[body['GENDER'] == 1]['HEIGHT'].describe()

count 153.000000

mean 174.124837

std 7.100975

min 155.000000

25% 169.100000

50% 173.800000

75% 179.400000

max 193.300000

Name: HEIGHT, dtype: float64

body[body['GENDER'] == 0]['HEIGHT'].describe()

count 147.000000

mean 161.687075

std 7.482960

min 134.500000

25% 156.500000

50% 162.200000

75% 166.750000

max 181.400000

Name: HEIGHT, dtype: float64

import matplotlib.pyplot as plt

import seaborn as sns

plt.figure()

sns.boxplot(x='GENDER', y='HEIGHT', data=body)

plt.show()

Model Fitting

We use the function glm() in R to fit a logistic regression model. “glm” means generalized linear model (GLM). Linear regression is a linear model, and it is a normal model because the response variable is normally distributed given

In the function, we use the same formula syntax as lm(), and one additional job we need to do is specify the family of the GLM. family = "binomial" should be used because the logistic regression is a binomial regression.

logit_fit <- glm(GENDER ~ HEIGHT, data = body, family = "binomial")

(summ_logit_fit <- summary(logit_fit))

Call:

glm(formula = GENDER ~ HEIGHT, family = "binomial", data = body)

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -40.54809 4.63084 -8.756 <2e-16 ***

HEIGHT 0.24173 0.02758 8.764 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 415.77 on 299 degrees of freedom

Residual deviance: 251.50 on 298 degrees of freedom

AIC: 255.5

Number of Fisher Scoring iterations: 5

summ_logit_fit$coefficients

Estimate Std. Error z value Pr(>|z|)

(Intercept) -40.5480864 4.63083742 -8.756102 2.021182e-18

HEIGHT 0.2417325 0.02758399 8.763507 1.892674e-18

We use the function statsmodels.formula.api.logit() in Python to fit a logistic regression model. In the function, we use exactly the same formula syntax as ols().

from statsmodels.formula.api import logit

logit_fit = logit(formula='GENDER ~ HEIGHT', data=body).fit()

Optimization terminated successfully.

Current function value: 0.419162

Iterations 7

logit_fit.summary()

| | | | |
|---|---|---|---|
| Dep. Variable: | GENDER | No. Observations: | 300 |
| Model: | Logit | Df Residuals: | 298 |
| Method: | MLE | Df Model: | 1 |
| Date: | Tue, 15 Oct 2024 | Pseudo R-squ.: | 0.3951 |
| Time: | 10:37:11 | Log-Likelihood: | -125.75 |
| converged: | True | LL-Null: | -207.88 |
| Covariance Type: | nonrobust | LLR p-value: | 1.320e-37 |

| | coef | std err | z | P>\|z\| | [0.025 | 0.975] |
|---|---|---|---|---|---|---|
| Intercept | -40.5481 | 4.631 | -8.756 | 0.000 | -49.625 | -31.471 |
| HEIGHT | 0.2417 | 0.028 | 8.763 | 0.000 | 0.188 | 0.296 |

logit_fit.params

Intercept -40.548086

HEIGHT 0.241733

dtype: float64

There is another function Logit() that uses the response vector and design matrix to perform logistic regression.

X = body[['HEIGHT']]

y = body['GENDER']

import statsmodels.api as sm

logit_model_fit = sm.Logit(y, sm.add_constant(X)).fit()

logit_model_fit.summary()

Based on the fitted result, we learn that

Since

A one centimeter increase in HEIGHT increases the log odds of being male by 0.24 units.

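The odds ratio is $e^{0.24} \approx 1.27$: the odds of being male increase by about 27% with each additional centimeter of HEIGHT.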
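The interpretation of the slope can be checked numerically. The sketch below plugs in the coefficient estimates reported in the fitted output and confirms that the ratio of the odds at HEIGHT + 1 to the odds at HEIGHT is the same at every height. The helper `odds_male()` is a name introduced here for illustration.

```python
import math

# Coefficient estimates copied from the fitted output (rounded as reported).
b0, b1 = -40.548086, 0.241733

def odds_male(height):
    """Estimated odds of being male: exp(b0 + b1 * height)."""
    return math.exp(b0 + b1 * height)

odds_ratio = math.exp(b1)
print(round(odds_ratio, 3))

# The ratio of odds for a one-centimeter increase does not depend on the height.
for h in (160, 170, 180):
    print(h, round(odds_male(h + 1) / odds_male(h), 3))
```

The constant ratio is what makes the odds ratio a convenient summary: unlike the change in probability, it does not depend on where along the curve we start.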

Prediction

Knowing how the predictor affects the chance of response belonging to some category is one goal. But in classification, we usually focus more on prediction. For example, we may want to know Pr(GENDER = 1) when HEIGHT is 170 cm.

Once we obtain the coefficient estimates and the predictor value, we just need to plug them into the logistic function to obtain the probability we want.

Through the formula, we learn that under our model, when a person is 170 cm tall, the probability that this person is male is about 63.3%.
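The plug-in calculation can be done by hand as a check. This is a minimal sketch that uses the coefficient estimates from the R output; the function name `prob_male()` is introduced here for illustration.

```python
import math

# Coefficient estimates from summ_logit_fit$coefficients.
b0, b1 = -40.5480864, 0.2417325

def prob_male(height):
    """Logistic function applied to the estimated linear predictor."""
    eta = b0 + b1 * height          # estimated log odds
    return 1 / (1 + math.exp(-eta))

print(round(prob_male(170), 3))     # about 0.633
```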

In R, predict(logit_fit, type = "response") gives us a vector of estimated probabilities at the observed HEIGHT values. With type = "link", predict() gives us the estimated linear predictor b0 + b1*x, which is the log odds. To predict at a new value, we supply newdata = data.frame(HEIGHT = 170). Remember the variable name should be exactly the same as the name in the original data set.

predict(logit_fit, newdata = data.frame(HEIGHT = 170), type = "response")

1

0.6333105

predict(logit_fit, newdata = data.frame(HEIGHT = 170), type = "link")

1

0.5464453

In Python, logit_fit.predict(which='mean') gives us a vector of estimated probabilities at the observed HEIGHT values. With which='linear', predict() gives us the estimated linear predictor b0 + b1*x. To predict at a new value, we create newinput = pd.DataFrame({'HEIGHT': [170]}) and call get_prediction(newinput). Remember the variable name should be exactly the same as the name in the original data set.

pi_hat = logit_fit.predict(which='mean')

eta_hat = logit_fit.predict(which='linear') ## which='linear' gives us b0 + b1*x

# Create new data for prediction

newinput = pd.DataFrame({'HEIGHT': [170]})

predict = logit_fit.get_prediction(newinput)

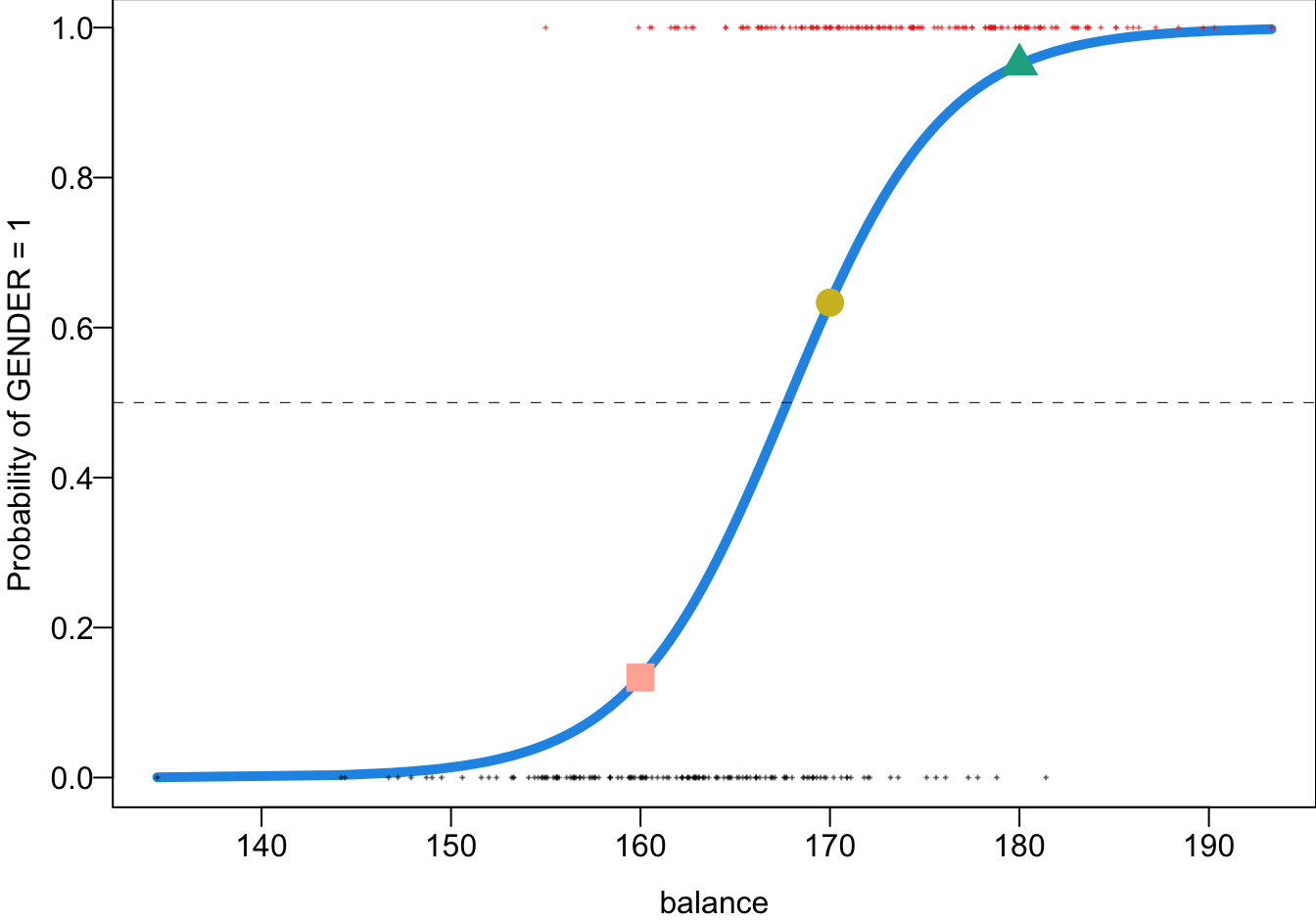

predict.predicted

array([0.63331048])

predict.linpred

array([0.54644529])

Probability Curve

predict(logit_fit, newdata = data.frame(HEIGHT = c(160, 170, 180)), type = "response")

1 2 3

0.1334399 0.6333105 0.9509103

# Make predictions for new HEIGHT values

newheight = pd.DataFrame({'HEIGHT': [160, 170, 180]})

pred = logit_fit.get_prediction(newheight)

pred.predicted

array([0.13343992, 0.63331048, 0.95091031])

The blue S-shaped curve is the estimated probability curve. It can be plotted using pi_hat.

- 160 cm, Pr(male) = 0.13

- 170 cm, Pr(male) = 0.63

- 180 cm, Pr(male) = 0.95
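To see the S shape in numbers, the sketch below evaluates the fitted curve at several heights and reports how much the probability changes for one extra centimeter. It reuses the reported coefficient estimates. The name `prob_male()` and the heights 150 and 190 are choices made here for illustration. The height at which the estimated probability equals 0.5 follows from setting b0 + b1*x = 0.

```python
import math

# Coefficient estimates from the fitted model output.
b0, b1 = -40.5480864, 0.2417325

def prob_male(height):
    """Estimated Pr(male) from the fitted logistic curve."""
    return 1 / (1 + math.exp(-(b0 + b1 * height)))

# Probability and its change for one extra centimeter at several heights.
for h in (150, 160, 170, 180, 190):
    change = prob_male(h + 1) - prob_male(h)
    print(h, round(prob_male(h), 3), round(change, 3))

# Height where the estimated probability is exactly 0.5 (log odds equal to 0).
crossing = -b0 / b1
print(round(crossing, 1))
```

The one-centimeter change is largest near the middle of the curve and shrinks toward both tails, which is the nonlinearity a straight line cannot capture.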

29.4 Evaluation Metrics

Having probabilities is just an intermediate step. Most of the time our final goal is to classify the response, that is, to make a binary decision. Given a predicted probability, we may classify the label correctly or misclassify it. To know whether our model does a good job of classification, we need some evaluation metrics.

First, we can use a tool called a confusion matrix to display all possible outcomes in a binary classification scenario. In this context, let’s designate 0 as Negative and 1 as Positive, regardless of what these values represent. If the actual truth is 0 and we classify the response as 0, this outcome is a True Negative (TN), meaning we made a correct decision. However, if we classify the response as 1 instead, we commit an error, resulting in a False Positive (FP). In this case, the true condition is Negative, but our model incorrectly predicts it as Positive.

Similarly, if the truth is 1 and we correctly predict it as 1, the classification is accurate and is called a True Positive (TP). However, if we incorrectly label a response as Negative when it is actually Positive, we make a False Negative (FN).

| | True 0 | True 1 |
|---|---|---|
| Labeled 0 | True Negative (TN) | False Negative (FN) |
| Labeled 1 | False Positive (FP) | True Positive (TP) |

A good classifier accurately identifies a high number of TNs and TPs while minimizing FNs and FPs. Commonly used performance measures include Sensitivity (True Positive Rate), Specificity (True Negative Rate), and Accuracy.

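Sensitivity (True Positive Rate, TPR): $\dfrac{\text{TP}}{\text{TP} + \text{FN}}$

Specificity (True Negative Rate, TNR): $\dfrac{\text{TN}}{\text{TN} + \text{FP}}$

Accuracy: $\dfrac{\text{TP} + \text{TN}}{\text{TP} + \text{TN} + \text{FP} + \text{FN}}$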

More on Wiki page

Example: Confusion Matrix

To produce a confusion matrix, we need two things: the true response labels, and the classification from the model. In the example, the true labels are just the GENDER column of the body data (body$GENDER in R, body['GENDER'] in Python). For the classification result, we need to make a binary decision from the estimated probabilities obtained from the model. Here we use 0.5 as the threshold: if the estimated probability is greater than 0.5, we label the person as 1 (male); otherwise we label the person as 0 (female). In R, the table() function generates a confusion matrix from the two label vectors. In Python, we use the confusion_matrix() function from sklearn.metrics.

prob = logit_fit.predict()

## true observations

gender_true = body['GENDER']

## predicted labels

gender_predict = (prob > 0.5).astype(int)

# Confusion matrix

from sklearn.metrics import confusion_matrix

confusion_matrix(gender_true, gender_predict)

array([[118, 29],

[ 29, 124]])

The true positive rate is 124/(124+29) = 0.81, and the true negative rate is 118/(118+29) = 0.803. The overall accuracy is (124 + 118) /(124 + 29 + 29 + 118) = 0.807.
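The three rates can be recomputed directly from the confusion matrix. The sketch below hard-codes the matrix printed above and relies on the scikit-learn convention that rows are true labels and columns are predicted labels.

```python
# Confusion matrix from the example: rows = true labels, columns = predicted labels.
cm = [[118, 29],
      [29, 124]]
tn, fp = cm[0]   # true 0: correctly labeled 0, wrongly labeled 1
fn, tp = cm[1]   # true 1: wrongly labeled 0, correctly labeled 1

sensitivity = tp / (tp + fn)                 # true positive rate
specificity = tn / (tn + fp)                 # true negative rate
accuracy = (tp + tn) / (tp + tn + fp + fn)   # share of correct labels

print(round(sensitivity, 3), round(specificity, 3), round(accuracy, 3))
```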

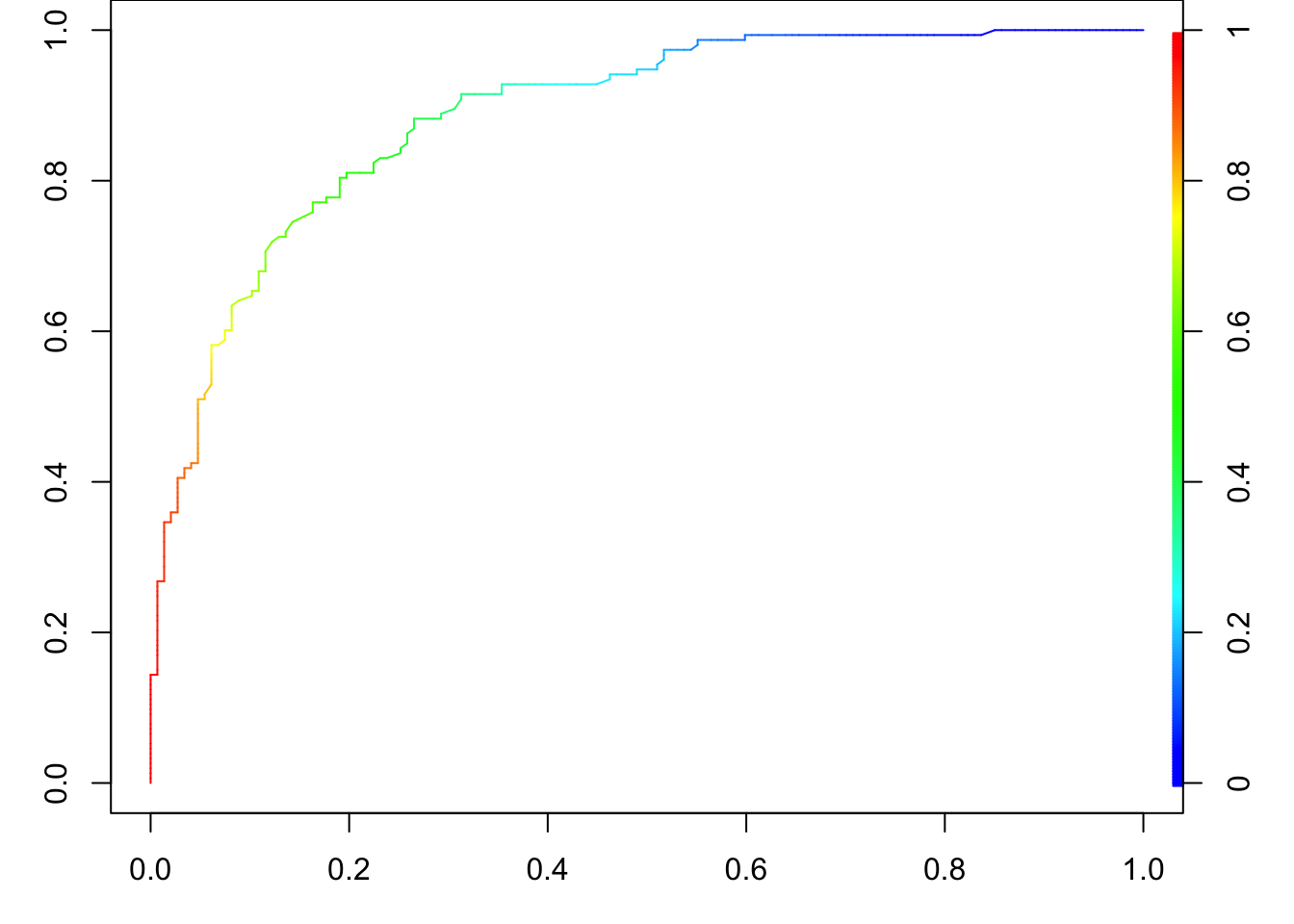

A commonly used visualization tool for assessing the classification performance is the receiver operating characteristic (ROC) curve.[1] This curve plots True Positive Rate (Sensitivity) vs. False Positive Rate (1 - Specificity). The confusion matrix provides the classification result for a single threshold, typically 0.5 in many cases. However, by adjusting the threshold, we can obtain different classification outcomes. For example, if

The ROC curve effectively illustrates how sensitivity and specificity change across every possible cutoff value between 0 and 1. When the cutoff is small, it becomes more likely that

This dynamic explains why the two endpoints of the ROC curve are positioned at the top-right and bottom-left corners of the graph. The top-right corner represents a scenario with high sensitivity and low specificity (low cutoff), while the bottom-left corner represents high specificity and low sensitivity (high cutoff).

The ideal classification result occurs when TPR is 1 and FPR is 0, corresponding to the top-left point of the ROC plot. A superior ROC curve would closely resemble a right-angle shape (red-dashed), with the “knee” of the curve near this perfect point. Building on this concept, a commonly used performance measure derived from the ROC curve is the Area Under the Curve (AUC). The AUC quantifies the overall ability of the model to distinguish between classes. The larger the AUC, the better the classification performance, with a value of 1 representing perfect classification and a value of 0.5 indicating no better than random chance (black-dashed).
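The construction of an ROC curve and its AUC can be sketched without any plotting. The example below uses a small set of hypothetical labels and predicted probabilities (not the body data), sweeps the cutoff from high to low, records the (FPR, TPR) pair at each cutoff, and approximates the area with the trapezoidal rule. The helper `rates()` is a name introduced here for illustration.

```python
# Hypothetical true labels and predicted probabilities, for illustration only.
y_true = [0, 0, 0, 0, 1, 0, 1, 1, 0, 1, 1, 1]
prob = [0.05, 0.10, 0.20, 0.30, 0.35, 0.45, 0.55, 0.60, 0.65, 0.80, 0.90, 0.95]

def rates(threshold):
    """Return (FPR, TPR) when we label 1 whenever prob > threshold."""
    tp = sum(1 for y, p in zip(y_true, prob) if y == 1 and p > threshold)
    fp = sum(1 for y, p in zip(y_true, prob) if y == 0 and p > threshold)
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    return fp / n_neg, tp / n_pos

# Sweep cutoffs from high to low so the curve runs from (0, 0) to (1, 1).
thresholds = [1.0] + sorted(set(prob), reverse=True) + [0.0]
roc = [rates(t) for t in thresholds]

# Area under the curve by the trapezoidal rule.
auc = sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(roc, roc[1:]))

print(roc[0], roc[-1])
print(round(auc, 3))
```

A high cutoff labels nobody as positive, giving the bottom-left corner, and a cutoff of 0 labels everybody as positive, giving the top-right corner. In practice one would typically rely on a library routine rather than this hand-rolled sweep.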

29.5 Exercises

- The following logistic regression equation is used for predicting whether a bear is male or female. The value of

- Identify the predictor and response variables. Which of these are dummy variables?

- Given that the variable Length is in the model, does a heavier weight increase or decrease the probability that the bear is a male? Please explain.

- The given regression equation has an overall p-value of 0.218. What does that suggest about the quality of predictions made using the regression equation?

- Use a length of 60 in. and a weight of 300 lb to find the probability that the bear is a male. Also, what is the probability that the bear is a female?

[1] R packages for ROC curves: ROCR, pROC, and yardstick (part of tidymodels).