8 Probability Rules and Bayes Formula

In this chapter, we formally define some terms used in probability, and learn some basic probability operations.

8.1 Probability Operations and Rules

Experiments, Events and Sample Space

Probability starts with the concept of sets. When we calculate the probability of “something”, that something is represented as a set. A set is a collection of outcomes generated by a process or experiment associated with the thing we are interested in. To denote a set, we usually use a pair of curly braces { } and place the elements of the set inside the braces, separated by commas. For example, {red, green, yellow} is a set with three color elements.

Here we define some terms that are commonly used with sets and probability.

- An experiment is any process in which the possible outcomes can be identified ahead of time.

The key phrase is “ahead of time”. For example, we know the possible results of flipping a coin (heads or tails) before we actually flip it. Therefore, flipping a coin is an experiment. Similarly, before we roll a six-sided die, we already know its possible outcomes: 1, 2, 3, 4, 5, and 6. So rolling a die is also an experiment.

- An event is a set of possible outcomes of the experiment.

An experiment generally has two or more possible outcomes, and any collection of those outcomes is called an event. For example, rolling a die has 6 possible outcomes: 1, 2, 3, 4, 5, 6. Any collection of those 6 numbers is an event. So “an odd number shows up”, which corresponds to the collection {1, 3, 5}, is an event. “An even number shows up”, represented by {2, 4, 6}, is also an event.

- The sample space $S$ is the collection of all possible outcomes of an experiment.

By this definition, the sample space is the largest set associated with an experiment, because it collects all possible outcomes. In other words, no matter which outcome shows up when the experiment is conducted, that outcome is always in the sample space.

The table below summarizes the experiments of flipping a coin and rolling a die.

| Experiment | Possible Outcomes | Some Events | Sample Space |
|---|---|---|---|
| Flip a coin 🪙 | Heads, Tails | {Heads}, {Heads, Tails}, … | {Heads, Tails} |
| Roll a die 🎲 | 1, 2, 3, 4, 5, 6 | {1, 3, 5}, {2, 4, 6}, {2}, {3, 4, 5, 6}, … | {1, 2, 3, 4, 5, 6} |

Set Concept: Example of Rolling a six-sided balanced die

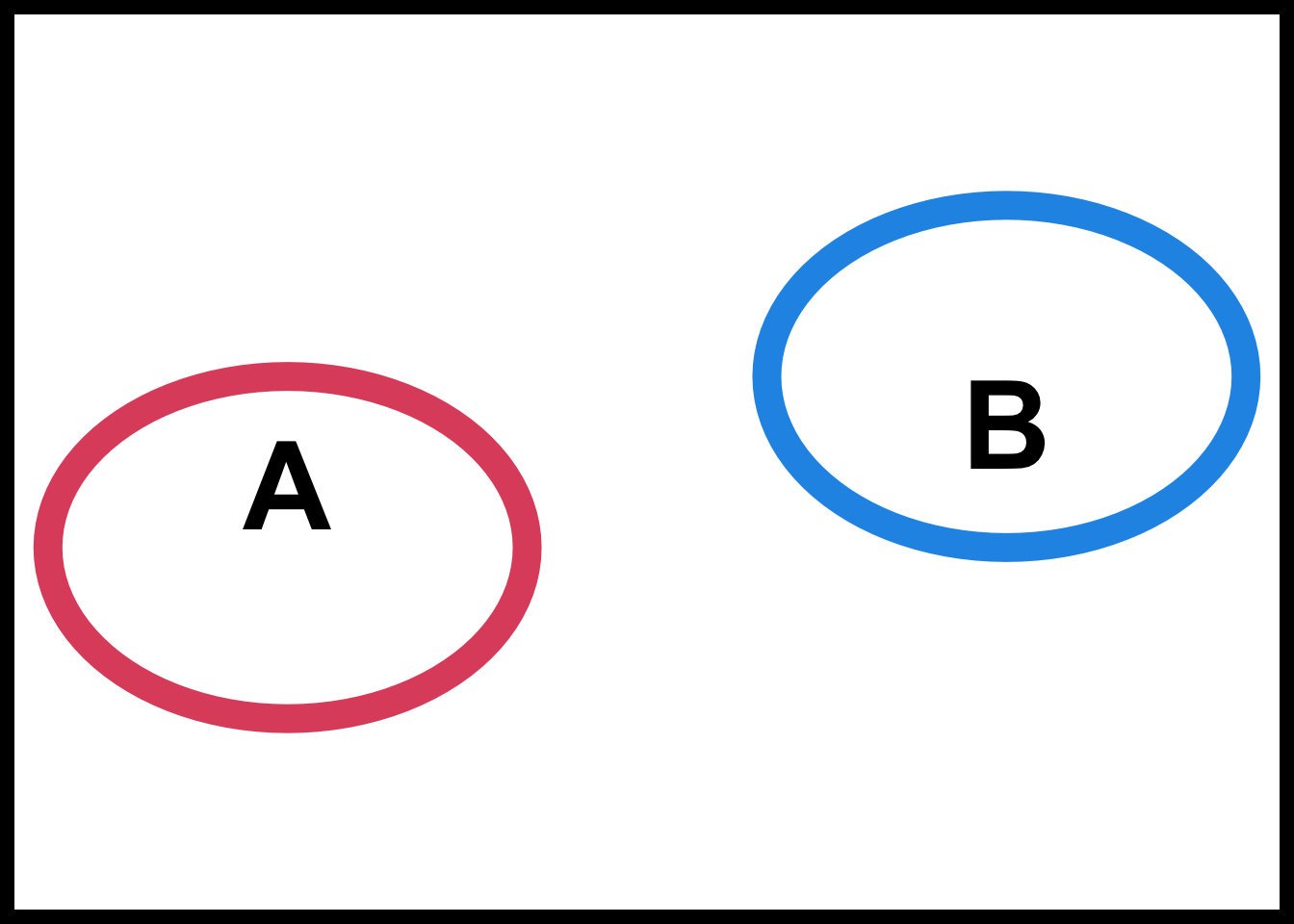

Draw a Venn diagram every time you get stuck! A Venn diagram is a very useful tool for identifying a set, especially when you are working through complicated set operations.

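- The complement of an event (set) $A$, denoted $A^c$, is the set of all outcomes in the sample space $S$ that are not in $A$.

- The union of two events $A$ and $B$, denoted $A \cup B$, is the set of all outcomes that are in $A$ or $B$ (or both).

- The intersection of $A$ and $B$, denoted $A \cap B$, is the set of all outcomes that are in both $A$ and $B$.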

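- The empty set $\emptyset$ contains no outcomes. Two events $A$ and $B$ are disjoint, or mutually exclusive, if $A \cap B = \emptyset$.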

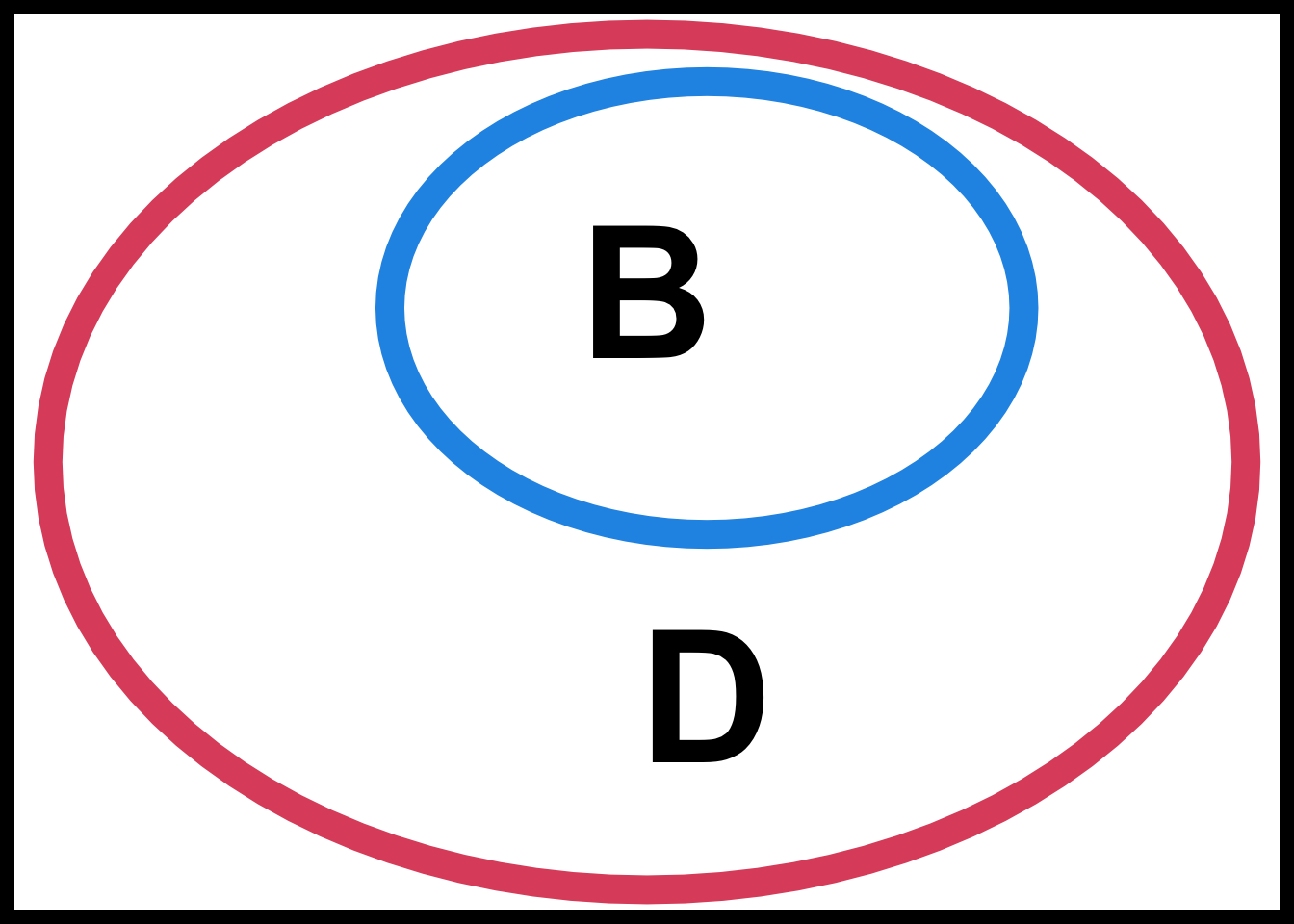

- The containment $A \subset B$ means that every outcome in $A$ is also in $B$.

Keep in mind that every event (set) being considered is a subset of the sample space, that is, $A \subset S$.
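The set operations above correspond directly to Python's built-in `set` type. The sketch below uses the die example. The event `low = {1, 2, 3}` is an illustrative choice that does not appear in the text.

```python
# Sample space for rolling a six-sided die
S = {1, 2, 3, 4, 5, 6}

odd = {1, 3, 5}    # "an odd number shows up"
even = {2, 4, 6}   # "an even number shows up"
low = {1, 2, 3}    # "at most 3 shows up" (illustrative event)

complement_odd = S - odd         # complement: outcomes in S that are not in odd
union = odd | low                # union: outcomes in odd or low (or both)
intersection = odd & low         # intersection: outcomes in both odd and low
disjoint = odd.isdisjoint(even)  # True when the intersection is the empty set
contained = {1, 3} <= odd        # containment: every outcome of {1, 3} is in odd

# Every event being considered is a subset of the sample space
assert all(event <= S for event in (odd, even, low))

print(sorted(complement_odd))  # [2, 4, 6]
print(sorted(union))           # [1, 2, 3, 5]
print(sorted(intersection))    # [1, 3]
print(disjoint, contained)     # True True
```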

Probability Rules

We have learned how to represent an event in terms of sets and set operations. Here we are going to learn several probability rules for events.

First, we denote the probability of an event $A$ by $P(A)$.

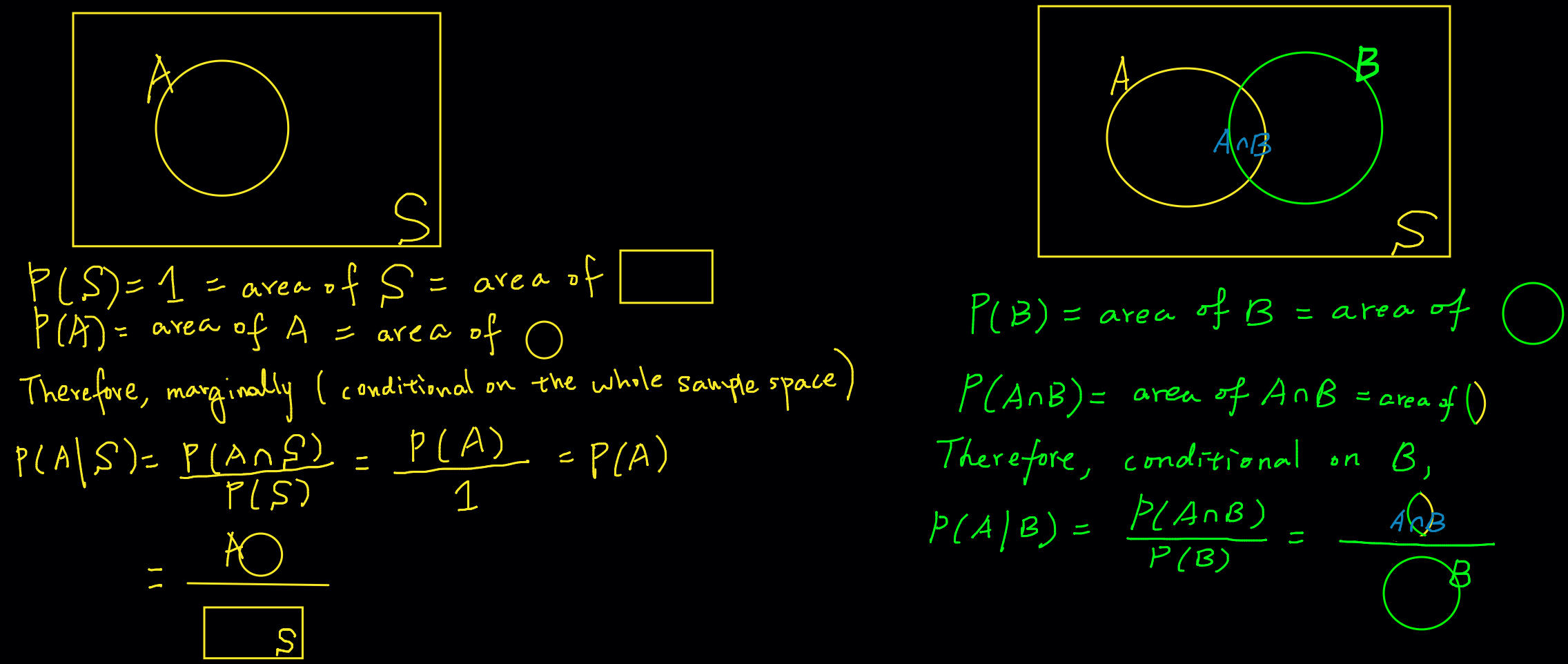

Treat the probability of an event as the area of the event in the Venn diagram.

In order to have a coherent and logically consistent set of probability rules, we need some axioms that are self-evident to anyone. The three axioms are as follows.

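- $P(S) = 1$, where $S$ is the sample space.

- For any event $A$, $P(A) \ge 0$.

- If $A$ and $B$ are disjoint, then $P(A \cup B) = P(A) + P(B)$.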

If we treat the sample space as an event, the probability of the entire sample space is always equal to one because this event must happen every time the experiment is conducted. In the Venn diagram, the sample space is the entire rectangle, and in probability, we presume the area of the rectangle is one.

Because any event is a collection of outcomes that may or may not occur, its probability is greater than or equal to zero; no probability can be negative. The Venn diagram shows this clearly, because the area of any region is greater than or equal to zero.

Finally, if two events are disjoint, the probability of their union is just the sum of their individual probabilities. For example, if the probability of getting a green M&M is 20% and that of getting a blue M&M is 15%, then the probability of getting a green or blue M&M is 20% + 15% = 35%. The Venn diagram shows this too: the total area of two disjoint events in the sample space is the sum of their individual areas.

With the three axioms, the entire system of probability rules can be constructed. Some basic properties are listed here.

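- $P(\emptyset) = 0$.

- For any event $A$, $0 \le P(A) \le 1$.

- Addition Rule: for any two events $A$ and $B$, $P(A \cup B) = P(A) + P(B) - P(A \cap B)$.

- Complement Rule: $P(A^c) = 1 - P(A)$.

- If $A \subset B$, then $P(A) \le P(B)$.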

The empty set does not contain any possible outcome of an experiment. Because some outcome must occur whenever an experiment is conducted, an event that contains no outcomes can never happen. Therefore, the probability of the empty set is zero. In terms of the Venn diagram, the empty set has area zero because it does not occupy any part (outcome) of the sample space.

Since every event being considered is a subset of the sample space, the area of any event is no larger than the area of the sample space, which is one. Combined with the axiom $P(A) \ge 0$, this gives $0 \le P(A) \le 1$ for any event $A$.

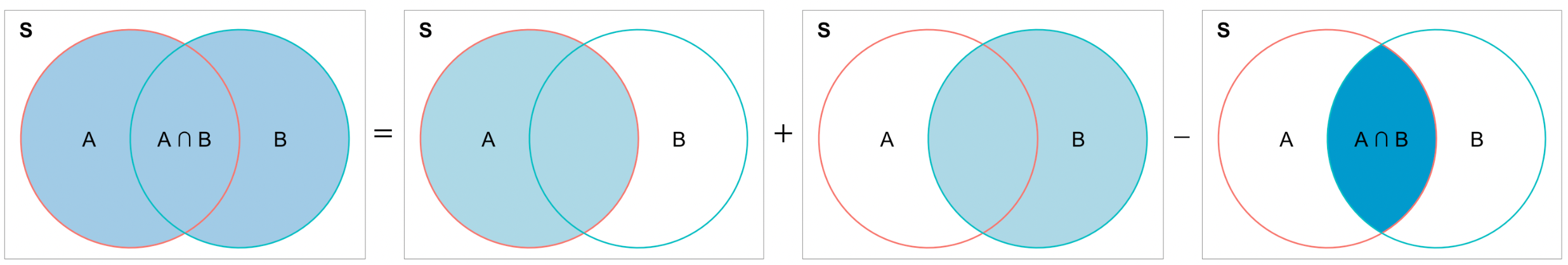

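The addition rule is easy to understand using the Venn diagram. Figure 8.1 shows how $P(A \cup B)$ is obtained. Adding $P(A)$ and $P(B)$ counts the area of the intersection $A \cap B$ twice, so we subtract $P(A \cap B)$ once: $P(A \cup B) = P(A) + P(B) - P(A \cap B)$.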

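In fact, the third axiom is a special case of the addition rule. Figure 8.2 illustrates the case. Since the two events are disjoint, $A \cap B = \emptyset$ and $P(A \cap B) = 0$, so the addition rule reduces to $P(A \cup B) = P(A) + P(B)$.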

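Since $A$ and $A^c$ are disjoint and $A \cup A^c = S$, the third axiom gives $P(A) + P(A^c) = P(A \cup A^c) = P(S) = 1$, and therefore $P(A^c) = 1 - P(A)$.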

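If $A \subset B$, the region of $A$ lies entirely inside the region of $B$ in the Venn diagram, so $P(A) \le P(B)$.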
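Here is a minimal sketch that checks the axioms and properties above. It assumes a balanced die, so every outcome is equally likely and $P(A) = |A| / |S|$. This is the “area” view, with the total area equal to one. `fractions.Fraction` keeps the arithmetic exact. The helper name `P` is hypothetical.

```python
from fractions import Fraction

S = frozenset(range(1, 7))  # sample space of a balanced six-sided die

def P(event):
    """P(A) = |A| / |S| under the assumption that all outcomes are equally likely."""
    return Fraction(len(set(event) & S), len(S))

A = {1, 3, 5}   # odd number
B = {1, 2, 3}   # at most 3
C = {1, 3}      # a subset of A

# The three axioms
assert P(S) == 1
assert all(P(E) >= 0 for E in (A, B, C))
assert P(A | {2, 4}) == P(A) + P({2, 4})      # A and {2, 4} are disjoint

# Properties derived from the axioms
assert P(set()) == 0                          # empty set
assert P(A | B) == P(A) + P(B) - P(A & B)     # addition rule
assert P(S - A) == 1 - P(A)                   # complement rule
assert C <= A and P(C) <= P(A)                # containment

print(P(A | B))  # 2/3
```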

Example: M&M Colors

- The makers of the M&Ms report that their plain M&Ms are composed of

- 15% Yellow, 10% Red, 20% Orange, 25% Blue, 15% Green and 15% Brown

-

Solution
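A small sketch applying the rules to the color distribution above. Each M&M has exactly one color, so the color events are disjoint. That means “red or orange” is a simple sum (third axiom), and “not blue” follows from the complement rule. The helper `prob_any` and the two queries are illustrative only.

```python
# Color distribution of plain M&Ms reported in the example
colors = {"Yellow": 0.15, "Red": 0.10, "Orange": 0.20,
          "Blue": 0.25, "Green": 0.15, "Brown": 0.15}

# The colors cover the whole sample space, so the probabilities sum to one
assert abs(sum(colors.values()) - 1.0) < 1e-12

def prob_any(*names):
    """P(color is one of names); colors are disjoint, so probabilities add."""
    return sum(colors[name] for name in set(names))

p_red_or_orange = prob_any("Red", "Orange")   # 0.10 + 0.20
p_not_blue = 1 - colors["Blue"]               # complement rule
print(round(p_red_or_orange, 2), round(p_not_blue, 2))  # 0.3 0.75
```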

8.2 Conditional Probability and Independence

Conditional Probability

Quite often we are interested in the probability of something happening given that some other event has occurred, or given that some information about the experiment or its outcomes is known. In this case, we can calculate the conditional probability, which takes the occurred event or known information into account. Conditional probability is more appropriate for quantifying uncertainty about what we are interested in. Knowing that some event has occurred is valuable information that helps us properly adjust the chance of something happening.

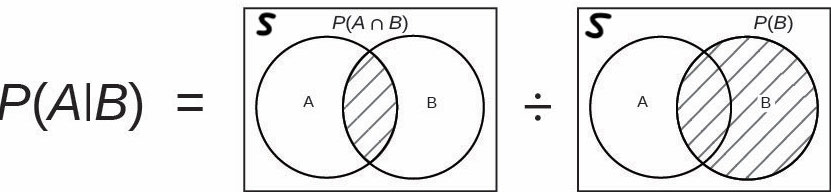

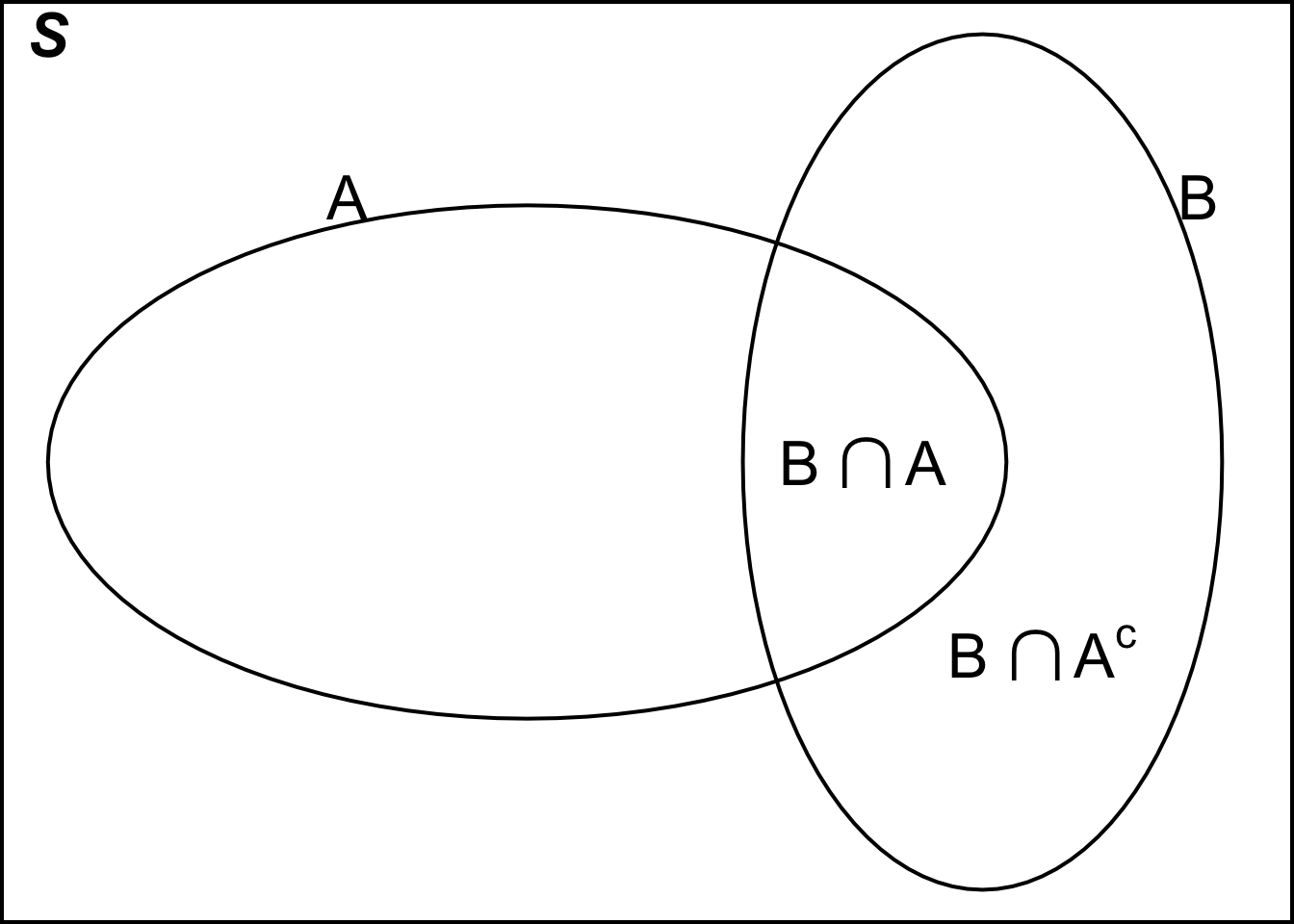

By definition, the conditional probability of $A$ given $B$ is

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}.$$

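The vertical bar “$\mid$” is read as “given”, so $P(A \mid B)$ is the probability that $A$ occurs given that $B$ has occurred.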

The formula is well defined only when $P(B) > 0$, since we cannot divide by zero.

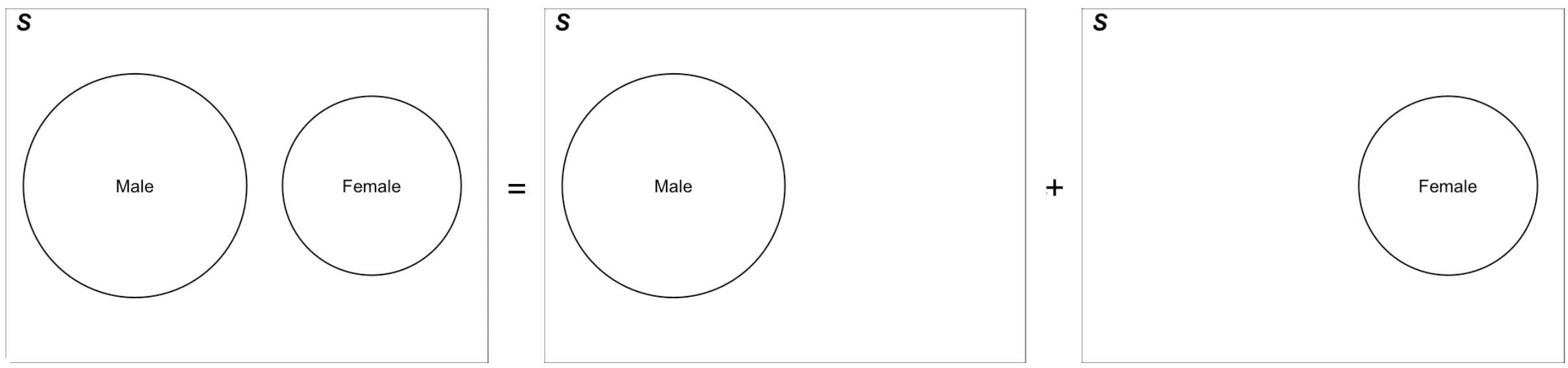

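So what is the difference between $P(A)$ and $P(A \mid B)$? Without any extra information, $P(A)$ is the area of $A$ relative to the entire sample space $S$, whose area is one.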

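Now if we know that $B$ has occurred, any outcome outside $B$ is no longer possible. $B$ becomes the new sample space, or search pool, and $P(A \mid B)$ is the area of $A \cap B$ relative to the area of $B$.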

Here is an example of a new sample space, or search pool. Suppose we would like to calculate the probability that a woman in a certain area is older than 20. When we don’t have any background information about the woman, we base the calculation on the entire female population of interest. But if we know that the woman has two children, we narrow our focus to the pool of women who have two children. We then compute the proportion of that pool who are over 20 years of age.
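The “new search pool” view can be checked on a balanced die. This sketch assumes equally likely outcomes, and the events $A$ (odd) and $B$ (at most 3) are illustrative. It computes $P(A \mid B)$ in two ways: once from the definition, and once by counting outcomes of $A$ inside the reduced pool $B$. The helper names are hypothetical.

```python
from fractions import Fraction

S = frozenset(range(1, 7))  # balanced six-sided die

def P(event):
    """Equally likely outcomes: P(E) = |E| / |S|."""
    return Fraction(len(set(event) & S), len(S))

def P_given(a, b):
    """P(A | B) = P(A and B) / P(B), defined only when P(B) > 0."""
    if P(b) == 0:
        raise ValueError("P(B) must be positive")
    return P(set(a) & set(b)) / P(b)

A = {1, 3, 5}   # odd number
B = {1, 2, 3}   # at most 3

by_formula = P_given(A, B)                    # definition of conditional probability
by_new_pool = Fraction(len(A & B), len(B))    # proportion of the pool B that is in A

print(by_formula, by_new_pool)  # 2/3 2/3
```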

The conditional probability formula leads to the multiplication rule:

$$P(A \cap B) = P(A \mid B)P(B) = P(B \mid A)P(A).$$

Example: Peanut Butter and Jelly

- Suppose 80% of people like peanut butter, 89% like jelly and 78% like both. Given that a randomly sampled person likes peanut butter, what is the probability that she also likes jelly?

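- We want $P(J \mid \text{PB})$, where $\text{PB}$ is the event that the person likes peanut butter and $J$ is the event that the person likes jelly.

- From the problem we have $P(\text{PB}) = 0.80$, $P(J) = 0.89$, and $P(\text{PB} \cap J) = 0.78$. Therefore,
$$P(J \mid \text{PB}) = \frac{P(\text{PB} \cap J)}{P(\text{PB})} = \frac{0.78}{0.80} = 0.975.$$

- If we don’t know whether the person likes peanut butter, the probability that she likes jelly is 89%.

- If we do know she likes peanut butter, the probability that she likes jelly goes up to 97.5%.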
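The calculation above takes only a few lines. This sketch uses the three probabilities given in the example. It computes $P(J \mid \text{PB})$ from the definition and then uses the multiplication rule to recover the joint probability.

```python
p_pb = 0.80     # P(likes peanut butter)
p_jelly = 0.89  # P(likes jelly)
p_both = 0.78   # P(likes both)

# Conditional probability: P(J | PB) = P(PB and J) / P(PB)
p_jelly_given_pb = p_both / p_pb

# The multiplication rule recovers the joint probability
assert abs(p_jelly_given_pb * p_pb - p_both) < 1e-12

print(f"P(J) = {p_jelly:.3f}, P(J | PB) = {p_jelly_given_pb:.3f}")
# P(J) = 0.890, P(J | PB) = 0.975
```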

Independence

In the previous example, we learned that whether a person likes peanut butter affects the probability that she likes jelly. This piece of information is relevant. The two events “like peanut butter” and “like jelly” are dependent on each other, because one event affects the chance of the other happening. Uncovering association or dependence is important for statistical inference because it helps statisticians better pin down the probability of interest.

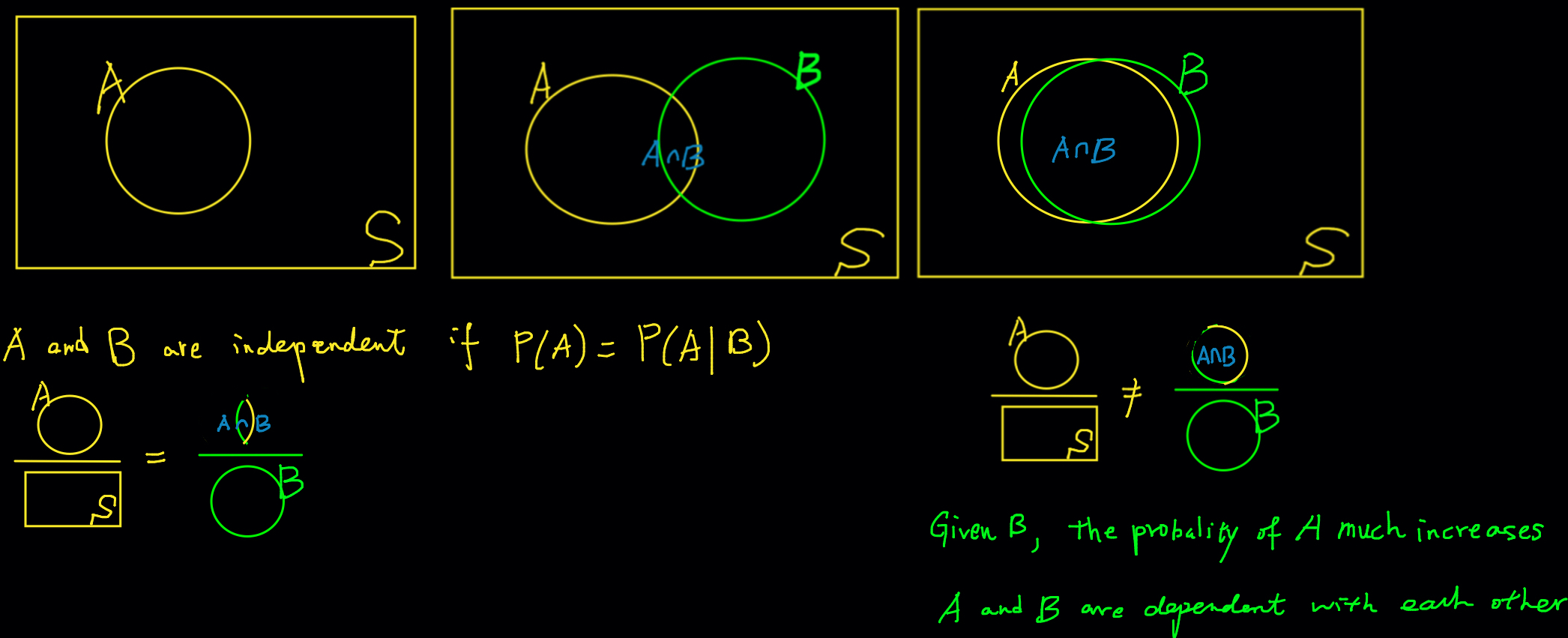

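Formally speaking, event $A$ is independent of event $B$ if $P(A \mid B) = P(A)$.

Intuitively, this means that knowing $B$ has occurred does not change the probability that $A$ occurs; $B$ carries no information about $A$.

Here is a question. Can we compute $P(A \cap B)$ from $P(A)$ and $P(B)$ alone? In general, no. But if $A$ and $B$ are independent, the multiplication rule gives $P(A \cap B) = P(A \mid B)P(B) = P(A)P(B)$. So independence can equivalently be stated as $P(A \cap B) = P(A)P(B)$.

Figure 8.5 explains independence using the Venn diagram. Independence means that the ratio of the area of $A \cap B$ to the area of $B$ equals the ratio of the area of $A$ to the area of the entire sample space, which is one: $\frac{P(A \cap B)}{P(B)} = \frac{P(A)}{1}$.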
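A minimal check of the product condition $P(A \cap B) = P(A)P(B)$. The sketch uses the peanut butter and jelly numbers from the earlier example, plus an illustrative die pair: $A = \{2, 4, 6\}$ and $B = \{1, 2\}$ on a balanced die. The function name `independent` and its tolerance are assumptions made to handle floating-point inputs.

```python
from fractions import Fraction
import math

def independent(p_a, p_b, p_ab, tol=1e-9):
    """Check whether P(A and B) equals P(A) * P(B), up to a small float tolerance."""
    return math.isclose(float(p_ab), float(p_a) * float(p_b), abs_tol=tol)

# Peanut butter (PB) and jelly (J) from the earlier example
p_pb, p_j, p_pb_and_j = 0.80, 0.89, 0.78
pb_j_independent = independent(p_pb, p_j, p_pb_and_j)
print(f"P(PB)P(J) = {p_pb * p_j:.3f}, P(PB and J) = {p_pb_and_j:.3f}")
# P(PB)P(J) = 0.712, P(PB and J) = 0.780

# Balanced die (illustrative): A = {2, 4, 6}, B = {1, 2}, so A and B = {2}
die_independent = independent(Fraction(1, 2), Fraction(1, 3), Fraction(1, 6))
print(pb_j_independent, die_independent)  # False True
```

For the die pair, $P(A \mid B) = (1/6)/(1/3) = 1/2 = P(A)$. Knowing $B$ leaves the chance of $A$ unchanged, which agrees with the definition.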

Independence Example

Solution

8.3 Bayes’ Formula

Bayes’ formula is one of the most important theorems in probability and statistics. It is the basis of Bayesian inference and Bayesian machine learning (discussed in Chapter 22), which have become more and more popular thanks to fast computation. In this section, we learn why we need Bayes’ formula and how we can use it to obtain the probability we are interested in.

Why Bayes’ Formula?

Quite often, we know $P(B \mid A)$, but what we actually want is the reversed conditional probability $P(A \mid B)$.

Let me ask you a question. Suppose that during a doctor’s visit, you tested positive for COVID. If you only get to ask the doctor one question, which would it be?

- What’s the chance that I actually have COVID?

- If in fact I don’t have COVID, what’s the chance that I would’ve gotten this positive test result?

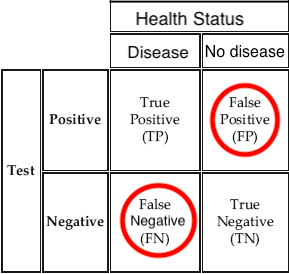

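If I were you, I would choose the first question, because I care more about whether I actually have COVID than about the efficacy of the test! In fact, diagnostic tests provide probabilities such as $P(\text{positive} \mid \text{COVID})$ and $P(\text{positive} \mid \text{no COVID})$. What we actually want is $P(\text{COVID} \mid \text{positive})$.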

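So, how can we use the efficacy of a diagnostic test to get the chance that one actually has COVID? Bayes’ formula is the answer. It provides a way to find $P(\text{COVID} \mid \text{positive})$ from $P(\text{positive} \mid \text{COVID})$, $P(\text{positive} \mid \text{no COVID})$, and $P(\text{COVID})$.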

Formula

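Bayes’ formula comes from conditional probability. If $P(B) > 0$, then

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)} = \frac{P(B \mid A)P(A)}{P(B \mid A)P(A) + P(B \mid A^c)P(A^c)}.$$

The first equality is just the definition of conditional probability. For the second equality, we partition $B$ into the two disjoint pieces $B \cap A$ and $B \cap A^c$, so that

$$P(B) = P(B \cap A) + P(B \cap A^c) = P(B \mid A)P(A) + P(B \mid A^c)P(A^c).$$

We then rewrite the numerator as $P(A \cap B) = P(B \mid A)P(A)$ using the multiplication rule.

Notice that we use the information about $P(B \mid A)$, $P(B \mid A^c)$, and $P(A)$ to obtain the reversed conditional probability $P(A \mid B)$.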
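As a consistency check, here is a sketch of the two-event Bayes’ formula applied to the peanut butter (PB) and jelly (J) example. Take $A = \text{PB}$ and $B = J$. The inputs $P(J \mid \text{PB})$ and $P(J \mid \text{PB}^c)$ are derived from the three given probabilities. For example, $P(J \cap \text{PB}^c) = 0.89 - 0.78 = 0.11$, so $P(J \mid \text{PB}^c) = 0.11 / 0.20 = 0.55$. Bayes’ formula should then agree with the definition $P(\text{PB} \cap J)/P(J)$. The function name `bayes` is hypothetical.

```python
def bayes(p_a, p_b_given_a, p_b_given_not_a):
    """P(A | B) from Bayes' formula with the partition {A, A^c}.

    The denominator is P(B) from the law of total probability; it must be > 0.
    """
    numerator = p_b_given_a * p_a
    denominator = numerator + p_b_given_not_a * (1 - p_a)
    return numerator / denominator

p_pb = 0.80
p_j_given_pb = 0.78 / 0.80                      # P(J | PB) = 0.975
p_j_given_not_pb = (0.89 - 0.78) / (1 - 0.80)   # P(J and PB^c) / P(PB^c) = 0.55

p_pb_given_j = bayes(p_pb, p_j_given_pb, p_j_given_not_pb)
direct = 0.78 / 0.89                            # P(PB and J) / P(J)

print(round(p_pb_given_j, 4), round(direct, 4))  # 0.8764 0.8764
```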

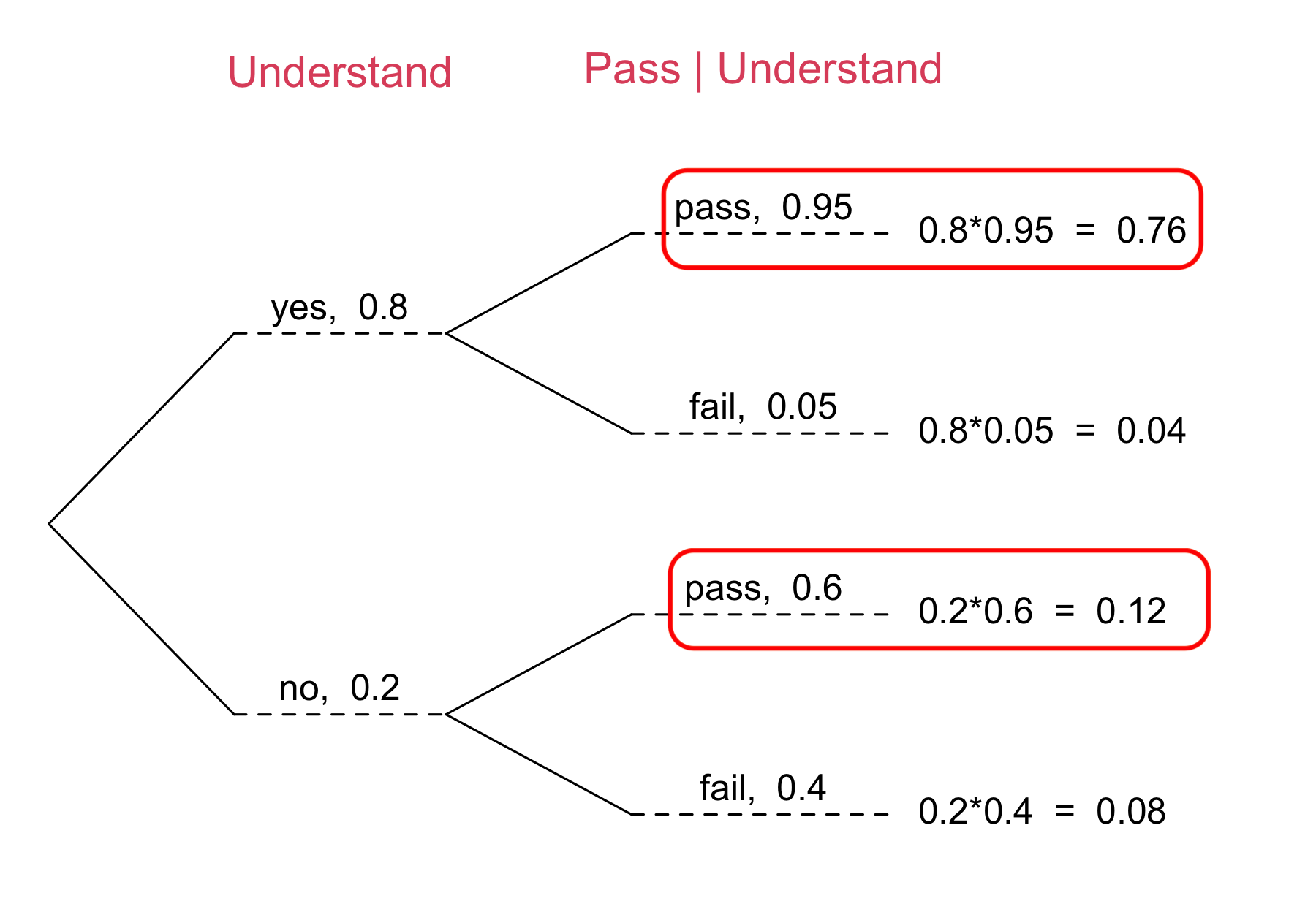

Example: Passing Rate

After taking MATH 4720,

Of those who understood the Bayes’ formula,

Of those who did not understand the Bayes’ formula, only

-

Step 1: Formulate what we would like to compute

-

Step 2: Define relevant events in the formula:

- Let $A$ be the event that a student understood Bayes’ formula.

- Let $B$ be the event that a student passed.

-

Step 3: Find probabilities in the Bayes’ formula using provided information.

-

Step 4: Apply Bayes’ formula.

Tree Diagram Illustration

-

- Of those who understood the Bayes’ formula,

- Of those who did not understand the formula,

Law of total probability*

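If, in general, $A_1, A_2, \dots, A_k$ form a partition of the sample space $S$, then for any event $B$,

$$P(B) = \sum_{i=1}^{k} P(B \cap A_i) = \sum_{i=1}^{k} P(B \mid A_i)P(A_i).$$

This is the law of total probability. We’ve seen this with $k = 2$, $A_1 = A$, and $A_2 = A^c$ in the denominator of Bayes’ formula.

How about $P(A_i \mid B)$ for each $i$?

If $P(B) > 0$, then for $i = 1, 2, \dots, k$,

$$P(A_i \mid B) = \frac{P(B \mid A_i)P(A_i)}{\sum_{j=1}^{k} P(B \mid A_j)P(A_j)}.$$

This is the general Bayes’ formula for an arbitrary positive integer $k$.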
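A minimal sketch of the law of total probability and the general Bayes’ formula. It uses an illustrative partition of a balanced die: $A_1 = \{1, 2\}$, $A_2 = \{3, 4\}$, $A_3 = \{5, 6\}$. The event is $B = \{4, 5, 6\}$, “at least 4 shows up”. Because the answers can be checked by counting (for example, $P(A_3 \mid B) = (2/6)/(3/6) = 2/3$), this makes a good sanity test. The function names are hypothetical.

```python
from fractions import Fraction

def total_probability(priors, likelihoods):
    """Law of total probability: P(B) = sum over i of P(B | A_i) P(A_i)."""
    return sum(lik * prior for lik, prior in zip(likelihoods, priors))

def general_bayes(priors, likelihoods):
    """Return [P(A_1 | B), ..., P(A_k | B)] for a partition A_1, ..., A_k of S.

    priors[i] is P(A_i) and likelihoods[i] is P(B | A_i).
    """
    if len(priors) != len(likelihoods):
        raise ValueError("need one likelihood P(B | A_i) per set A_i")
    p_b = total_probability(priors, likelihoods)
    if p_b == 0:
        raise ValueError("Bayes' formula requires P(B) > 0")
    return [lik * prior / p_b for lik, prior in zip(likelihoods, priors)]

# Balanced die (illustrative): A_1 = {1, 2}, A_2 = {3, 4}, A_3 = {5, 6}; B = {4, 5, 6}
priors = [Fraction(1, 3)] * 3
likelihoods = [Fraction(0), Fraction(1, 2), Fraction(1)]  # P(B | A_i)

p_b = total_probability(priors, likelihoods)
posterior = general_bayes(priors, likelihoods)
print(p_b, [str(p) for p in posterior])  # 1/2 ['0', '1/3', '2/3']
```

The posterior probabilities sum to one, as they must for a partition.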

8.4 Exercises

- A Pew Research survey asked 2,422 randomly sampled registered voters their political affiliation (Republican, Democrat, or Independent) and whether or not they identify as swing voters. 38% of respondents identified as Independent, 25% identified as swing voters, and 13% identified as both.

- Are being Independent and being a swing voter disjoint, i.e. mutually exclusive?

- What percent of voters are Independent but not swing voters?

- What percent of voters are Independent or swing voters?

- What percent of voters are neither Independent nor swing voters?

- Is the event that someone is a swing voter independent of the event that someone is a political Independent?

| Party and ideology | Earth is warming | Not warming | Don’t Know/Refuse | Total |
|---|---|---|---|---|
| Conservative Republican | 0.11 | 0.20 | 0.02 | 0.33 |
| Mod/Lib Republican | 0.06 | 0.06 | 0.01 | 0.13 |
| Mod/Cons Democrat | 0.25 | 0.07 | 0.02 | 0.34 |
| Liberal Democrat | 0.18 | 0.01 | 0.01 | 0.20 |
| Total | 0.60 | 0.34 | 0.06 | 1.00 |

A Pew Research poll asked 1,423 Americans, “From what you’ve read and heard, is there solid evidence that the average temperature on earth has been getting warmer over the past few decades, or not?” The table above shows the distribution of responses by party and ideology, with the counts replaced by relative frequencies.

- Are believing that the earth is warming and being a liberal Democrat mutually exclusive?

- What is the probability that a randomly chosen respondent believes the earth is warming or is a Mod/Cons Democrat?

- What is the probability that a randomly chosen respondent believes the earth is warming given that he is a Mod/Cons Democrat?

- What is the probability that a randomly chosen respondent believes the earth is warming given that he is a Mod/Lib Republican?

- Does it appear that whether or not a respondent believes the earth is warming is independent of their party and ideology? Explain your reasoning.

After a MATH 4740/MSSC 5740 course, 73% of students could successfully construct scatter plots using R. Of those who could construct scatter plots, 84% passed, while only 62% of those who could not construct scatter plots passed. Calculate the probability that a student is able to construct a scatter plot if it is known that she passed.

Of all homes having wood-burning furnaces, 30% own a type 1 furnace, 25% a type 2 furnace, 15% a type 3, and 30% other types. Over 3 years, 5% of type 1 furnaces, 3% of type 2, 2% of type 3, and 4% of other types have resulted in fires. If a fire occurs in a particular home, what is the probability that a type 1 furnace is in the home?

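We say sets $A_1, A_2, \dots, A_k$ form a partition of the sample space $S$ if they are mutually disjoint, that is, $A_i \cap A_j = \emptyset$ for all $i \ne j$, and $A_1 \cup A_2 \cup \cdots \cup A_k = S$.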