11 Continuous Probability Distributions

In this chapter, we discuss continuous probability distributions. We first introduce the idea and properties of continuous distributions, then discuss the normal distribution, arguably the most important and commonly used distribution in probability and statistics.

11.1 Introduction

Unlike a discrete random variable taking finitely or countably many values, a continuous random variable takes on any value from an interval of the real number line, such as $[0, 1]$ or the whole real line $(-\infty, \infty)$.

Instead of a probability function, a continuous random variable $X$ has a probability density function (pdf), denoted $f(x)$.

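The probability that $X$ falls in an interval $[a, b]$ is the integral of the pdf over that interval:
$$P(a \le X \le b) = \int_a^b f(x)\,dx.$$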

The cumulative distribution function (cdf) of $X$ is
$$F(x) = P(X \le x) = \int_{-\infty}^{x} f(t)\,dt.$$

😎 Luckily, we don’t calculate integrals in this course. You just need to remember that for continuous random variables,

- The pdf does not represent a probability.

- The integral of pdf represents a probability.

- The cdf is, by definition, a probability, and it is also computed as an integral of the pdf.

Every probability density function must satisfy two properties:

- $f(x) \ge 0$ for all $x$.

- $\int_{-\infty}^{\infty} f(x)\,dx = 1$.

The second property tells us that $X$ takes some value on the real line with probability 1: $P(-\infty < X < \infty) = 1$.

In fact, any function satisfying the two properties can serve as a probability density function for some random variable.

Density Curve

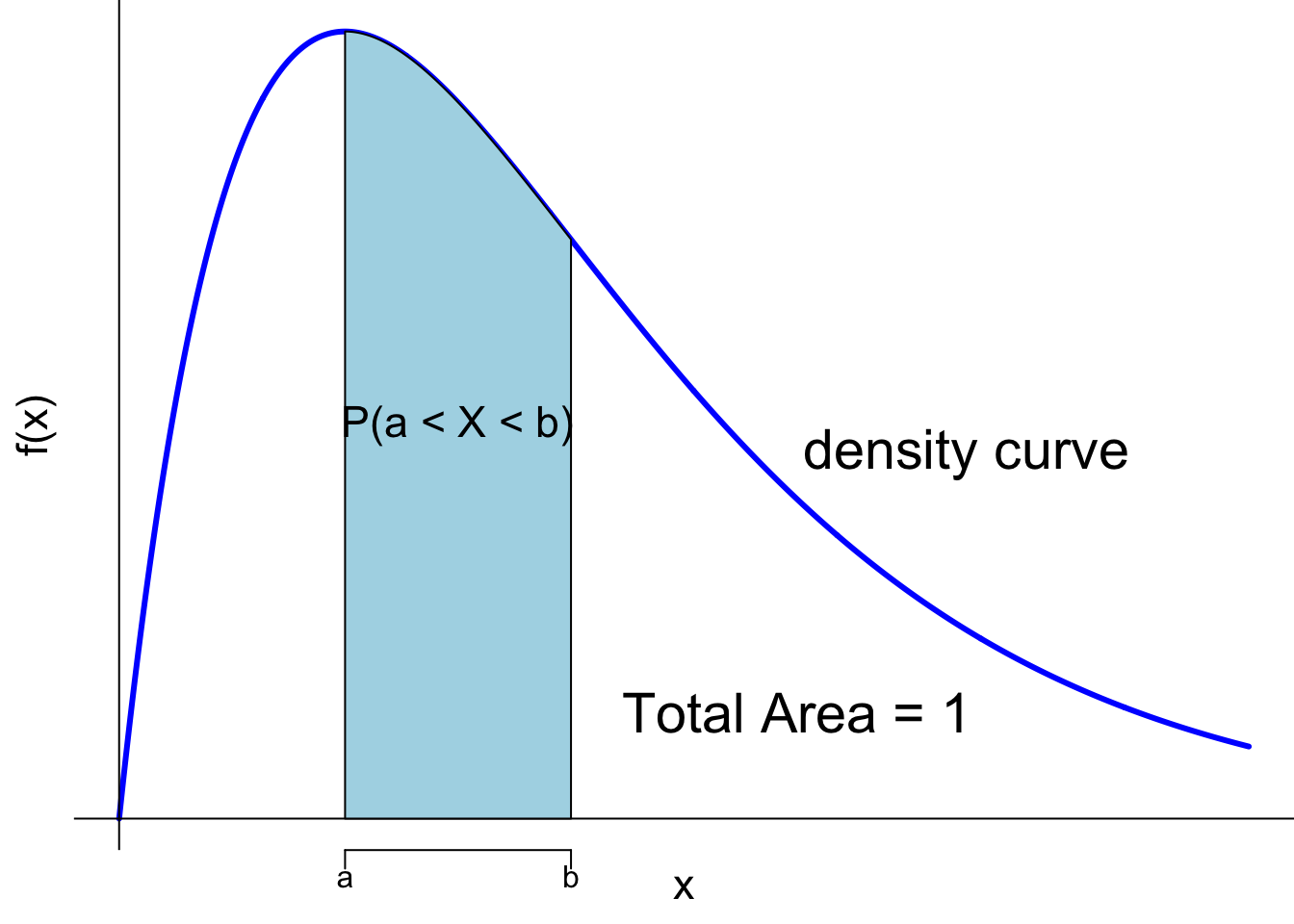

A probability density function generates a graph called a density curve that shows the relative likelihood of a random variable taking values near each possible value. Figure 11.1 shows an example of a density curve, colored in blue. From Calculus 101, we have two important findings:

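- The integral of $f(x)$ from $a$ to $b$ is the area under the density curve between $a$ and $b$, which is exactly $P(a \le X \le b) = \int_a^b f(x)\,dx$.

- The total area under any density curve is equal to 1: $\int_{-\infty}^{\infty} f(x)\,dx = 1$.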

Keep in mind that the area under the density curve represents probability, not the density value or the height of the density curve at some value of $x$.

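One question is: for a continuous random variable $X$, what is the probability that $X$ equals some particular value $a$? The answer is zero, because the region under the density curve above a single point has zero width:
$$P(X = a) = \int_a^a f(x)\,dx = 0.$$

Therefore, for a continuous random variable $X$, including or excluding the endpoints does not change the probability:
$$P(a \le X \le b) = P(a < X \le b) = P(a \le X < b) = P(a < X < b).$$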
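To make these properties concrete, here is a small numerical sketch in Python. It assumes a hypothetical pdf $f(x) = 2x$ on $[0, 1]$ (not from the text) and uses scipy.integrate.quad to compute areas numerically instead of doing the integrals by hand.

```python
from scipy.integrate import quad

def f(x):
    """Hypothetical pdf for illustration: f(x) = 2x for 0 <= x <= 1, and 0 elsewhere."""
    return 2 * x if 0 <= x <= 1 else 0.0

# A density value is not a probability: it can be larger than 1
print(f(0.9))  # 1.8

# Total area under the density curve (should be 1)
total_area, _ = quad(f, 0, 1)

# P(0.2 <= X <= 0.5) is the area under the curve between 0.2 and 0.5
p_interval, _ = quad(f, 0.2, 0.5)

# P(X = 0.5) is the area over a single point, which is 0
p_point, _ = quad(f, 0.5, 0.5)

def F(x):
    """cdf F(x) = P(X <= x), obtained by integrating the pdf up to x."""
    upper = min(max(x, 0.0), 1.0)  # the pdf is 0 outside [0, 1]
    area, _ = quad(f, 0, upper)
    return area

print(total_area, p_interval, p_point)
print(F(0.5) - F(0.2))  # same area as p_interval
```

Note that f(0.9) = 1.8 exceeds 1, which is fine for a density but impossible for a probability, and that the difference of two cdf values gives the same interval probability as integrating the pdf directly.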

Commonly Used Continuous Distributions

There are tons of continuous distributions out there, and we won’t be able to discuss all of them. In this book, we will touch on the normal (Gaussian), Student’s t, chi-square, and F distributions. Some other popular distributions include the uniform, exponential, gamma, beta, inverse gamma, and Cauchy distributions. If you are interested in learning more distributions and their properties, please take a calculus-based probability theory course.

11.2 Normal (Gaussian) Distribution

We now discuss the most important distribution in probability and statistics, the normal distribution or Gaussian distribution.1

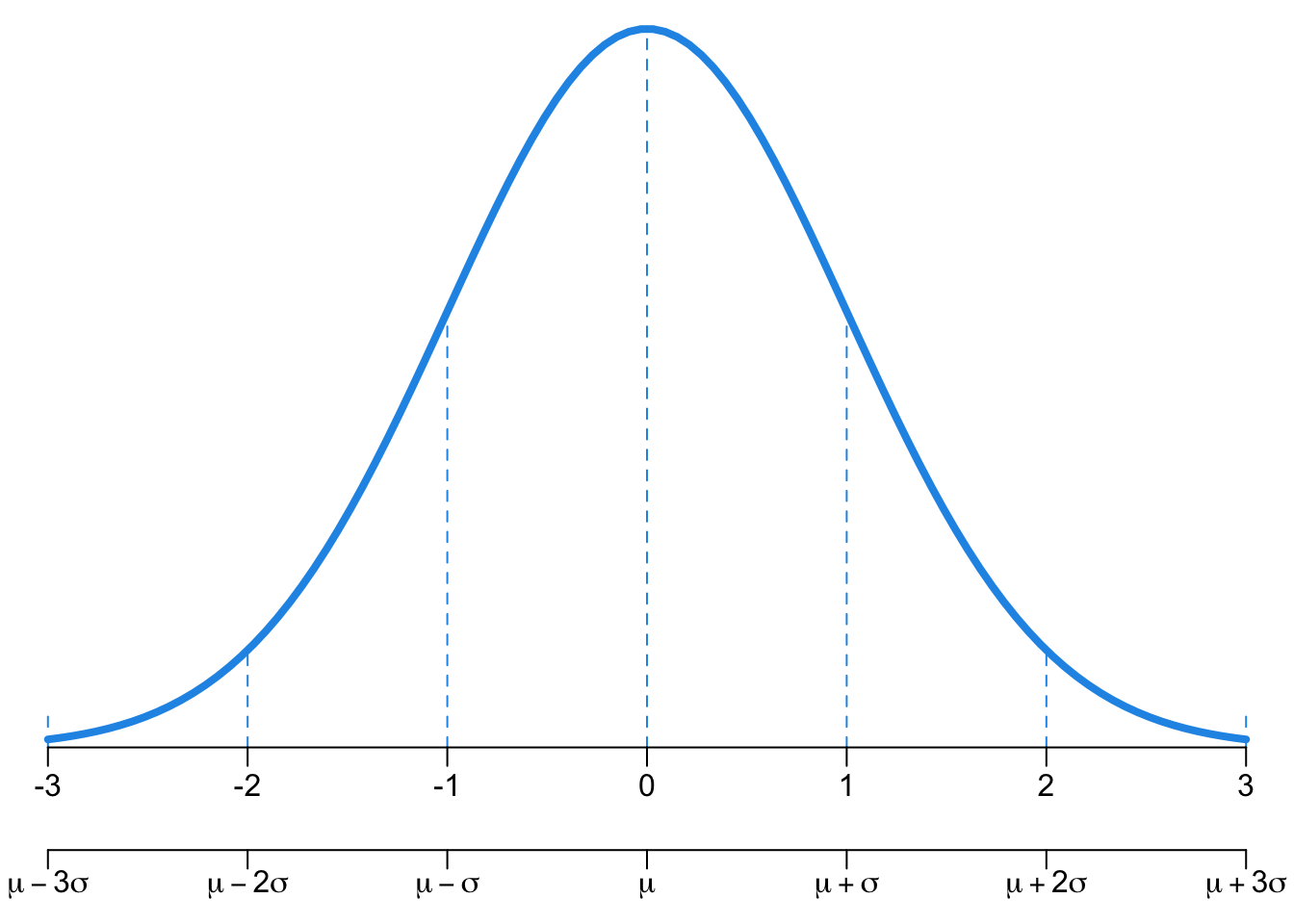

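The normal distribution, referred to as $N(\mu, \sigma^2)$, has the probability density function
$$f(x) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right), \quad -\infty < x < \infty,$$
where $\mu$ is the mean and $\sigma$ is the standard deviation.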

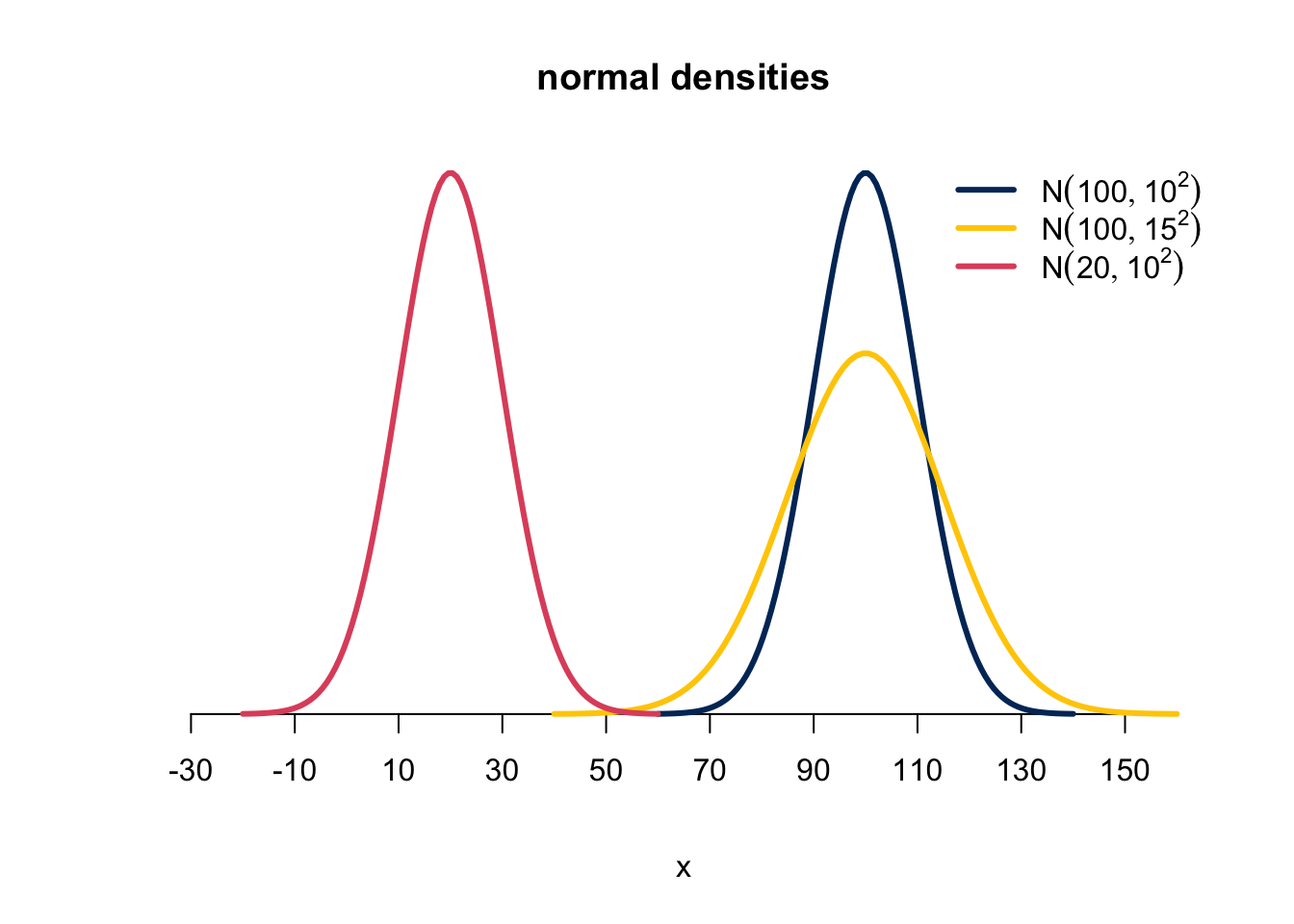

Figure 11.2 shows examples of normal density curves and how they change with different means and standard deviations. The normal distribution is always bell-shaped and symmetric about the mean $\mu$.
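As a sketch, the code below writes the normal density formula out by hand and checks it against scipy's norm.pdf, then checks the symmetry about $\mu$ and how $\sigma$ changes the height of the peak. The values of mu, sigma, and d are arbitrary choices for illustration.

```python
import numpy as np
from scipy.stats import norm

def normal_pdf(x, mu, sigma):
    """Normal density written directly from the formula for f(x)."""
    return np.exp(-(x - mu) ** 2 / (2 * sigma ** 2)) / (np.sqrt(2 * np.pi) * sigma)

mu, sigma = 1100, 200  # illustrative values only
xs = np.linspace(500, 1700, 13)

# 1. The hand-written formula matches scipy's norm.pdf
print(np.allclose(normal_pdf(xs, mu, sigma), norm.pdf(xs, loc=mu, scale=sigma)))  # True

# 2. Symmetry about the mean: f(mu - d) equals f(mu + d)
d = 250
print(np.isclose(norm.pdf(mu - d, loc=mu, scale=sigma),
                 norm.pdf(mu + d, loc=mu, scale=sigma)))  # True

# 3. A larger sigma gives a flatter curve: the peak 1 / (sqrt(2*pi) * sigma) shrinks
peaks = [norm.pdf(mu, loc=mu, scale=s) for s in (100, 200, 400)]
print(peaks)
```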


11.3 Standardization and Z-Scores

Standardization is a transformation that allows us to convert any normal distribution $N(\mu, \sigma^2)$ into the standard normal distribution $N(0, 1)$.

Why do we want to perform standardization? We want to put data on a standardized scale, because it helps us make comparisons more easily. Later we will see why. Let’s first see how we can do standardization.

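If $X \sim N(\mu, \sigma^2)$, then the standardized variable
$$Z = \frac{X - \mu}{\sigma} \sim N(0, 1).$$

The $z$-score of an observation $x$ is the number of standard deviations it falls above or below the mean:
$$z = \frac{x - \mu}{\sigma}.$$

Observations larger than the mean have positive $z$-scores.

Observations smaller than the mean have negative $z$-scores.

A $z$-score of $k > 0$ means the observation is $k$ standard deviations above the mean.

A $z$-score of $-k < 0$ means the observation is $k$ standard deviations below the mean.

If $Z \sim N(0, 1)$, then reversing the transformation gives $X = \mu + \sigma Z \sim N(\mu, \sigma^2)$, so an observation with $z$-score $z$ is $x = \mu + z\sigma$.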

Any transformation of a random variable is still a random variable, generally with a different probability distribution.
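A quick simulation can illustrate that standardizing draws from $N(\mu, \sigma^2)$ gives values with mean about 0 and standard deviation about 1, that $X = \mu + \sigma Z$ undoes the transformation, and that probabilities are unchanged by standardizing. This is a sketch: the seed, the sample size, and the values $\mu = 1100$, $\sigma = 200$ are arbitrary assumptions.

```python
import numpy as np
from scipy.stats import norm

mu, sigma = 1100, 200           # illustrative parameters
rng = np.random.default_rng(1)  # fixed seed so the sketch is reproducible

x = rng.normal(loc=mu, scale=sigma, size=100_000)  # draws from N(mu, sigma^2)
z = (x - mu) / sigma                                # standardize: Z = (X - mu) / sigma

print(round(z.mean(), 2), round(z.std(), 2))  # close to 0 and 1

x_back = mu + sigma * z         # reverse transformation: X = mu + sigma * Z
print(np.allclose(x_back, x))   # True

# Probabilities are preserved: P(X <= x0) equals P(Z <= (x0 - mu) / sigma)
x0 = 1250
print(np.isclose(norm.cdf(x0, loc=mu, scale=sigma), norm.cdf((x0 - mu) / sigma)))  # True
```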

Graphical Illustration

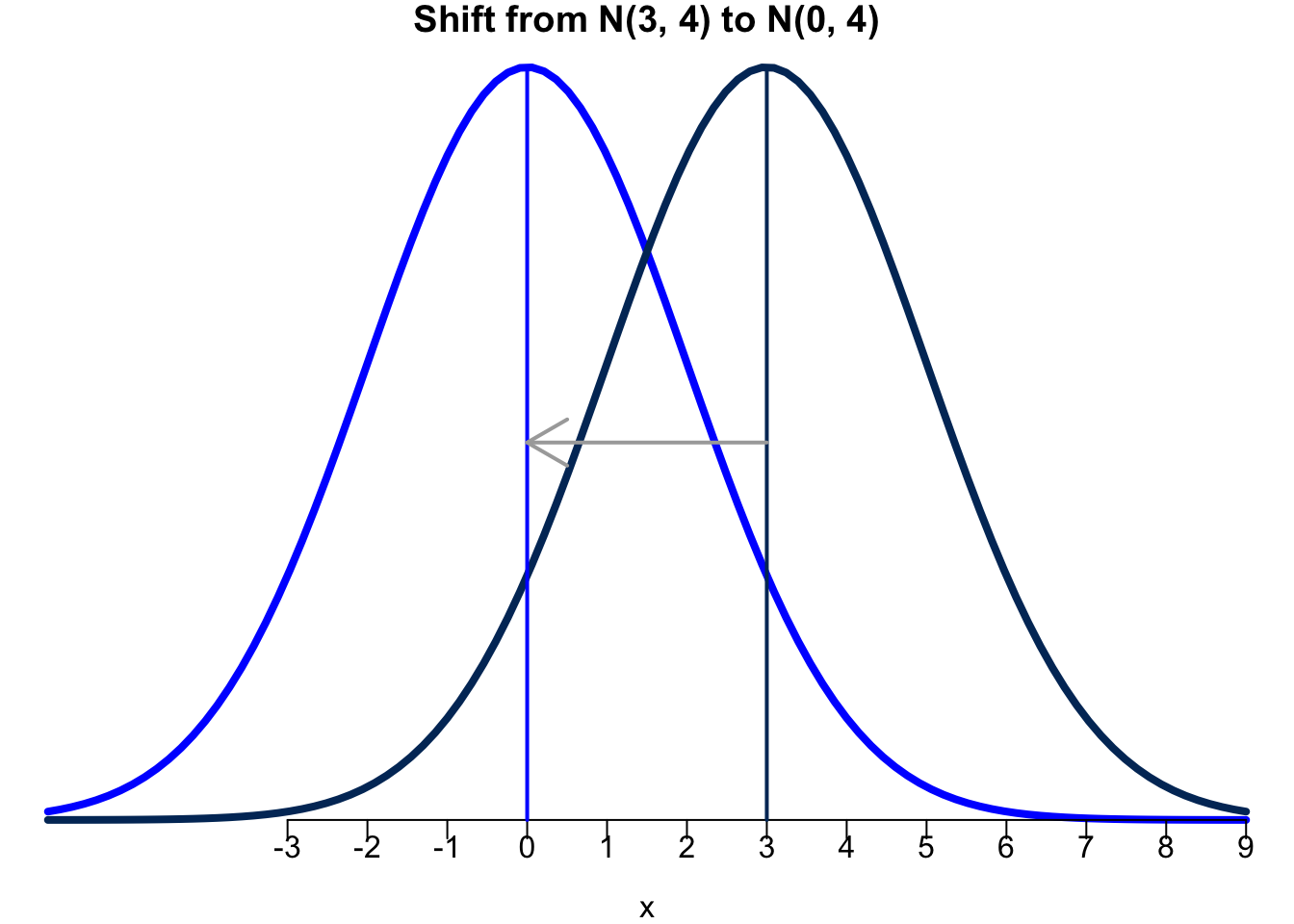

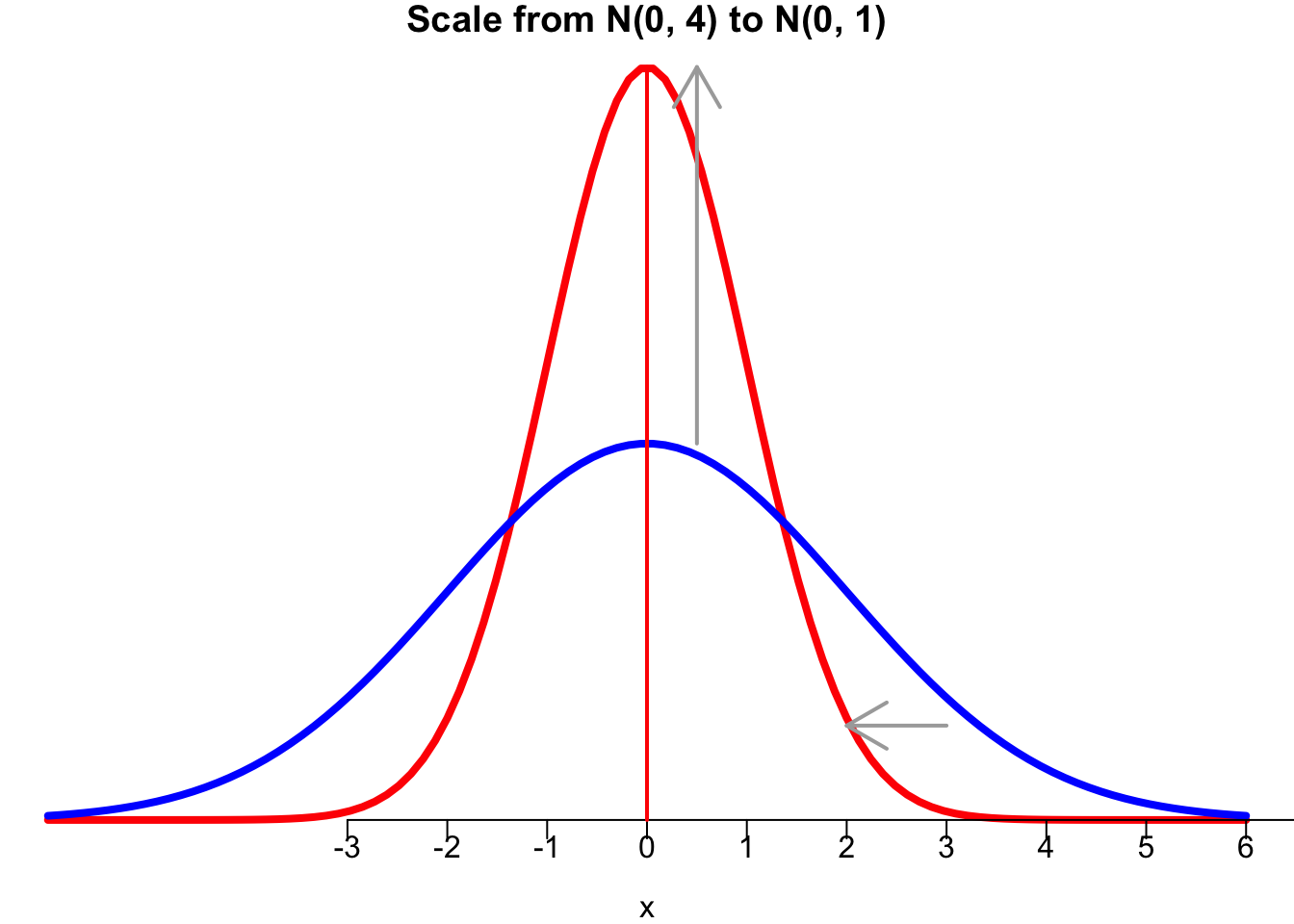

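What does the standardization really do? It first subtracts the mean $\mu$ from the original variable value $x$, and then divides the difference by the standard deviation $\sigma$.

- First, $X - \mu$ shifts the distribution so that it is centered at 0: $X - \mu \sim N(0, \sigma^2)$.

- Second, dividing by $\sigma$ rescales the spread so that the standard deviation becomes 1: $\dfrac{X - \mu}{\sigma} \sim N(0, 1)$.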

If

A value of

SAT and ACT Example (OS Example 4.2)

Standardization can help us compare the performance of students on the SAT and ACT, which both have nearly normal distributions. The table below lists the parameters for each distribution.

| Measure | SAT | ACT |
|---|---|---|
| Mean | 1100 | 21 |
| SD | 200 | 6 |

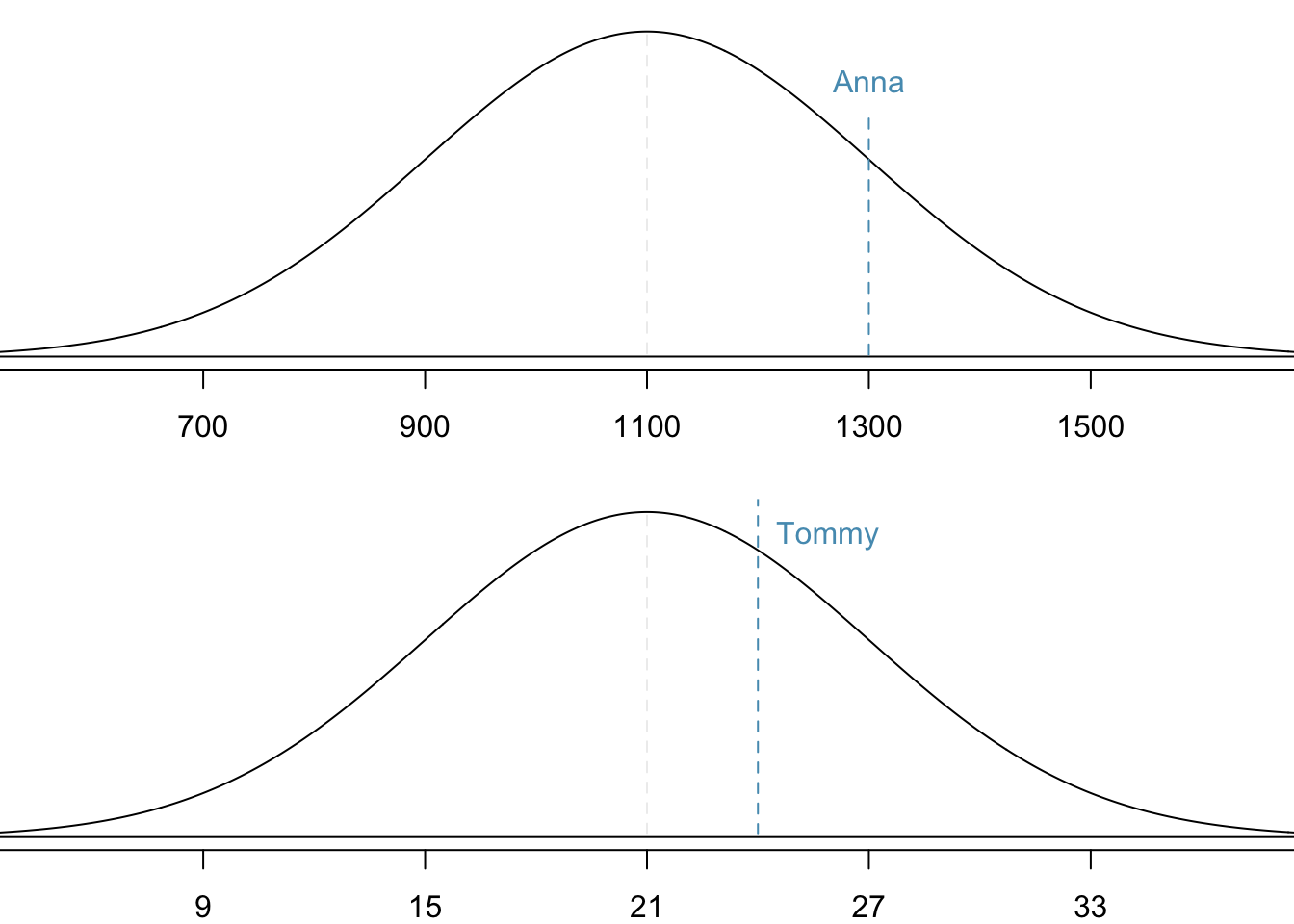

Suppose Anna scored a 1300 on her SAT and Tommy scored a 24 on his ACT. We want to determine whether Anna or Tommy performed better on their respective tests.

Standardization

Since SAT and ACT are measured on different scales, we cannot compare the two scores unless we measure them on the same scale. This is what standardization does. Both SAT and ACT scores are normally distributed, but with different means and variances. We first transform the two distributions into the standard normal distribution, and then examine Anna's and Tommy's performance by checking where their scores fall on the standard normal distribution.

The idea is that we first measure the two scores using the same scale and unit. In both cases, the new transformed score is the number of standard deviations the original score is away from its original mean; that is, both SAT and ACT scores are measured by their z-scores. Then if A’s z-score is larger than B’s z-score, we know that A performed better than B, because A's score is relatively higher within A's own distribution.

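The z-scores of Anna and Tommy are
$$z_{\text{Anna}} = \frac{1300 - 1100}{200} = 1, \qquad z_{\text{Tommy}} = \frac{24 - 21}{6} = 0.5.$$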

This standardization tells us that Anna scored 1 standard deviation above the mean and Tommy scored 0.5 standard deviations above the mean. From this information, we can conclude that Anna performed better on the SAT than Tommy performed on the ACT.
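The same computation in Python, as a minimal sketch; the helper z_score() is a hypothetical name introduced here, not a library function.

```python
# Parameters from the table above
sat_mean, sat_sd = 1100, 200
act_mean, act_sd = 21, 6

def z_score(x, mean, sd):
    """Number of standard deviations x lies above (+) or below (-) the mean."""
    return (x - mean) / sd

z_anna = z_score(1300, sat_mean, sat_sd)
z_tommy = z_score(24, act_mean, act_sd)
print(z_anna, z_tommy)  # 1.0 0.5

better = "Anna" if z_anna > z_tommy else "Tommy"
print(better, "did better relative to the other test takers")
```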

Figure 11.7 shows the SAT and ACT distributions. Note that the two distributions are depicted using the same density curve, as if they were measured on the same scale, the standard normal distribution. Clearly, Anna did better relative to the other test takers than Tommy did.

11.4 Tail Areas and Normal Percentiles

Finding Tail Areas

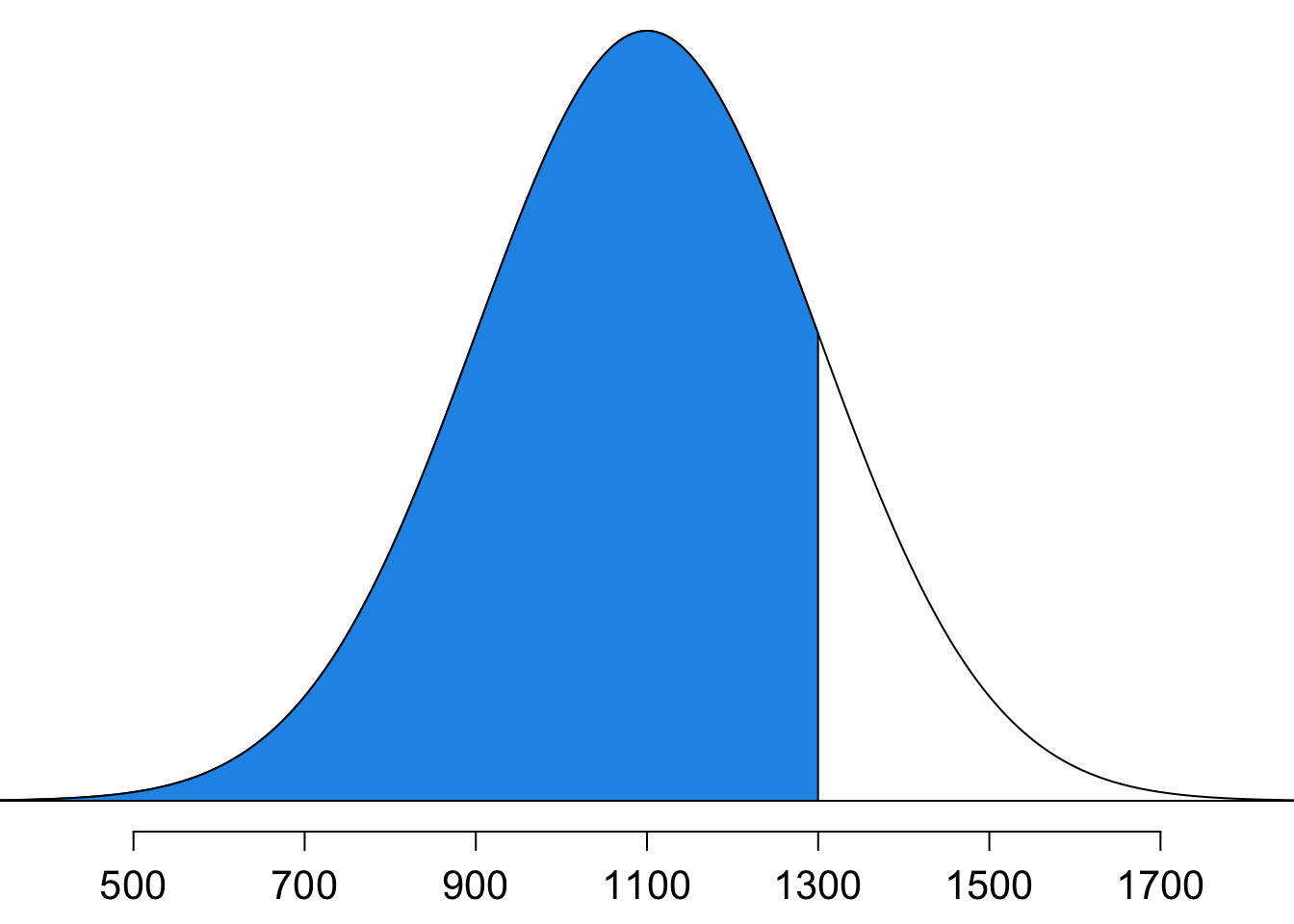

Finding tail areas allows us to determine the percentage of cases that are above or below a certain score. Going back to the SAT and ACT example, this can help us determine the fraction of students who have an SAT score below Anna’s score of 1300. This is the same as determining the percentile Anna scored at, which is the percentage of cases with lower scores than Anna's. Therefore, we are looking for $P(X < 1300)$, where $X \sim N(1100, 200^2)$, or equivalently $P(Z < 1)$ with $Z \sim N(0, 1)$.

With mean and sd representing the mean and standard deviation of a normal distribution, we use

- `pnorm(q, mean, sd)` to compute $P(X \le q)$

- `pnorm(q, mean, sd, lower.tail = FALSE)` to compute $P(X > q)$

With loc and scale representing the mean and standard deviation of a normal distribution, we use

- `norm.cdf(x, loc, scale)` to compute $P(X \le x)$

- `norm.sf(x, loc, scale)` to compute $P(X > x)$

```python
from scipy.stats import norm, binom

norm.cdf(x=1, loc=0, scale=1)
#> 0.8413447460685429
norm.cdf(1300, loc=1100, scale=200)
#> 0.8413447460685429
```

Notice that the z-score 1 in the standard normal is equivalent to 1300 in $N(1100, 200^2)$, so Anna scored at about the 84th percentile.
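Extending the same idea, we can compute Tommy's percentile on the ACT and compare it with Anna's. This is a sketch using the SAT and ACT parameters from the table above.

```python
from scipy.stats import norm

# Percentile = P(score below the observed score) within each test's own distribution
anna_pct = norm.cdf(1300, loc=1100, scale=200)  # SAT, z = 1
tommy_pct = norm.cdf(24, loc=21, scale=6)       # ACT, z = 0.5
print(round(anna_pct, 4), round(tommy_pct, 4))  # 0.8413 0.6915
```

Anna is at roughly the 84th percentile and Tommy at roughly the 69th, which agrees with the comparison of their z-scores.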

Second ACT and SAT Example

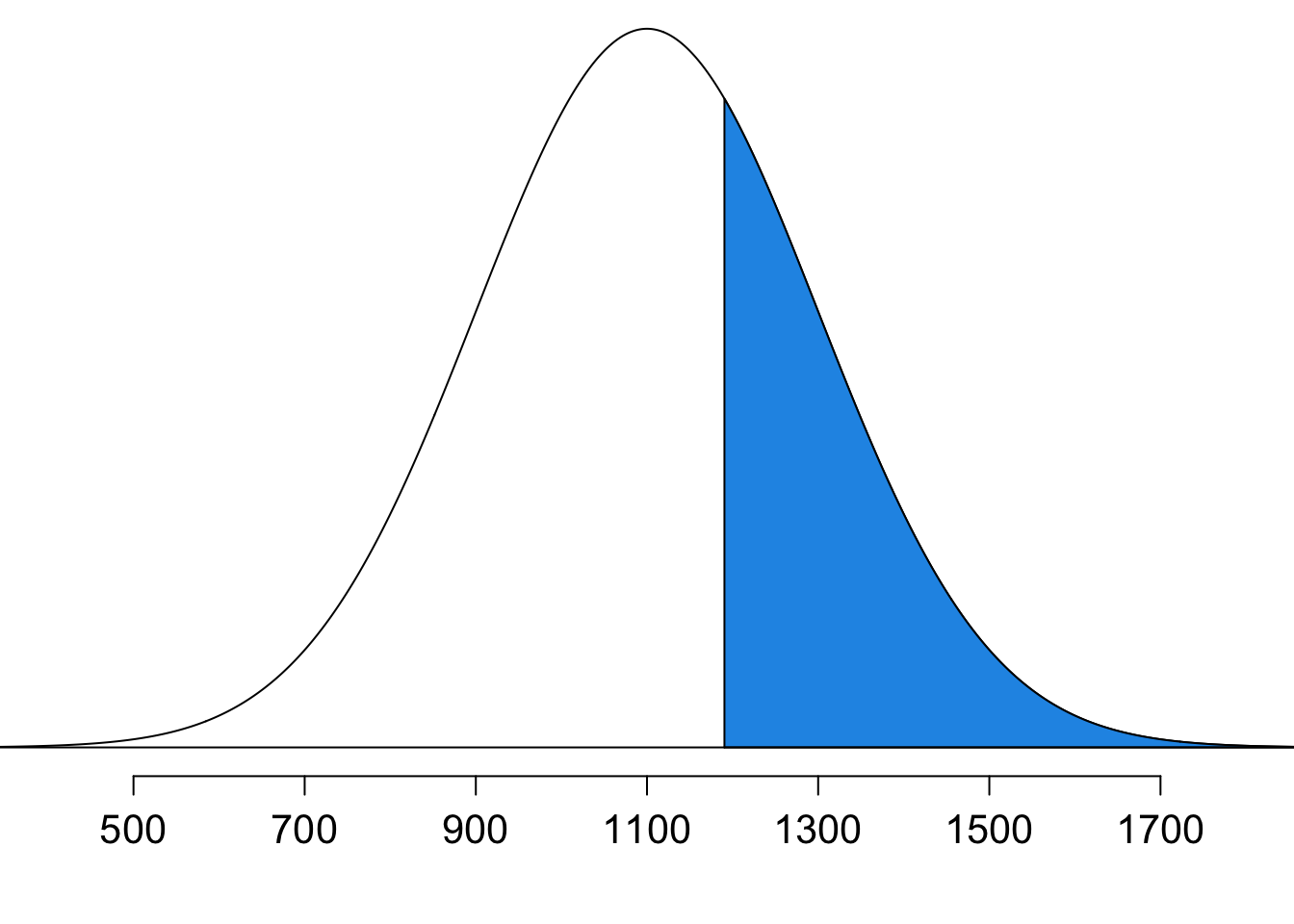

Shannon is an SAT taker, and nothing else is known about her SAT aptitude. With SAT scores following $N(1100, 200^2)$, what is the probability that Shannon scores at least 1190 on her SAT?

Let’s get the probability step by step. The first step is to figure out the probability we want to compute from the description of the question.

- Step 1: State the problem

  - We want to compute $P(X \ge 1190)$, where $X \sim N(1100, 200^2)$ is Shannon's SAT score.

If you are an expert like me, you may already know how to get the probability using R once you know what you want to compute. But if you are a beginner, I strongly recommend drawing a normal curve and figuring out which area is your goal.

- Step 2: Draw a picture

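Note that Figure 11.10 reflects the fact that $P(X \ge 1190) = 1 - P(X < 1190)$: the shaded upper tail is the total area of 1 minus the area to the left of 1190.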

The next step, which is not necessary, is to find the z-score. Using z-scores helps us write shorter R code to compute the desired probability.

- Step 3: Find the $z$-score of 1190: $z = \dfrac{1190 - 1100}{200} = 0.45$, so $P(X \ge 1190) = P(Z \ge 0.45)$.

At this point, we can obtain the target probability with the pnorm() function.

- Step 4: Find the area using pnorm()

```r
1 - pnorm(0.45)
#> [1] 0.3263552
```

When we use R pnorm() to compute normal probabilities, standardization is not a must. However, if we don’t use z-scores, we must specify the mean and SD of the original distribution in pnorm(x, mean = mu, sd = sigma). Otherwise, R does not know which normal distribution we are considering. For example,

```r
1 - pnorm(1190, mean = 1100, sd = 200)
#> [1] 0.3263552
```

By default, pnorm() uses the standard normal distribution, assuming mean = 0 and sd = 1. So if we use z-scores to compute probabilities, we don’t need to specify the mean and standard deviation, and our code is shorter:

```r
1 - pnorm(0.45)
#> [1] 0.3263552
```

Any probability can be computed using the “less than” form (lower or left tail). In the previous example, we use $P(X \ge 1190) = 1 - P(X < 1190)$.

This step is not necessary either, and we can compute the upper-tail probability directly with pnorm(). However, if the calculation involves the “greater than” form, that is, the upper or right tail of the distribution, we need to add lower.tail = FALSE in pnorm(). For example,

```r
pnorm(1190, mean = 1100, sd = 200, lower.tail = FALSE)
#> [1] 0.3263552
```

By default, lower.tail = TRUE, and pnorm(q, ...) finds the probability $P(X \le q)$; with lower.tail = FALSE, it finds $P(X > q)$.

- Step 4: Find the area using norm.cdf()

```python
1 - norm.cdf(0.45)
#> 0.32635522028791997
```

When we use Python norm.cdf() to compute normal probabilities, standardization is not a must. However, if we don’t use z-scores, we must specify the mean and SD of the original distribution in norm.cdf(x, loc=mu, scale=sigma). Otherwise, Python does not know which normal distribution we are considering. For example,

```python
1 - norm.cdf(1190, loc=1100, scale=200)
#> 0.32635522028791997
```

By default, norm.cdf() uses the standard normal distribution, assuming loc=0 and scale=1. So if we use z-scores to compute probabilities, we don’t need to specify the mean and standard deviation, and our code is shorter:

```python
1 - norm.cdf(0.45)
#> 0.32635522028791997
```

Any probability can be computed using the “less than” form (lower or left tail). In the previous example, we use $P(X \ge 1190) = 1 - P(X < 1190)$.

This step is not necessary either, and we can directly compute the upper-tail probability with norm.sf(), the survival function $P(X > x) = 1 - P(X \le x)$. For example,

```python
norm.sf(1190, loc=1100, scale=200)
#> 0.32635522028791997
```

Normal Percentiles

Quite often we want to know what score we need in order to be in the top 10% of test takers, or the minimum score we need to stay out of the bottom 20%. To answer such questions, we need to find a percentile, or quantile, of the underlying distribution.

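To get the $100p$-th percentile of $N(\mu, \sigma^2)$, that is, the value $x$ such that $P(X \le x) = p$, with mean and sd representing the mean and standard deviation, we use

- `qnorm(p, mean, sd)` to get a value of $x$ such that $P(X \le x) = p$

- `qnorm(p, mean, sd, lower.tail = FALSE)` to get a value of $x$ such that $P(X > x) = p$

With loc and scale representing the mean and standard deviation, we use

- `norm.ppf(p, loc, scale)` to get a value of $x$ such that $P(X \le x) = p$ (ppf is short for percent point function, the inverse of the cdf)

- `norm.isf(p, loc, scale)` to get a value of $x$ such that $P(X > x) = p$ (isf is short for inverse survival function)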
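As a sketch of how these functions answer the questions at the start of this subsection, the code below assumes the SAT distribution $N(1100, 200^2)$ used in this chapter and finds the score needed to be in the top 10% and the minimum score needed to stay out of the bottom 20%.

```python
from scipy.stats import norm

mu, sigma = 1100, 200  # SAT parameters used in this chapter

# Top 10%: find x with P(X > x) = 0.10 (upper tail, inverse survival function)
top10 = norm.isf(0.10, loc=mu, scale=sigma)
# The same value from the lower tail: P(X <= x) = 0.90
top10_ppf = norm.ppf(0.90, loc=mu, scale=sigma)

# Stay out of the bottom 20%: find x with P(X <= x) = 0.20
bottom20 = norm.ppf(0.20, loc=mu, scale=sigma)

print(round(top10), round(bottom20))  # 1356 932

# ppf inverts cdf: plugging the percentile back into the cdf recovers 0.90
print(norm.cdf(top10_ppf, loc=mu, scale=sigma))
```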

SAT and ACT Example

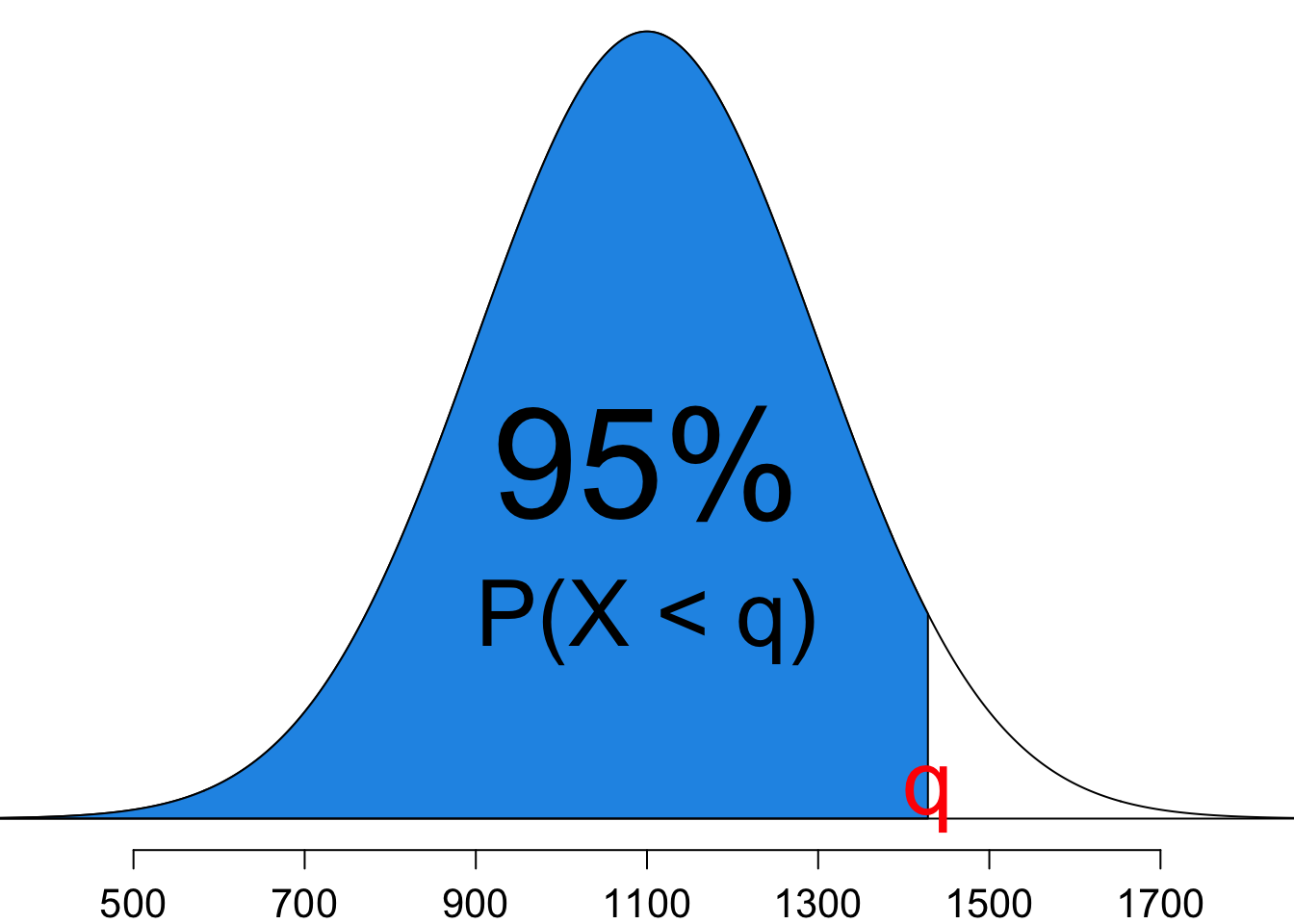

Back to our SAT example. What is the 95th percentile for SAT scores?

Keep in mind that a percentile or quantile is a value of the random variable $X$, not a probability.

The first step again is to figure out what we want. Finding the 95th percentile means finding the value $x$ such that $P(X < x) = 0.95$, where $X \sim N(1100, 200^2)$.

- Step 1: State the problem

  - We want to find $x$ such that $P(X < x) = 0.95$.

The whole idea is shown graphically in Figure 11.11. We already know the percentage 95%. All we need to do is to find the value $x$ on the SAT scale that has 95% of the area under the density curve to its left.

- Step 2: Draw a picture

As when finding probabilities, we can first standardize when finding quantiles, although it is not necessary. So we use qnorm() to find the z-score $z_{0.95}$ such that $P(Z < z_{0.95}) = 0.95$, where $Z \sim N(0, 1)$.

- Step 3: Find $z_{0.95}$ using qnorm():

```r
(z_95 <- qnorm(0.95))
#> [1] 1.644854
```

Now, since we are interested in the 95th percentile of SAT, not the z-score, we need to transform the 95th percentile of $N(0, 1)$ back to the SAT scale using $x = \mu + z\sigma$.

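- Step 4: Find the 95th percentile of SAT

  - $x_{0.95} = \mu + z_{0.95}\,\sigma$
  - $x_{0.95} = 1100 + 1.645 \times 200 \approx 1429$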

```r
(x_95 <- 1100 + z_95 * 200)
#> [1] 1428.971
```

Therefore, the 95th percentile for SAT scores is 1429.

Note that we can directly find the 95th percentile of SAT without standardization. We just need to remember to stay in the original SAT distribution by explicitly specifying mean = 1100 and sd = 200 in the qnorm() function, as we do for pnorm().

```r
qnorm(p = 0.95, mean = 1100, sd = 200)
#> [1] 1428.971
```

- Step 3: Find $z_{0.95}$ using norm.ppf():

```python
z_95 = norm.ppf(0.95)
z_95
#> 1.6448536269514722
```

Now, since we are interested in the 95th percentile of SAT, not the z-score, we need to transform the 95th percentile of $N(0, 1)$ back to the SAT scale using $x = \mu + z\sigma$.

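- Step 4: Find the 95th percentile of SAT

  - $x_{0.95} = \mu + z_{0.95}\,\sigma$
  - $x_{0.95} = 1100 + 1.645 \times 200 \approx 1429$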

```python
x_95 = 1100 + z_95 * 200
x_95
#> 1428.9707253902943
```

Therefore, the 95th percentile for SAT scores is 1429.

Note that we can directly find the 95th percentile of SAT without standardization. We just need to remember to stay in the original SAT distribution by explicitly specifying loc=1100 and scale=200 in the norm.ppf() function, as we do for norm.cdf().

```python
norm.ppf(0.95, loc=1100, scale=200)
#> 1428.9707253902943
```

11.5 Finding Probabilities

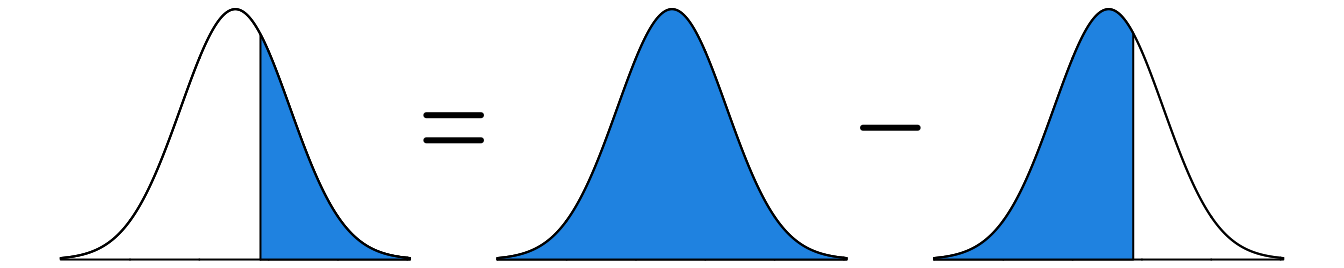

👉 To find a probability, if you are a beginner, it is always good to draw and label the normal curve and shade the area of interest. Below is a summary of how we can use pnorm() to compute various kinds of probabilities.

- 👉 Less than, $P(X < x)$

  - `pnorm(z)`
  - `pnorm(x, mean = mu, sd = sigma)`

- 👉 Greater than, $P(X > x)$

  - `1 - pnorm(z)`
  - `1 - pnorm(x, mean = mu, sd = sigma)`
  - `pnorm(x, mean = mu, sd = sigma, lower.tail = FALSE)`

- 👉 Between two numbers, $P(a < X < b)$

  - `pnorm(z_b) - pnorm(z_a)`
  - `pnorm(b, mean = mu, sd = sigma) - pnorm(a, mean = mu, sd = sigma)`

- 👉 Outside of two numbers, $P(X < a \text{ or } X > b)$ with $a < b$

  - `pnorm(z_a) + pnorm(z_b, lower.tail = FALSE)`
  - `pnorm(z_a) + 1 - pnorm(z_b)`
  - `pnorm(a, mean = mu, sd = sigma) + pnorm(b, mean = mu, sd = sigma, lower.tail = FALSE)`
  - `pnorm(a, mean = mu, sd = sigma) + 1 - pnorm(b, mean = mu, sd = sigma)`

Here z, z_a, and z_b denote the z-scores of x, a, and b.

👉 To find a probability, if you are a beginner, it is always good to draw and label the normal curve and shade the area of interest. Below is a summary of how we can use norm.cdf() and norm.sf() to compute various kinds of probabilities.

- 👉 Less than, $P(X < x)$

  - `norm.cdf(z)`
  - `norm.cdf(x, loc=mu, scale=sigma)`

- 👉 Greater than, $P(X > x)$

  - `1 - norm.cdf(z)`
  - `1 - norm.cdf(x, loc=mu, scale=sigma)`
  - `norm.sf(x, loc=mu, scale=sigma)`

- 👉 Between two numbers, $P(a < X < b)$

  - `norm.cdf(z_b) - norm.cdf(z_a)`
  - `norm.cdf(b, loc=mu, scale=sigma) - norm.cdf(a, loc=mu, scale=sigma)`

- 👉 Outside of two numbers, $P(X < a \text{ or } X > b)$ with $a < b$

  - `norm.cdf(z_a) + norm.sf(z_b)`
  - `norm.cdf(z_a) + 1 - norm.cdf(z_b)`
  - `norm.cdf(a, loc=mu, scale=sigma) + norm.sf(b, loc=mu, scale=sigma)`
  - `norm.cdf(a, loc=mu, scale=sigma) + 1 - norm.cdf(b, loc=mu, scale=sigma)`

Here z, z_a, and z_b denote the z-scores of x, a, and b.
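To see all four cases side by side, here is a small Python sketch using the SAT distribution $N(1100, 200^2)$ with illustrative cutoffs $a = 1000$ and $b = 1300$ (chosen for this example, not from the text). It also checks that complementary probabilities add up to 1.

```python
from scipy.stats import norm

mu, sigma = 1100, 200  # SAT parameters
a, b = 1000, 1300      # illustrative cutoffs
z_a, z_b = (a - mu) / sigma, (b - mu) / sigma  # z-scores: -0.5 and 1.0

less_than = norm.cdf(b, loc=mu, scale=sigma)    # P(X < b)
greater_than = norm.sf(b, loc=mu, scale=sigma)  # P(X > b)
between = norm.cdf(z_b) - norm.cdf(z_a)         # P(a < X < b), via z-scores
outside = norm.cdf(z_a) + norm.sf(z_b)          # P(X < a or X > b)

print(round(less_than, 4), round(greater_than, 4))  # 0.8413 0.1587
print(round(between, 4), round(outside, 4))         # 0.5328 0.4672
```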

11.6 Checking Normality

11.6.1 Normal quantile plot

If we use a statistical method whose assumptions are violated, the results and conclusions it produces can be misleading or even worthless. Many statistical methods assume that variables are normally distributed. Therefore, checking whether the normality assumption is reasonable is a key step.

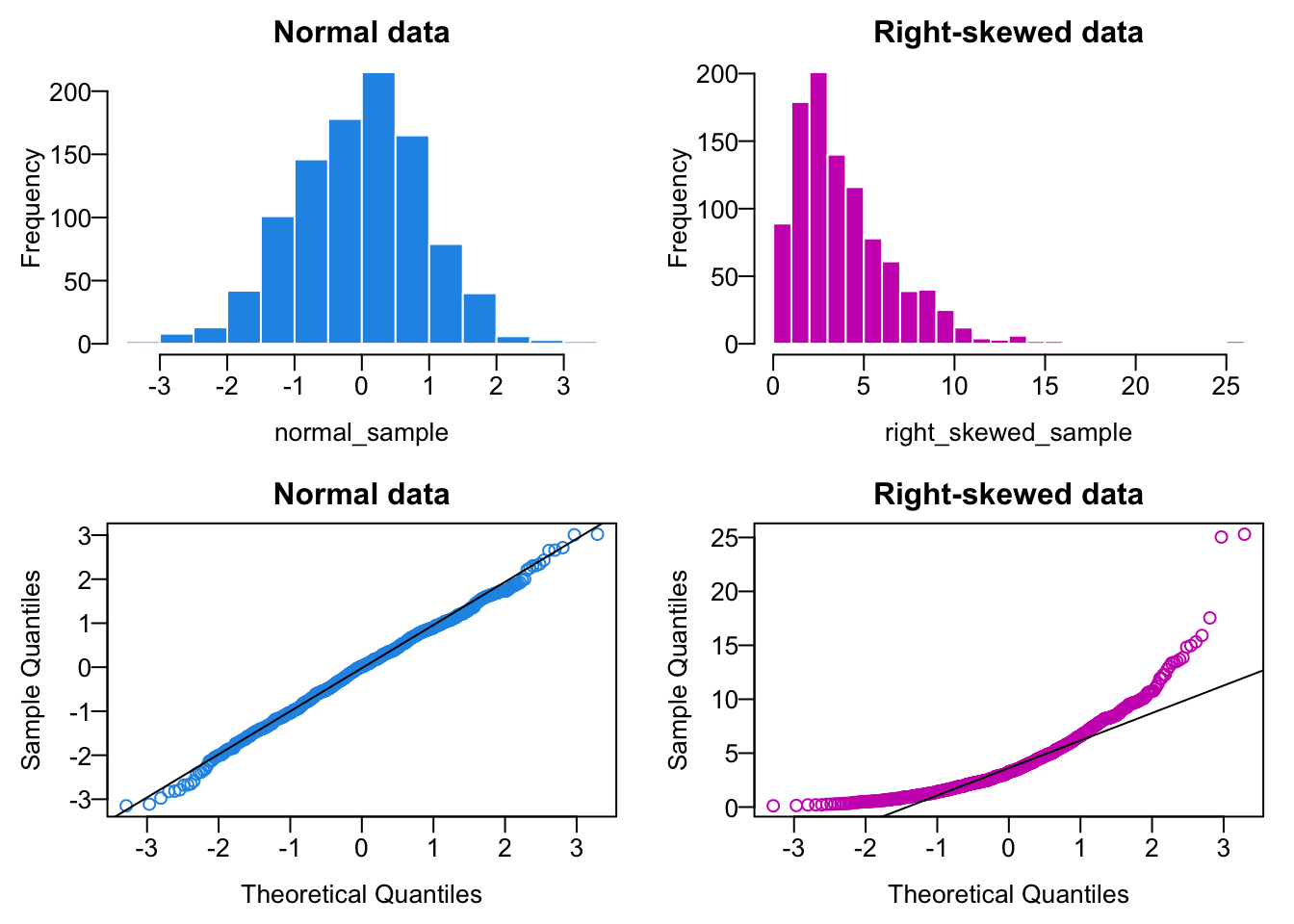

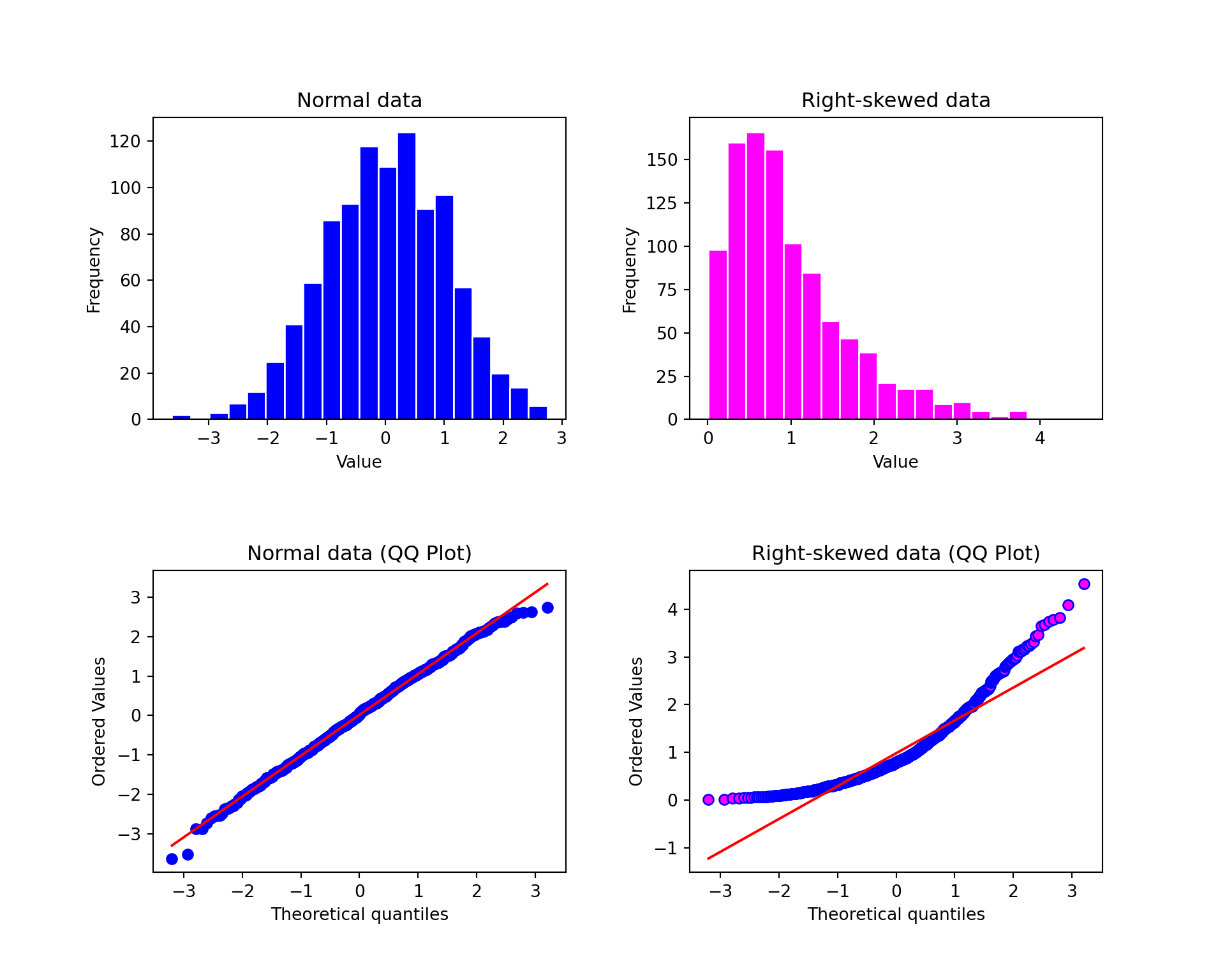

We can check this normality assumption using a so-called normal quantile plot (normal probability plot) or a Quantile-Quantile plot (QQ plot).

The construction of the QQ plot is technical, and we don’t need to dig into it at this moment. The bottom line is that if the data are (nearly) normally distributed, the points on the QQ plot will lie close to a straight line.

If the data are right-skewed, the points on the QQ plot will be convex-shaped. If the data are left-skewed, the points on the QQ plot will be concave-shaped.

To generate a QQ plot for checking normality in R, we can use qqnorm() and qqline(), where the first argument of each function is the sample data we would like to check. Figure 11.12 shows QQ plots (bottom row) for a normal and a right-skewed data sample. Since normal_sample actually comes from a normal distribution, its histogram looks bell-shaped, and its QQ plot looks like a nearly straight line. On the other hand, on the right-hand side we have a right-skewed data set, right_skewed_sample. Its QQ plot is clearly an upward curve, not a straight line, indicating that the sample data are not normally distributed.

To generate a QQ plot for checking normality in Python, we can use stats.probplot() from scipy, where the first argument is the sample data we would like to check. Figure 11.13 shows QQ plots (bottom row) for a normal and a right-skewed data sample. Since normal_sample actually comes from a normal distribution, its histogram looks bell-shaped, and its QQ plot looks like a nearly straight line. On the other hand, on the right-hand side we have a right-skewed data set, right_skewed_sample. Its QQ plot is clearly an upward curve, not a straight line, indicating that the sample data are not normally distributed.

```python
import numpy as np
import scipy.stats as stats
import matplotlib.pyplot as plt

# Illustrative data (assumption: the chapter's own samples are not shown here)
rng = np.random.default_rng(4720)
normal_sample = rng.normal(loc=0, scale=1, size=200)      # truly normal
right_skewed_sample = rng.exponential(scale=1, size=200)  # right-skewed

# A 2 x 2 grid: histograms on top, QQ plots at the bottom
fig, axs = plt.subplots(2, 2, figsize=(10, 8))

# First plot: Histogram of normal data
nor_hist = axs[0, 0].hist(normal_sample, bins=20, color='blue', edgecolor='white')
axs[0, 0].set_title("Normal data")
axs[0, 0].set_xlabel("Value")
axs[0, 0].set_ylabel("Frequency")

# Second plot: QQ plot for normal data
nor_qq = stats.probplot(normal_sample, dist="norm", plot=axs[1, 0])
axs[1, 0].set_title("Normal data (QQ Plot)")

# Third plot: Histogram of right-skewed data
skew_hist = axs[0, 1].hist(right_skewed_sample, bins=20, color='magenta', edgecolor='white')
axs[0, 1].set_title("Right-skewed data")
axs[0, 1].set_xlabel("Value")
axs[0, 1].set_ylabel("Frequency")

# Fourth plot: QQ plot for right-skewed data (recolor the data points)
skew_qq = stats.probplot(right_skewed_sample, dist="norm", plot=axs[1, 1])
axs[1, 1].get_lines()[0].set_markerfacecolor('magenta')
axs[1, 1].set_title("Right-skewed data (QQ Plot)")

# Display the plots
plt.tight_layout()
plt.show()
```

The code draws several subplots in one single figure, which makes it a bit long and complex. No need to worry about it too much.
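Besides looking at the plot, we can summarize how straight a QQ plot is by the correlation $r$ between the ordered sample values and the theoretical normal quantiles; stats.probplot() returns this $r$ along with its fitted line when no plot axis is given. The sketch below uses simulated samples (hypothetical seed and sample size). A value of $r$ close to 1 suggests approximate normality; this is only a descriptive summary, not a formal normality test.

```python
import numpy as np
import scipy.stats as stats

# Hypothetical simulated samples
rng = np.random.default_rng(2024)
normal_sample = rng.normal(loc=0, scale=1, size=200)
right_skewed_sample = rng.exponential(scale=1, size=200)

r_values = {}
for name, sample in [("normal", normal_sample), ("right-skewed", right_skewed_sample)]:
    # Without plot=..., probplot returns the QQ points and a least-squares line fit
    (theoretical_q, ordered_data), (slope, intercept, r) = stats.probplot(sample, dist="norm")
    r_values[name] = r
    print(f"{name}: r = {r:.3f}")
```

The right-skewed sample should give a noticeably smaller $r$, matching the curved pattern in its QQ plot.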

11.6.2 Normality test

If visualization is not enough to tell whether the data are far from normally distributed, we can use formal procedures to reach such a conclusion. Since these methods are based on hypothesis testing, which is first introduced in Chapter 16, we will talk about normality tests in later chapters after we learn hypothesis testing.

11.7 Normal Approximation to Binomial Distribution*

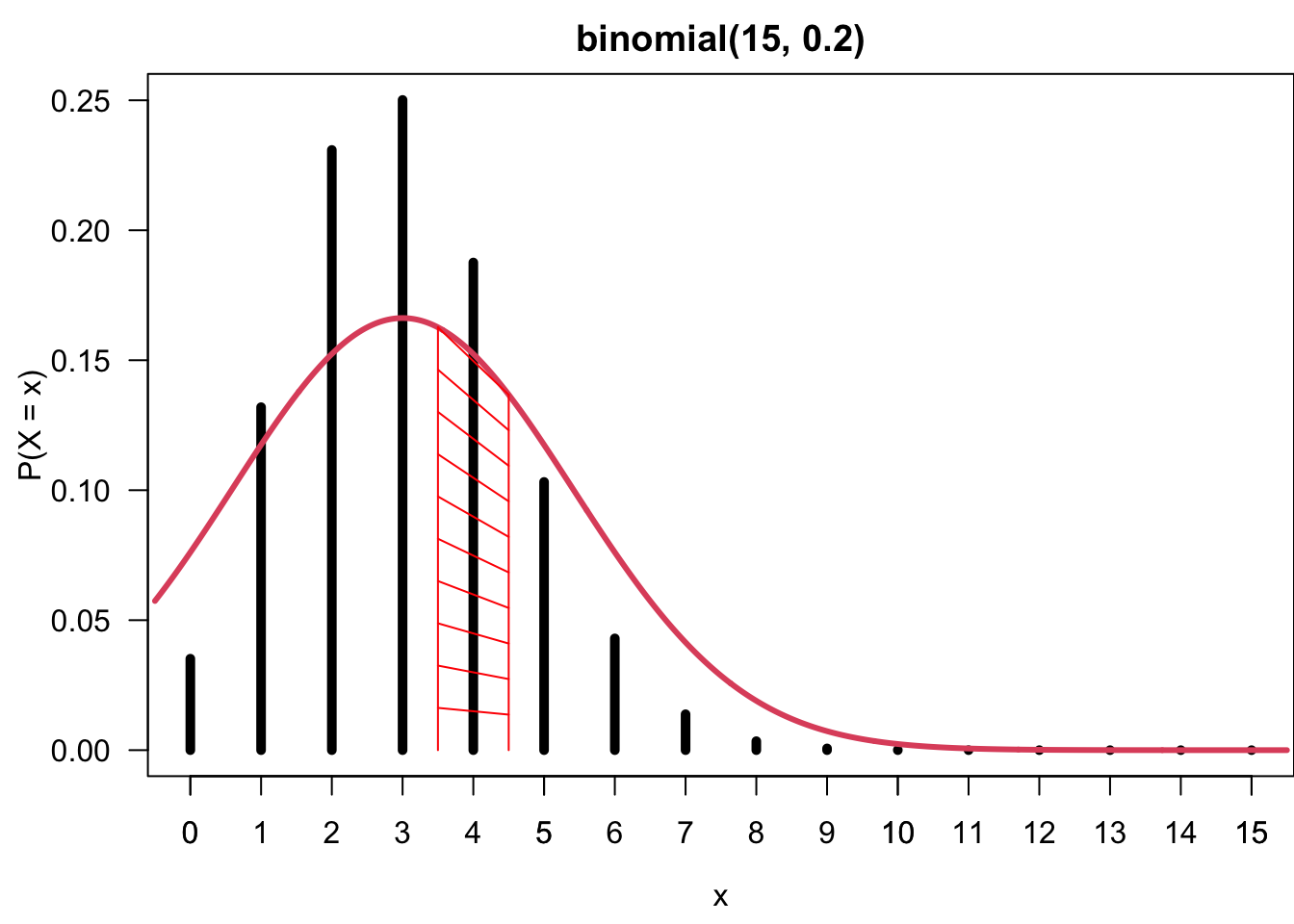

In Chapter 10, we learned that a binomial distribution can be approximated by a Poisson distribution. In this section, we learn that a binomial distribution can also be approximated by a normal distribution. How good the approximation is again depends on the values of $n$ and $p$.

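To determine a normal distribution, we need the parameters $\mu$ and $\sigma^2$. To approximate $X \sim \text{Binomial}(n, p)$, we match its mean and variance: $\mu = np$ and $\sigma^2 = np(1 - p)$.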

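When $n$ is large enough (a common rule of thumb is $np \ge 5$ and $n(1 - p) \ge 5$), $X$ is approximately distributed as $N(np, np(1 - p))$.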

Can you see any potential issue with using a normal distribution to approximate a binomial one? In fact, we are using a continuous normal distribution to approximate a discrete binomial distribution. In order to have a good approximation, especially when $n$ is not very large, we apply a continuity correction.

Continuity correction is made to transform each discrete binomial value $x$ into the interval $[x - 0.5, x + 0.5]$ on the continuous scale.

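By doing so, with $Y \sim N(np, np(1 - p))$, the binomial probability $P(X = x)$ is approximated by $P(x - 0.5 < Y < x + 0.5)$, and, for example, $P(X \ge x)$ is approximated by $P(Y > x - 0.5)$.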

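Figure 11.14 illustrates this idea: the binomial probability $P(X = x)$, represented by a bar of width 1 centered at $x$, is approximated by the area under the normal density curve between $x - 0.5$ and $x + 0.5$.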
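As a sketch of the continuity correction for a single value, the code below approximates $P(X = 15)$ for $X \sim \text{Binomial}(100, 0.2)$, the setting of the drug example that follows, by $P(14.5 < Y < 15.5)$ with $Y \sim N(20, 16)$. The check $np \ge 5$ and $n(1 - p) \ge 5$ is a common rule of thumb assumed here. Without the correction, the normal probability of a single point is exactly 0.

```python
import numpy as np
from scipy.stats import binom, norm

n, p = 100, 0.2                   # values from the drug example
mu = n * p                        # mean np = 20
sigma = np.sqrt(n * p * (1 - p))  # sd sqrt(np(1 - p)) = 4

# Rule-of-thumb check (assumed: np >= 5 and n(1 - p) >= 5)
print(n * p >= 5 and n * (1 - p) >= 5)  # True

x = 15
exact = binom.pmf(x, n, p)  # exact P(X = 15)
# Continuity correction: the bar at 15 covers [14.5, 15.5] on the continuous scale
approx = norm.cdf(x + 0.5, loc=mu, scale=sigma) - norm.cdf(x - 0.5, loc=mu, scale=sigma)
# No correction: the area over the single point 15 is zero
no_cc = norm.cdf(x, loc=mu, scale=sigma) - norm.cdf(x, loc=mu, scale=sigma)

print(round(exact, 4), round(approx, 4), no_cc)
```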

Example of Normal Approximation (4.26 of OTT)

A large drug company has 100 potential new prescription drugs under clinical test. About 20% of all drugs that reach this stage are eventually licensed for sale. What is the probability that at least 15 of the 100 drugs are eventually licensed?

```r
n <- 100  # number of trials
p <- 0.2  # probability of being licensed for sale

## 1. Exact binomial probability P(X >= 15) = 1 - P(X <= 14)
1 - pbinom(q = 14, size = n, prob = p)
#> [1] 0.9195563

## 2. Normal approximation with continuity correction
## P(X >= 15) is approximated by P(Y >= 14.5) = 1 - P(Y < 14.5)
1 - pnorm(q = 14.5, mean = n * p, sd = sqrt(n * p * (1 - p)))
#> [1] 0.9154343

## 3. Normal approximation with NO continuity correction
## P(X >= 15) is approximated by P(Y >= 15) = 1 - P(Y < 15)
1 - pnorm(q = 15, mean = n * p, sd = sqrt(n * p * (1 - p)))
#> [1] 0.8943502
```

```python
import numpy as np
from scipy.stats import norm, binom

n = 100  # number of trials
p = 0.2  # probability of success

# 1. Exact binomial probability P(X >= 15) = 1 - P(X < 15) = 1 - P(X <= 14)
1 - binom.cdf(14, n, p)
#> 0.9195562788619489

# 2. Normal approximation with continuity correction
1 - norm.cdf(14.5, loc=n * p, scale=np.sqrt(n * p * (1 - p)))
#> 0.9154342776486644

# 3. Normal approximation without continuity correction
1 - norm.cdf(15, loc=n * p, scale=np.sqrt(n * p * (1 - p)))
#> 0.8943502263331446
```

11.8 Exercises

- What percentage of data that follow a standard normal distribution

- The average daily high temperature in June in Chicago is 74

- What is the probability of observing an 81

- How cool are the coldest 15% of the days (days with lowest average high temperature) during June in Chicago?

- What is the probability of observing an 81

- Head lengths of Virginia opossums follow a normal distribution with mean 104 mm and standard deviation 6 mm.

- Compute the

- Which observation (97 mm or 108 mm) is more unusual or less likely to happen than another observation? Why?

- Compute the

Personally, I prefer calling it the Gaussian distribution rather than the normal distribution. Every distribution is unique and has its own properties. Why should the Gaussian distribution be the “normal” one?