14 Point and Interval Estimation

In this chapter we discuss estimation, including point and interval estimation. Point estimation uses a single number computed from the sample to estimate an unknown parameter. Interval estimation quantifies uncertainty: it uses a range of plausible values to indicate where the true unknown parameter may be located.

14.1 Point Estimator

Let me ask you a question.

If you want to estimate the unknown population mean $\mu$ with a single number computed from sample data, which number would you use?

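A point estimate is a value of a point estimator used to estimate a population parameter. Here is the subtle difference: a point estimator is a random variable, a function of the sample data $(X_1, \dots, X_n)$, whereas a point estimate is the single number obtained by plugging the observed data $(x_1, \dots, x_n)$ into that function.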

Back to the question. If we want to estimate the unknown population mean $\mu$, which number should we use? We now have an intuitive answer: the sample mean, $\overline{X}$.

Sample Mean as a Point Estimator

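Let’s see how the sample mean $\overline{X}$ is used as a point estimator for $\mu$. Suppose the population distribution is $N(2, 1)$, so the true mean is $\mu = 2$.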

We collect a sample of size five using `rnorm()`, which generates random numbers from a normal distribution; its first argument is the number of observations to generate.

```r
## Generate data x1, x2, x3, x4, x5, each from distribution N(2, 1)
set.seed(1234)
x_data_1 <- rnorm(n = 5, mean = 2, sd = 1)
```

The following shows the five realized data points and the sample mean.

| x1 | x2 | x3 | x4 | x5 | sample mean |
|---|---|---|---|---|---|
| 0.79 | 2.28 | 3.08 | -0.35 | 2.43 | 1.65 |

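Here we use the sample mean $\overline{x} = 1.65$ as a point estimate of $\mu$. Because the data were simulated from $N(2, 1)$, we know that $\mu = 2$, so this estimate misses the true mean by 0.35.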
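Here is a small Python sketch of the estimator/estimate distinction. The function name `sample_mean_estimator` is hypothetical, chosen for illustration. The estimator is a rule that can be applied to any sample; the estimate is the number it returns for one particular data set. We plug in the rounded values from the table above, then apply the same rule to a fresh simulated sample. NumPy’s generator is seeded differently from R’s `set.seed()`, so the new sample will not match any sample shown in this chapter.

```python
import numpy as np

def sample_mean_estimator(sample):
    """Point estimator of mu: a rule mapping any sample to one number."""
    return float(np.mean(sample))

# Observed (rounded) data from the table: the result is a point estimate.
observed = [0.79, 2.28, 3.08, -0.35, 2.43]
estimate = sample_mean_estimator(observed)
print(round(estimate, 3))  # 1.646, reported as 1.65 after rounding

# The same estimator applied to a new random sample from N(2, 1)
# gives a different estimate: the estimator is a random variable.
rng = np.random.default_rng(1234)
new_sample = rng.normal(loc=2, scale=1, size=5)
print(sample_mean_estimator(new_sample))
```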

As we discussed in the chapter on sampling distributions, because of the random nature of drawing sample values from the population distribution, we do not expect a statistic to equal the corresponding parameter. Most of our sample values may happen to be larger or smaller than the true mean, or we may unluckily get an outlier that drags the sample mean toward it. In such cases, the sample mean will not be close to the true population mean. One way to think about it: each data point carries one piece of information about the unknown population distribution. With a small sample, our data represent only a small part of that distribution, and the gap between the sample mean and the true population mean is like information lost by not observing the rest of the population.

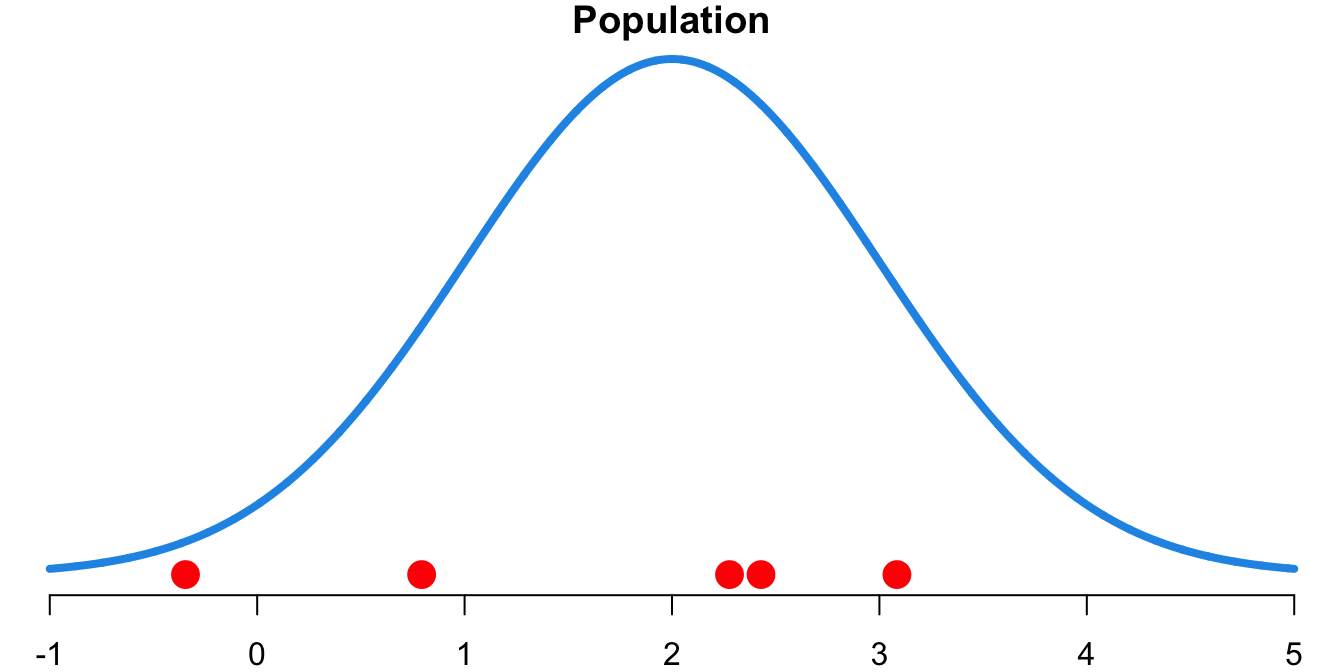

Figure 14.1 shows the sample data and the population distribution, $N(2, 1)$.

Well, we could collect another sample if resources permit. In the simulation, we draw another sample of size five from the same population:

| x1 | x2 | x3 | x4 | x5 | sample mean |
|---|---|---|---|---|---|
| 2.59 | 2.71 | 1.89 | 1.55 | 2.61 | 2.27 |

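The second sample mean, $\overline{x} = 2.27$, differs from the first one, 1.65, and is closer to the true mean $\mu = 2$. Each sample gives a different point estimate because $\overline{X}$ is a random variable.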

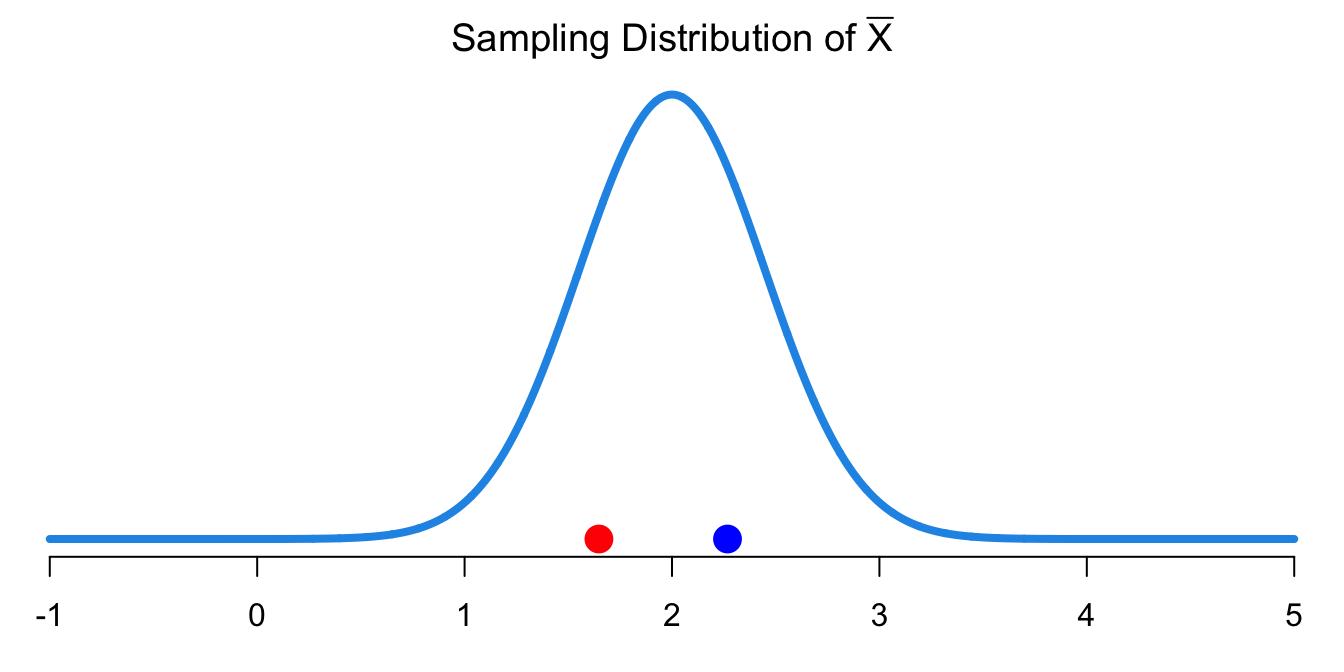

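We have now connected the sampling distribution to statistical inference, in particular to point estimation. Figure 14.2 shows the sampling distribution of $\overline{X}$. Since each sample consists of five independent draws from $N(2, 1)$, $\overline{X} \sim N(2, 1/5)$.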
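A quick Monte Carlo sketch of this idea (our own illustration in Python, not the code behind Figure 14.2): draw many samples of size 5 from $N(2, 1)$, compute each sample mean, and compare the simulated mean and variance of $\overline{X}$ with the theoretical values $\mu = 2$ and $\sigma^2/n = 1/5 = 0.2$. The number of repetitions is an arbitrary choice.

```python
import numpy as np

rng = np.random.default_rng(2024)
mu, sigma, n = 2, 1, 5
n_rep = 10_000  # number of repeated samples (arbitrary)

# Each row is one sample of size n; each row mean is one realization of X-bar.
samples = rng.normal(loc=mu, scale=sigma, size=(n_rep, n))
xbar = samples.mean(axis=1)

print(xbar.mean())        # close to mu = 2
print(xbar.var(ddof=1))   # close to sigma^2 / n = 0.2
```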

Why Point Estimates Are Not Enough

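Since $\overline{X}$ varies from sample to sample, a single point estimate will almost never equal $\mu$ exactly, and by itself it tells us nothing about how far from $\mu$ it might be.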

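If you want to catch a fish, would you prefer to use a spear or a net? I would use a net because I’m not a sharpshooter, and a net covers a large range of possible locations where the fish can be. Due to the variation of $\overline{X}$, it is safer to estimate $\mu$ with a range of plausible values, an interval, rather than a single number.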

14.2 Confidence Intervals

In statistics, a plausible range of values for $\mu$ is called a confidence interval (CI).

How confident we are that the CI covers the parameter is called the level of confidence. The higher the confidence level, the more reliable the CI, because it is more likely to capture the parameter.

Given the same level of confidence, the larger the variation of $\overline{X}$, the wider the CI for $\mu$.

Precision vs. Reliability

With a fixed sample size, the precision and reliability of a confidence interval trade off against each other. A wider interval is more likely to capture the population parameter value, so a more reliable confidence interval is wider than a less reliable one. But what drawbacks come with using a wider interval?
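The trade-off can be seen numerically. The Python sketch below assumes illustrative values $\sigma = 5$ and $n = 16$ (our choice), uses the known-$\sigma$ margin of error $z_{\alpha/2}\,\sigma/\sqrt{n}$ from this chapter, and prints the full interval width at several confidence levels.

```python
import numpy as np
from scipy.stats import norm

sigma, n = 5, 16  # illustrative values; the sample size is held fixed

widths = {}
for level in [0.80, 0.90, 0.95, 0.99]:
    alpha = 1 - level
    z = norm.isf(alpha / 2)                      # critical value z_{alpha/2}
    widths[level] = 2 * z * sigma / np.sqrt(n)   # width of x_bar +/- margin
    print(f"{level:.0%} confidence: width = {widths[level]:.2f}")
```

The widths come out to about 3.20, 4.11, 4.90, and 6.44: with $n$ fixed, more confidence buys reliability at the cost of precision.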

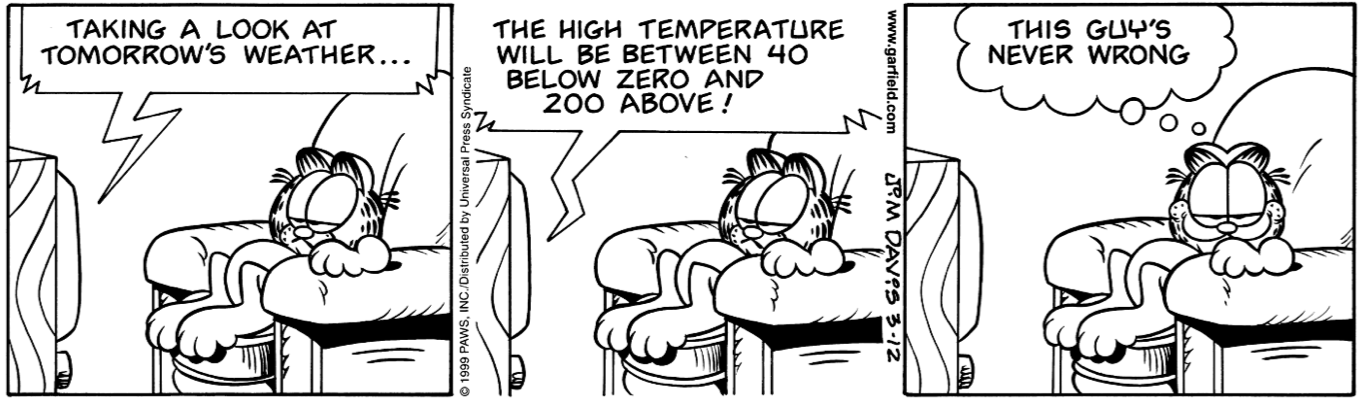

The precision and reliability trade-off is nicely illustrated by the comic in Figure 14.3. I can say I am 100% confident that your Exam 1 score is between 0 and 100. Am I right? Yes. But is that helpful? Absolutely not: the interval includes every possible exam score, so it is too wide to be useful. Such an interval is 100% reliable but has no precision at all.

Narrower intervals are more precise but less reliable, while wider intervals are more reliable but less precise. How can we get the best of both worlds, high precision and high reliability, meaning a short interval with a high level of confidence? We need a larger sample size, provided the sample quality is good. This is easy to say, but collecting more data is often hard in practice.
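Keeping the confidence level fixed at 95% and assuming $\sigma = 5$ for illustration, the sketch below shows how the margin of error shrinks as $n$ grows. Because $n$ enters through $\sqrt{n}$, quadrupling the sample size only halves the margin of error.

```python
import numpy as np
from scipy.stats import norm

sigma = 5                 # illustrative population SD (assumption)
z = norm.isf(0.05 / 2)    # 95% confidence, held fixed

margins = {}
for n in [16, 64, 256]:
    margins[n] = z * sigma / np.sqrt(n)
    print(f"n = {n:>3}: margin of error = {margins[n]:.3f}")
```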

A Confidence Interval Is for a Parameter

A confidence interval is for a parameter, NOT a statistic. Remember, a confidence interval is a way of estimating an unknown parameter. For example, we use the sample mean to form a confidence interval for the population mean.

We NEVER say “the confidence interval of the sample mean, $\overline{X}$.” We say “the confidence interval for the population mean, $\mu$.”

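In general, a confidence interval for $\mu$ has the form $\overline{x} \pm m = (\overline{x} - m, \overline{x} + m)$, where $m$ is the margin of error.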

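The margin of error $m$ is chosen so that the interval reaches a desired level of confidence. Formally, for $0 < \alpha < 1$, a $100(1-\alpha)\%$ confidence interval for $\mu$ is a random interval $(L, U)$ computed from the sample such that $P(L \le \mu \le U) = 1 - \alpha$, where $1 - \alpha$ is the confidence level.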

The confidence level $1 - \alpha$ can be any number between zero and one. Common choices include 90% ($\alpha = 0.10$), 95% ($\alpha = 0.05$), and 99% ($\alpha = 0.01$).

- High reliability and low precision: I am 100% confident that the mean height of Marquette students is between 3’0” and 8’0”.
  - Duh…🤷
- Low reliability and high precision: I am 20% confident that the mean height of Marquette students is between 5’6” and 5’7”.
  - This is far from the truth… 🙅

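We’ve learned the general form of a confidence interval for $\mu$. Next, we find the margin of error $m$ when the population standard deviation $\sigma$ is known.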

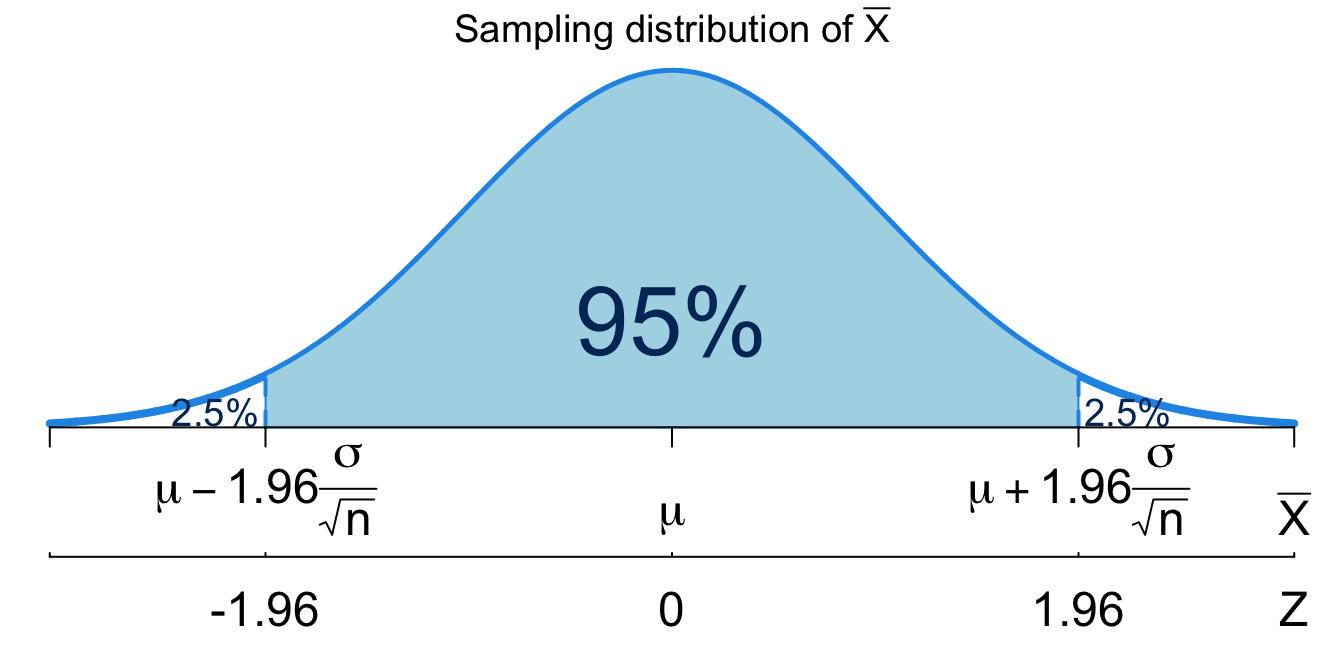

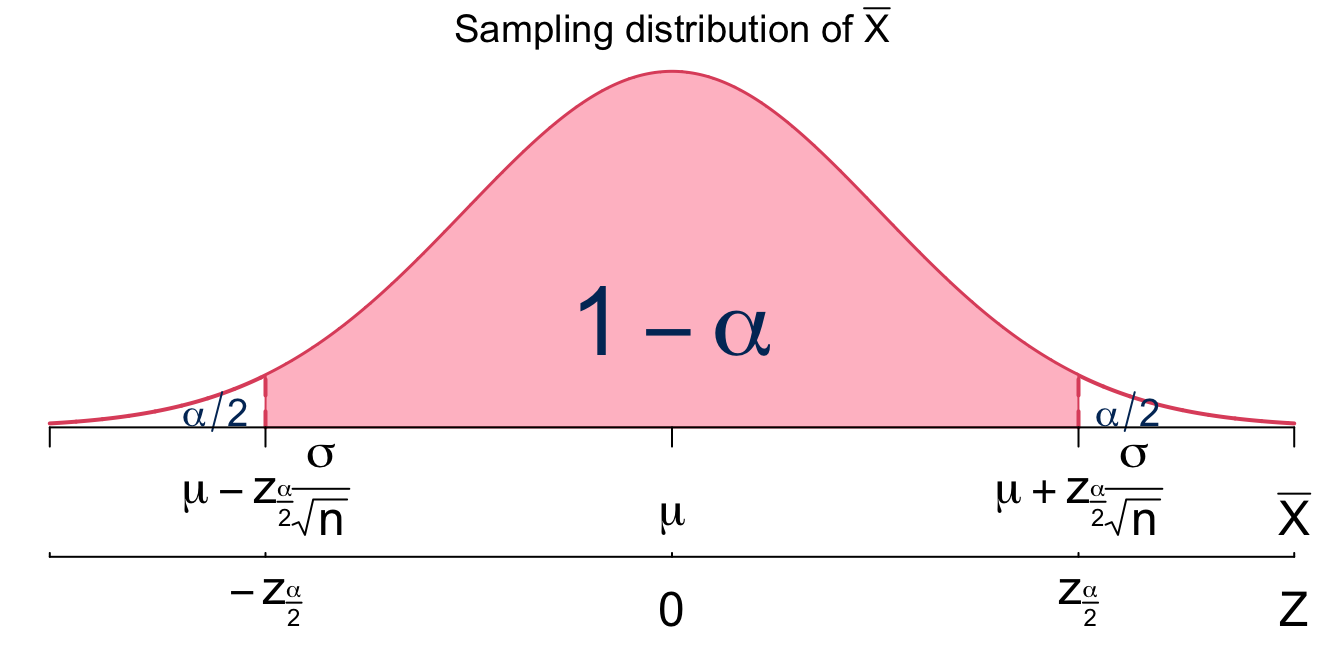

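Suppose we want to obtain the 95% confidence interval for $\mu$ when $\sigma$ is known.

Here the confidence level is $1 - \alpha = 0.95$, so $\alpha = 0.05$, and $z_{\alpha/2} = z_{0.025} \approx 1.96$ is the value that cuts off an upper-tail area of 0.025 under the standard normal curve.

We learned that if the population is normal (or $n$ is large), $\overline{X} \sim N(\mu, \sigma^2/n)$, so

$$P\left(\mu - 1.96\frac{\sigma}{\sqrt{n}} \le \overline{X} \le \mu + 1.96\frac{\sigma}{\sqrt{n}}\right) = 0.95.$$

Is $\left(\mu - 1.96\frac{\sigma}{\sqrt{n}}, \ \mu + 1.96\frac{\sigma}{\sqrt{n}}\right)$ our confidence interval?

The answer is No ❌! Remember that we don’t know $\mu$, so we cannot compute this interval from the data.

We can rearrange the inequality inside the probability so that $\mu$ is in the middle:

$$P\left(\overline{X} - 1.96\frac{\sigma}{\sqrt{n}} \le \mu \le \overline{X} + 1.96\frac{\sigma}{\sqrt{n}}\right) = 0.95.$$

We are done! With sample data of size $n$ and sample mean $\overline{x}$, the 95% confidence interval for $\mu$ is

$$\left(\overline{x} - 1.96\frac{\sigma}{\sqrt{n}}, \ \overline{x} + 1.96\frac{\sigma}{\sqrt{n}}\right).$$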
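The statement $P(\mu - 1.96\sigma/\sqrt{n} \le \overline{X} \le \mu + 1.96\sigma/\sqrt{n}) = 0.95$ and its rearranged form describe the same event, so both have probability 0.95. A small numeric check in Python, assuming illustrative values $\mu = 120$, $\sigma = 5$, $n = 16$ and a normal population (so $\overline{X}$ can be simulated directly from $N(\mu, \sigma^2/n)$):

```python
import numpy as np
from scipy.stats import norm

mu, sigma, n = 120, 5, 16        # illustrative values only
se = sigma / np.sqrt(n)          # standard deviation of X-bar
z = norm.isf(0.025)              # about 1.96

# P(mu - z*se <= X-bar <= mu + z*se) with X-bar ~ N(mu, se^2)
p_xbar = norm.cdf(mu + z * se, loc=mu, scale=se) - norm.cdf(mu - z * se, loc=mu, scale=se)
print(p_xbar)  # 0.95 up to floating-point error

# Rearranged form: how often does X-bar +/- z*se contain mu?
rng = np.random.default_rng(1)
xbar = rng.normal(loc=mu, scale=se, size=100_000)
covered = (xbar - z * se <= mu) & (mu <= xbar + z * se)
print(covered.mean())  # close to 0.95
```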

14.3 Confidence Intervals for $\mu$ When $\sigma$ Is Known

We just obtained the 95% confidence interval for $\mu$ when $\sigma$ is known.

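The requirements for estimating $\mu$ when $\sigma$ is known are:

- 👉 The sample should be a random sample, such that all data $X_1, \dots, X_n$ are independent and drawn from the same population.
- 👉 The population standard deviation, $\sigma$, is known.
- 👉 The population is either normally distributed, or the sample size $n$ is large enough for the central limit theorem to apply (or both).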

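The general $100(1-\alpha)\%$ confidence interval for $\mu$ when $\sigma$ is known is

$$\overline{x} \pm z_{\alpha/2}\frac{\sigma}{\sqrt{n}},$$

where $z_{\alpha/2}$ is the standard normal critical value with upper-tail area $\alpha/2$.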

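To sum up, here is the procedure for constructing a $100(1-\alpha)\%$ confidence interval for $\mu$ when $\sigma$ is known:

1. Check that the requirements are satisfied.
2. Decide $\alpha$, that is, the confidence level $1 - \alpha$.
3. Find the critical value, $z_{\alpha/2}$.
4. Evaluate the margin of error, $m = z_{\alpha/2}\frac{\sigma}{\sqrt{n}}$.
5. Construct the $100(1-\alpha)\%$ CI, $\overline{x} \pm m$.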
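The five steps translate almost line by line into code. Below is a minimal Python sketch; `z_interval` is a hypothetical helper name, and step 1 (checking the requirements) is left to the analyst because it cannot be verified from summary numbers alone.

```python
import math
from scipy.stats import norm

def z_interval(x_bar, sigma, n, conf_level=0.95):
    """CI for mu when sigma is known (hypothetical helper).

    Step 1 (random sample, known sigma, normal population or large n)
    must be checked by the analyst before calling this function.
    """
    alpha = 1 - conf_level                  # Step 2: decide alpha
    z_crit = norm.isf(alpha / 2)            # Step 3: critical value z_{alpha/2}
    margin = z_crit * sigma / math.sqrt(n)  # Step 4: margin of error
    return (x_bar - margin, x_bar + margin) # Step 5: construct the CI

print(z_interval(121.5, 5, 16))  # roughly (119.05, 123.95)
```

With the numbers from the SBP example below, the helper gives roughly (119.05, 123.95).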

Example

Suppose we want to know the mean systolic blood pressure (SBP) of a population. Assume that the population distribution is normal and has a standard deviation of 5 mmHg. We have a random sample of 16 subjects from this population with a mean of 121.5 mmHg. Estimate the mean SBP with a 95% confidence interval.

We construct the confidence interval step by step using the procedure.

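- Requirements:
  - Normality is assumed, $\sigma = 5$ is known, and we have a random sample of size $n = 16$.
- Decide $\alpha$:
  - $\alpha = 0.05$.
- Find the critical value $z_{\alpha/2}$:
  - $z_{0.025} \approx 1.96$.
- Evaluate the margin of error $m$:
  - $m = z_{0.025}\frac{\sigma}{\sqrt{n}} = 1.96 \times \frac{5}{\sqrt{16}} = 2.45$.
- Construct the 95% CI:
  - The 95% CI for the mean SBP is $\overline{x} \pm m = 121.5 \pm 2.45 = (119.05, 123.95)$ mmHg.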

Below is a demonstration of how to find the 95% CI for SBP in R and Python.

```r
## save all information we have
alpha <- 0.05
n <- 16
x_bar <- 121.5
sig <- 5

## 95% CI
## z-critical value
(cri_z <- qnorm(p = alpha / 2, lower.tail = FALSE))
# [1] 1.96

## margin of error
(m_z <- cri_z * (sig / sqrt(n)))
# [1] 2.45

## 95% CI for mu when sigma is known
x_bar + c(-1, 1) * m_z
# [1] 119 124
```

```python
import numpy as np
from scipy.stats import norm
import matplotlib.pyplot as plt

# Given values
alpha = 0.05
n = 16
x_bar = 121.5
sig = 5  # Population standard deviation

# z-critical value
cri_z = norm.ppf(1 - alpha / 2)
cri_z
# 1.959963984540054
cri_z = norm.isf(alpha / 2)  ## also works
cri_z
# 1.9599639845400545

# Margin of error
m_z = cri_z * (sig / np.sqrt(n))
m_z
# 2.4499549806750682

# 95% Confidence Interval for mu
x_bar + np.array([-1, 1]) * m_z
# array([119.05004502, 123.94995498])
```

Interpreting the Confidence Interval

We now know how to construct a confidence interval. But what exactly is it, and how do we interpret it correctly? This matters because confidence intervals are often misinterpreted and misused in statistical analysis. Don’t blame yourself if the meaning is hard to grasp: the concept is not intuitive, and a confidence interval does not directly answer the question we usually care about regarding the unknown parameter. The confidence interval is a concept from the classical, or frequentist, point of view. Another approach to interval estimation is the so-called credible interval, which is based on the Bayesian philosophy. We will discuss their differences in detail in Chapter 22.

Back to the 95% confidence interval for the mean SBP. The following statements are wrong. Please do not interpret the interval this way.

WRONG ❌ “There is a 95% chance/probability that the true population mean will fall between 119.1 mmHg and 123.9 mmHg.”

WRONG ❌ “The probability that the true population mean falls between 119.1 mmHg and 123.9 mmHg is 95%.”

Although these statements are often what we want to say, they are wrong. Here is why. The sample mean is a random variable with a sampling distribution, so it makes sense to compute the probability that it falls in some interval. The population mean is unknown but FIXED, so we cannot assign or compute a probability for it: once the interval is computed, it either contains $\mu$ or it does not. If we were using Bayesian inference (Chapter 22), a different inference method, we could compute a probability associated with $\mu$, because Bayesian inference treats $\mu$ as a random quantity.

So how do we correctly interpret a confidence interval? Here is the answer.

“We are 95% confident that the mean SBP lies between 119.1 mmHg and 123.9 mmHg.”

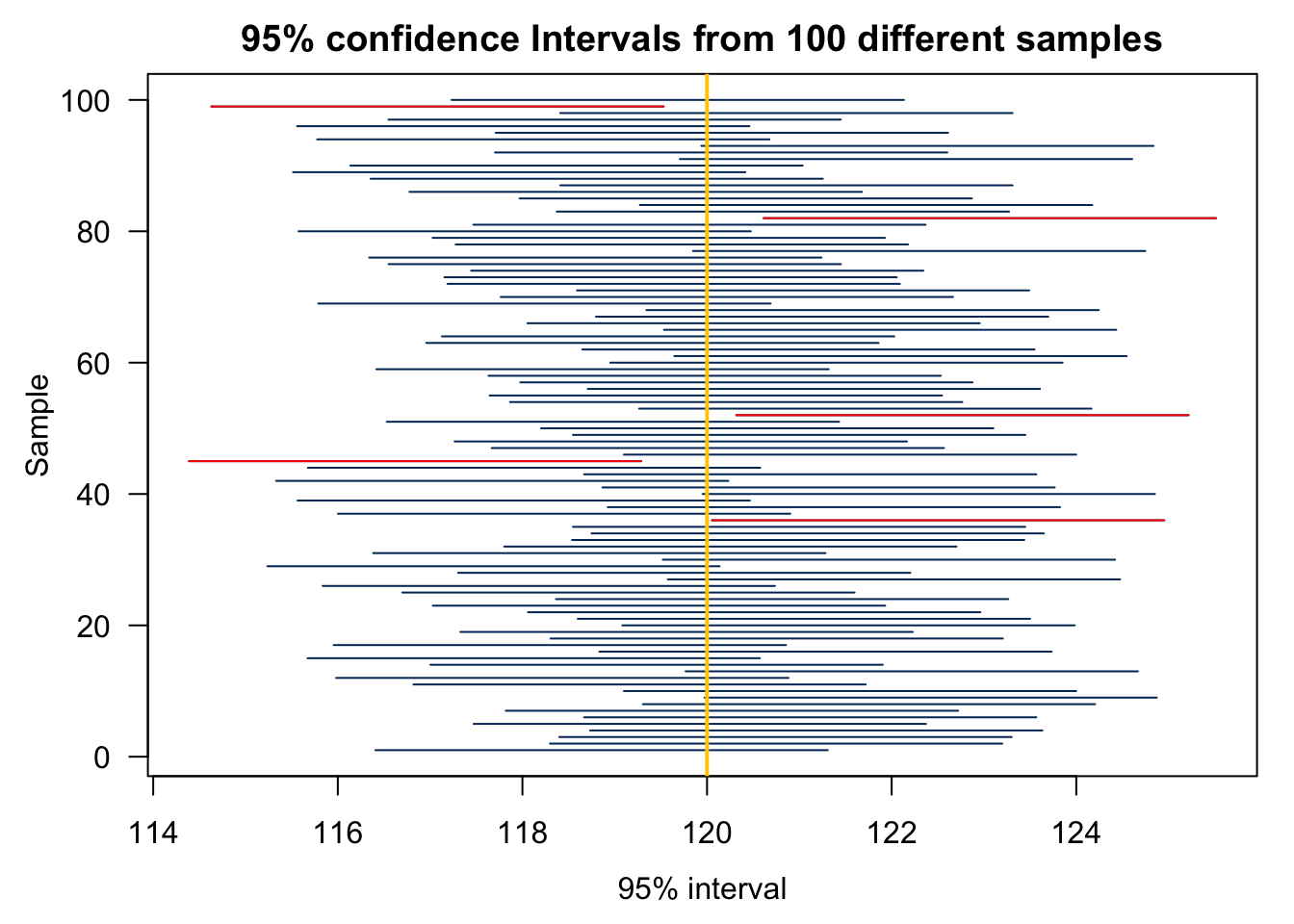

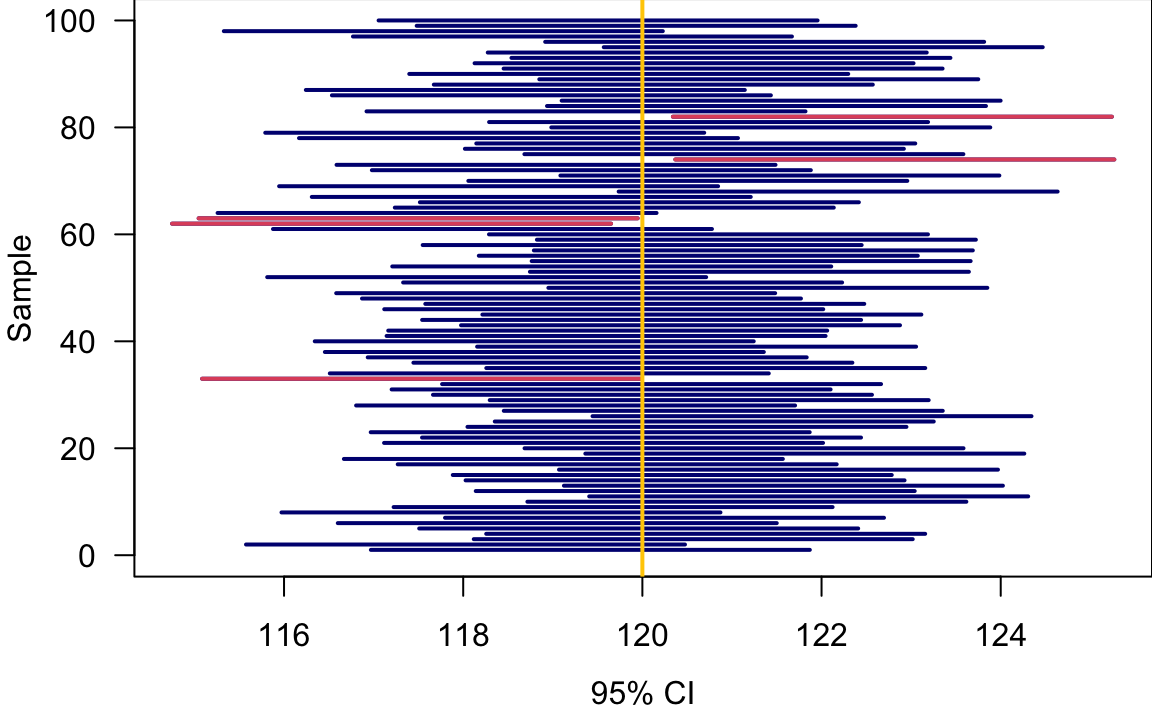

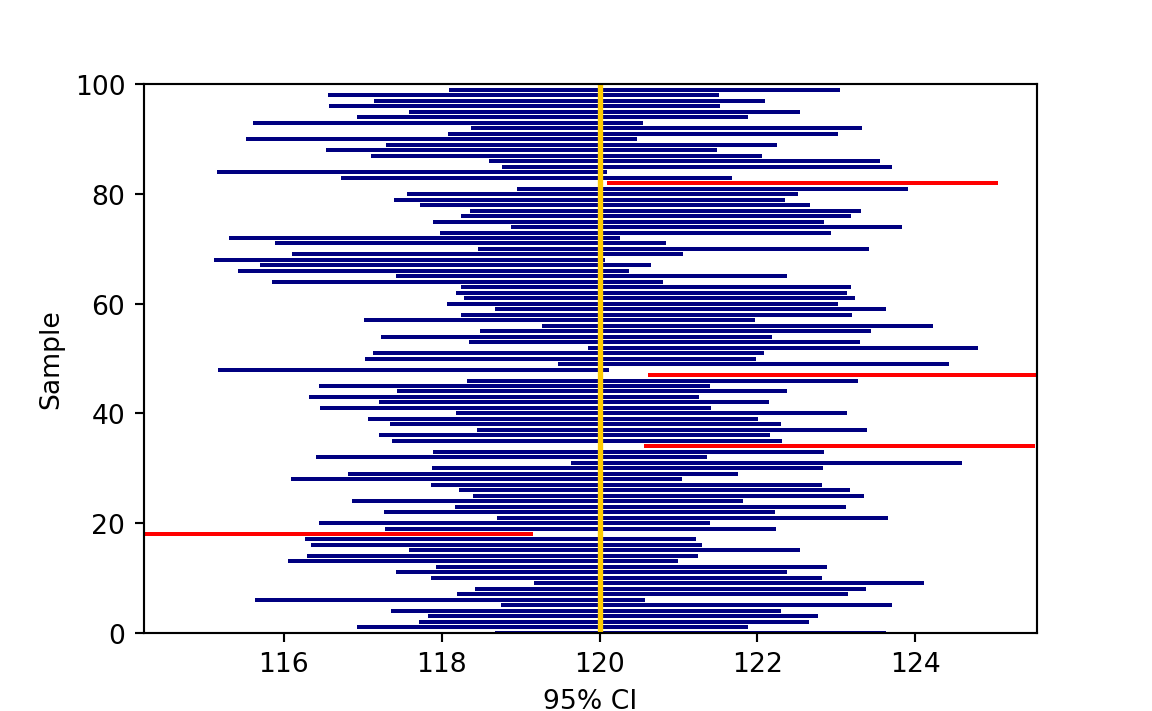

But what does “95% confident” really mean? It means that if we were able to collect our data many times and build the corresponding CIs, we would expect about 95% of those intervals to contain the true population parameter, which in this case is the mean systolic blood pressure.
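This long-run interpretation can be checked by simulation. The Python sketch below assumes, as in the chapter’s 100-interval simulation, a $N(120, 5^2)$ population with $n = 16$ and $\sigma$ known. It repeats the experiment many times, builds the 95% interval each time, and records the fraction of intervals that contain $\mu$.

```python
import numpy as np
from scipy.stats import norm

mu, sigma, n = 120, 5, 16   # "true" values, known only because we simulate
n_rep = 20_000              # number of repeated data sets
rng = np.random.default_rng(2024)

data = rng.normal(loc=mu, scale=sigma, size=(n_rep, n))
xbar = data.mean(axis=1)
margin = norm.isf(0.025) * sigma / np.sqrt(n)

contains_mu = (xbar - margin <= mu) & (mu <= xbar + margin)
coverage = contains_mu.mean()
print(coverage)  # close to 0.95
```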

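Remember that $\mu$ is fixed while the interval $\overline{X} \pm m$ is random: the interval changes from sample to sample, but $\mu$ does not.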

Please keep the following ideas in mind.

- A 95% CI does not mean that if 100 data sets are collected, there will be exactly 95 intervals capturing $\mu$. The number of intervals that capture $\mu$ is itself random; we only expect about 95 of them to do so.
- We never know with certainty that 95% of the intervals, or any single interval for that matter, contain the true population parameter, because we never know the true value of the parameter.
- In reality, we usually have only one data set and cannot collect more data. We have no idea whether our 95% confidence interval captures the unknown parameter or not. We are only “95% confident”.
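To see that the count is random, the Python sketch below repeats the whole 100-interval experiment five times under the same assumed setting ($N(120, 5^2)$ population, $n = 16$, $\sigma$ known) and records how many of the 100 intervals capture $\mu$ each time. The counts hover around 95 but change from run to run.

```python
import numpy as np
from scipy.stats import norm

mu, sigma, n, M = 120, 5, 16, 100   # M = 100 intervals per experiment
margin = norm.isf(0.025) * sigma / np.sqrt(n)
rng = np.random.default_rng(7)

counts = []
for _ in range(5):                  # repeat the 100-interval experiment 5 times
    xbar = rng.normal(loc=mu, scale=sigma, size=(M, n)).mean(axis=1)
    counts.append(int(np.sum((xbar - margin <= mu) & (mu <= xbar + margin))))

print(counts)  # each count is near 95, but the counts vary between runs
```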


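The procedure for generating 100 confidence intervals for $\mu$ (with $\mu = 120$, $\sigma = 5$, and $n = 16$ in the code below) is as follows.

Algorithm

1. Generate 100 samples, each of size $n$, from $N(\mu, \sigma^2)$.
2. Obtain the 100 sample means $\overline{x}_1, \dots, \overline{x}_{100}$.
3. For each $\overline{x}_i$, construct the 95% CI $\overline{x}_i \pm z_{0.025}\frac{\sigma}{\sqrt{n}}$ and check whether it contains $\mu$.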

```r
## true parameters (known only because we simulate)
mu <- 120; sig <- 5
## alpha, number of samples, sample size
al <- 0.05; M <- 100; n <- 16
set.seed(2024)
## each column is one sample of size n
x_rep <- replicate(M, rnorm(n, mu, sig))
xbar_rep <- apply(x_rep, 2, mean)
## margin of error (sigma known)
E <- qnorm(p = 1 - al / 2) * sig / sqrt(n)
ci_lwr <- xbar_rep - E
ci_upr <- xbar_rep + E
plot(NULL, xlim = range(c(ci_lwr, ci_upr)), ylim = c(0, M),
     xlab = "95% CI", ylab = "Sample", las = 1)
## flag intervals that miss mu
mu_out <- (mu < ci_lwr | mu > ci_upr)
segments(x0 = ci_lwr, y0 = 1:M, x1 = ci_upr, col = "navy", lwd = 2)
## redraw the intervals that miss mu in red
segments(x0 = ci_lwr[mu_out], y0 = (1:M)[mu_out], x1 = ci_upr[mu_out],
         col = 2, lwd = 2)
## vertical line at the true mean
abline(v = mu, col = "#FFCC00", lwd = 2)
```

```python
import numpy as np
from scipy.stats import norm
import matplotlib.pyplot as plt

mu = 120   # true mean (known only because we simulate)
sig = 5
al = 0.05
M = 100    # number of samples / intervals
n = 16     # sample size

np.random.seed(2024)
# each row is one sample of size n
x_rep = np.random.normal(loc=mu, scale=sig, size=(M, n))
xbar_rep = np.mean(x_rep, axis=1)
# margin of error (sigma known); uses al defined above
E = norm.ppf(1 - al / 2) * sig / np.sqrt(n)
ci_lwr = xbar_rep - E
ci_upr = xbar_rep + E

for i in range(M):
    # red if the interval misses mu, navy otherwise
    col = 'red' if mu < ci_lwr[i] or mu > ci_upr[i] else 'navy'
    plt.plot([ci_lwr[i], ci_upr[i]], [i, i], color=col, lw=1.5)

plt.xlim([min(ci_lwr), max(ci_upr)])
plt.ylim([0, M])
plt.xlabel("95% CI")
plt.ylabel("Sample")
plt.axvline(mu, color="#FFCC00", lw=2)  # true mean
plt.show()
```

14.3.1 Reducing margin of error and determining sample size*

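We learned that the margin of error is $m = z_{\alpha/2}\frac{\sigma}{\sqrt{n}}$. To reduce the margin of error, we can

- Reduce $\sigma$.
- Increase $n$.
- Reduce $z_{\alpha/2}$, that is, lower the confidence level $1 - \alpha$.

However, given a confidence level $1 - \alpha$, the critical value $z_{\alpha/2}$ is fixed, and $\sigma$ is a population characteristic we usually cannot control, so in practice we reduce the margin of error by increasing the sample size $n$.

What we can do is rewrite the margin of error formula and express $n$ in terms of $m$:

$$n = \left(\frac{z_{\alpha/2}\,\sigma}{m}\right)^2.$$

Clearly, to get a desired margin of error, say $m_0$, we need a sample size of at least $\left(\frac{z_{\alpha/2}\,\sigma}{m_0}\right)^2$, rounded up to the next integer.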

Example

A state tax advisory board wants to estimate the mean household income with a margin of error of $1,000 at the 99% confidence level. Assume that the population standard deviation is $10,000. How many households do they need to sample?

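With $z_{0.005} \approx 2.576$, $n = \left(\frac{2.576 \times 10{,}000}{1{,}000}\right)^2 \approx 663.5$, so they need a sample size of 664.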
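The sample-size formula is easy to wrap in a helper. This Python sketch (`required_sample_size` is a hypothetical name) rounds up with `math.ceil`, since rounding down would give a margin of error slightly larger than requested. With the tax board’s inputs it returns 664.

```python
import math
from scipy.stats import norm

def required_sample_size(sigma, margin, conf_level):
    """Smallest n with z_{alpha/2} * sigma / sqrt(n) <= margin (hypothetical helper)."""
    z = norm.isf((1 - conf_level) / 2)   # critical value z_{alpha/2}
    return math.ceil((z * sigma / margin) ** 2)

n_needed = required_sample_size(sigma=10_000, margin=1_000, conf_level=0.99)
print(n_needed)  # 664
```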

14.4 Confidence Intervals for $\mu$ When $\sigma$ Is Unknown

We complete the discussion of confidence intervals for $\mu$ with the case in which $\sigma$ is unknown, which is the usual situation in practice.

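When $\sigma$ is unknown, the requirements are

- A random sample.
- A population that is normally distributed and/or a large sample size $n$.

The confidence interval for the case when $\sigma$ is known, $\overline{x} \pm z_{\alpha/2}\frac{\sigma}{\sqrt{n}}$, cannot be computed because $\sigma$ is unknown.

When $\sigma$ is unknown, we estimate it with the sample standard deviation $s$. Replacing $\sigma$ with $S$, however, changes the distribution of the standardized sample mean: $\frac{\overline{X} - \mu}{S/\sqrt{n}}$ no longer follows $N(0, 1)$ but the Student’s t distribution.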

Student’s t Distribution

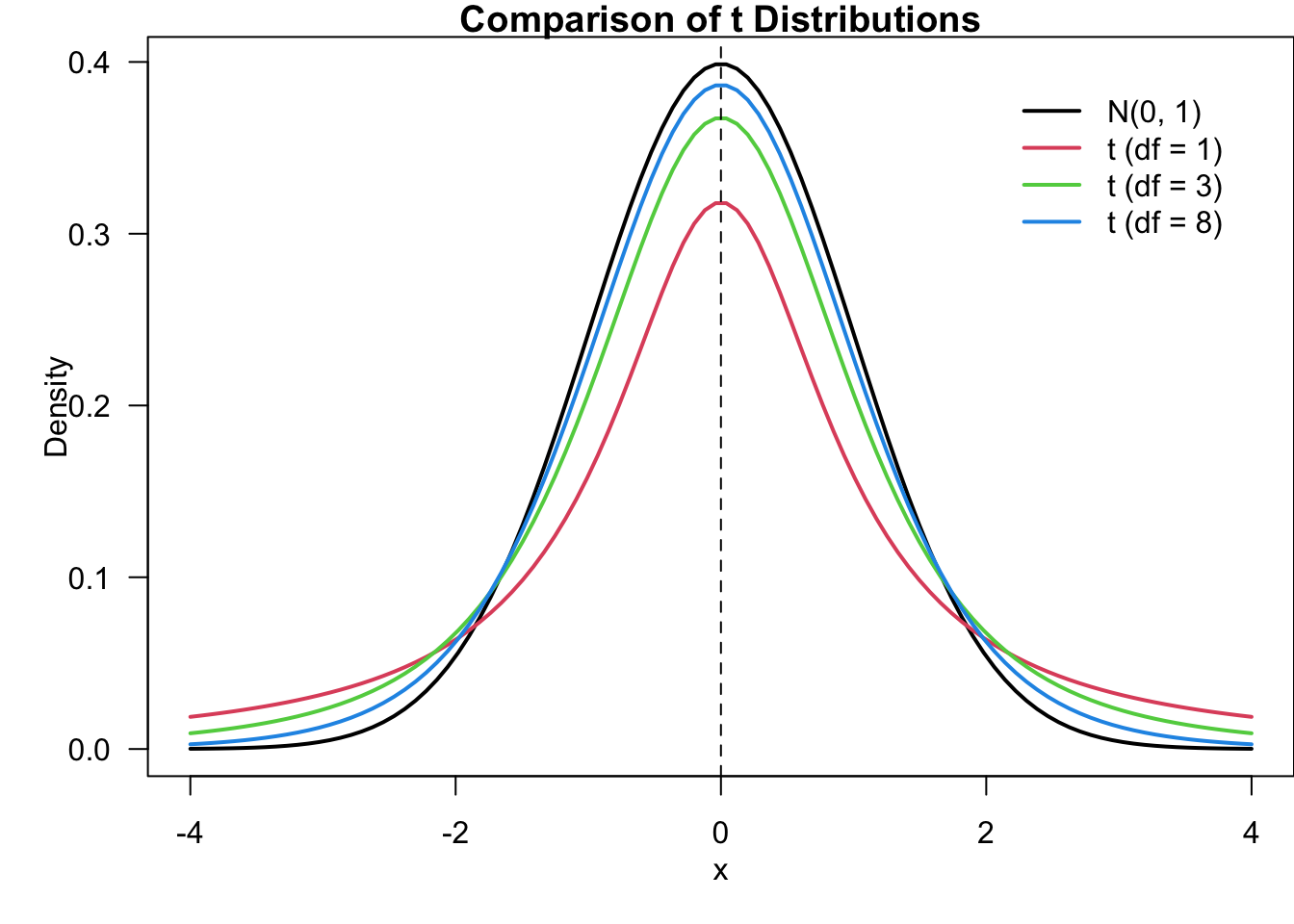

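If the population is normally distributed or $n$ is large, then

$$T = \frac{\overline{X} - \mu}{S/\sqrt{n}} \sim t_{n-1},$$

the Student’s t distribution with $n - 1$ degrees of freedom (exactly for a normal population, approximately otherwise).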

Here the degrees of freedom, $n - 1$, is the parameter of the Student’s t distribution.
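Why not keep using $z_{\alpha/2}$ after replacing $\sigma$ with $s$? A simulation sketch in Python, assuming a normal population and a small sample ($n = 5$, our choice): the statistic $T$ lands outside $\pm 1.96$ far more often than 5% of the time, close to the tail probability from $t_{4}$.

```python
import numpy as np
from scipy.stats import t

mu, sigma, n = 120, 5, 5   # small n makes the difference visible
n_rep = 100_000
rng = np.random.default_rng(42)

data = rng.normal(loc=mu, scale=sigma, size=(n_rep, n))
xbar = data.mean(axis=1)
s = data.std(axis=1, ddof=1)             # sample SD with n - 1 divisor
t_stat = (xbar - mu) / (s / np.sqrt(n))

frac_outside = np.mean(np.abs(t_stat) > 1.96)
print(frac_outside)               # well above 0.05
print(2 * t.sf(1.96, df=n - 1))   # t_{n-1} tail probability, about 0.12
```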

Properties

The Student’s t distribution, as shown in Figure 14.7, looks similar to the standard normal distribution, but they are different. Some properties of the Student’s t distribution are listed below.

- For any degrees of freedom, the Student’s t distribution is symmetric about 0 and bell-shaped like $N(0, 1)$.
- For any degrees of freedom, the Student’s t distribution has more variability than $N(0, 1)$; its tails are heavier.
- The Student’s t distribution has less variability for larger degrees of freedom (sample size).
- As the degrees of freedom $n - 1 \to \infty$, the Student’s t distribution approaches $N(0, 1)$.
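These properties can be checked with `scipy.stats.t`. In this sketch, the variance of the t distribution for $df > 2$ is $df/(df-2)$, always larger than 1 (the variance of $N(0, 1)$) and shrinking toward 1 as $df$ grows; the 0.975 quantile likewise decreases toward $z_{0.025} \approx 1.96$.

```python
from scipy.stats import norm, t

dfs = [3, 5, 15, 30, 1000]
variances = [t.var(df) for df in dfs]          # equals df / (df - 2) for df > 2
quantiles = [t.ppf(0.975, df) for df in dfs]   # upper 2.5% critical values

for df, v, q in zip(dfs, variances, quantiles):
    print(f"df = {df:>4}: variance = {v:.3f}, t_0.025 = {q:.3f}")
print(f"N(0, 1):   variance = {norm.var():.3f}, z_0.025 = {norm.ppf(0.975):.3f}")
```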

Critical Values of $t_{n-1}$

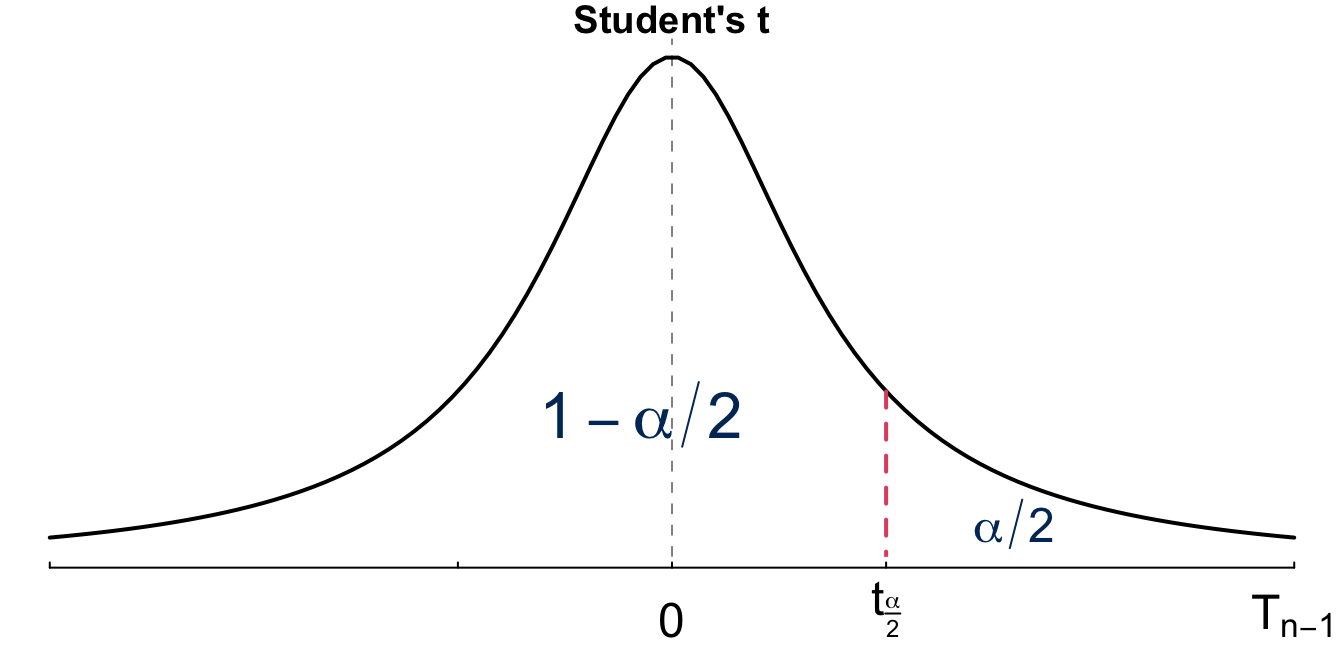

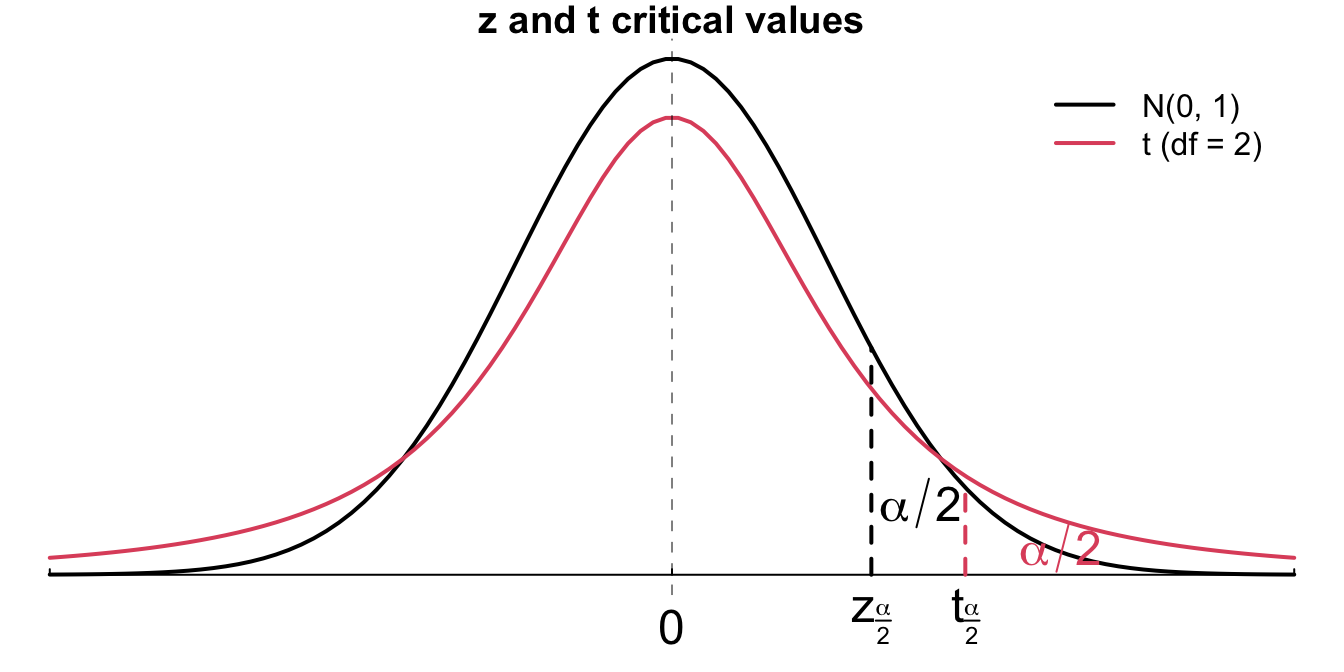

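In the CI formula with known $\sigma$, we use the critical value $z_{\alpha/2}$. With unknown $\sigma$, we use $t_{\alpha/2, n-1}$, the value that cuts off an upper-tail area of $\alpha/2$ under the $t_{n-1}$ distribution. Is $t_{\alpha/2, n-1}$ larger or smaller than $z_{\alpha/2}$?

You should be able to answer this question from the fact that, for any degrees of freedom, the Student’s t distribution has more variability than $N(0, 1)$: its tails are heavier, so we must go farther from 0 to leave an upper-tail area of $\alpha/2$. Therefore $t_{\alpha/2, n-1} > z_{\alpha/2}$.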

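The table below shows the critical values $t_{\alpha/2, df}$ for several degrees of freedom and confidence levels, together with $z_{\alpha/2}$.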

| Level | t (df = 5) | t (df = 15) | t (df = 30) | t (df = 1000) | t (df = ∞) | z |
|---|---|---|---|---|---|---|
| 90% | 2.02 | 1.75 | 1.70 | 1.65 | 1.64 | 1.64 |
| 95% | 2.57 | 2.13 | 2.04 | 1.96 | 1.96 | 1.96 |
| 99% | 4.03 | 2.95 | 2.75 | 2.58 | 2.58 | 2.58 |
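The values in the table can be reproduced with `scipy.stats`. In this sketch, `isf` returns the point with the given upper-tail area, so `t.isf(alpha / 2, df)` is $t_{\alpha/2, df}$ and `norm.isf(alpha / 2)` is $z_{\alpha/2}$. The df = ∞ column is omitted because it coincides with the $z$ column.

```python
from scipy.stats import norm, t

levels = [0.90, 0.95, 0.99]
dfs = [5, 15, 30, 1000]

table = {}
for level in levels:
    upper = (1 - level) / 2                                      # upper-tail area alpha/2
    row = [t.isf(upper, df) for df in dfs] + [norm.isf(upper)]   # t values, then z
    table[level] = row
    print(f"{level:.0%}", " ".join(f"{v:.2f}" for v in row))
```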

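We now have everything we need to construct the $100(1-\alpha)\%$ confidence interval for $\mu$ when $\sigma$ is unknown:

$$\overline{x} \pm t_{\alpha/2, n-1}\frac{s}{\sqrt{n}}.$$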

The interval has the same form as before: the sample mean plus and minus the margin of error. Compared with the interval for known $\sigma$, we replace $z_{\alpha/2}$ with $t_{\alpha/2, n-1}$ and $\sigma$ with $s$.

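Given the same confidence level $1 - \alpha$, $t_{\alpha/2, n-1} > z_{\alpha/2}$, so if $s$ happens to equal $\sigma$, the interval with unknown $\sigma$ is wider. The extra width reflects the additional uncertainty from estimating $\sigma$ with $s$.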

Back to the systolic blood pressure (SBP) example, now assuming $\sigma$ is unknown and the sample standard deviation is $s = 5$ mmHg. In R, we use `qt()` to find a quantile or critical value from the Student’s t distribution. The first argument is still the given probability, and we must also specify the degrees of freedom; otherwise R cannot determine which t distribution to use.

```r
alpha <- 0.05
n <- 16
x_bar <- 121.5
s <- 5 ## sigma is unknown and s = 5

## t-critical value
(cri_t <- qt(p = alpha / 2, df = n - 1, lower.tail = FALSE))
# [1] 2.13

## margin of error
(m_t <- cri_t * (s / sqrt(n)))
# [1] 2.66

## 95% CI for mu when sigma is unknown
x_bar + c(-1, 1) * m_t
# [1] 119 124
```

In Python, we use `t.ppf()` (or `t.isf()`) from `scipy.stats` to find a quantile or critical value from the Student’s t distribution. The first argument is again the given probability, and we must specify the degrees of freedom; otherwise Python cannot determine which t distribution to use.

```python
import numpy as np
from scipy.stats import t

alpha = 0.05
n = 16
x_bar = 121.5
s = 5  # Sample standard deviation (sigma unknown)

## t-critical value
cri_t = t.ppf(1 - alpha/2, df=n-1)
cri_t
# 2.131449545559323

## margin of error
m_t = cri_t * (s / np.sqrt(n))
m_t
# 2.664311931949154

## 95% CI for mu when sigma is unknown
x_bar + np.array([-1, 1]) * m_t
# array([118.83568807, 124.16431193])
```

14.5 Summary

To conclude this chapter, the table below summarizes the confidence intervals for $\mu$.

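| | Numerical Data, $\sigma$ known | Numerical Data, $\sigma$ unknown |
|---|---|---|
| Parameter of Interest | Population Mean $\mu$ | Population Mean $\mu$ |
| Confidence Interval | $\overline{x} \pm z_{\alpha/2}\frac{\sigma}{\sqrt{n}}$ | $\overline{x} \pm t_{\alpha/2, n-1}\frac{s}{\sqrt{n}}$ |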

Remember to check that the sample is random and that the population is normally distributed and/or the sample size $n$ is large.
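The summary table can be condensed into one function. Below is a minimal Python sketch under the chapter’s assumptions; `mean_ci` and its `sigma_known` flag are hypothetical names, and the code does not check the requirements above.

```python
import math
from scipy.stats import norm, t

def mean_ci(x_bar, sd, n, conf_level=0.95, sigma_known=False):
    """CI for mu following the summary table (hypothetical helper).

    If sigma_known, `sd` is sigma and the z critical value is used;
    otherwise `sd` is the sample SD s and t with n - 1 df is used.
    Requirements (random sample; normal population and/or large n)
    are NOT checked here.
    """
    upper = (1 - conf_level) / 2
    crit = norm.isf(upper) if sigma_known else t.isf(upper, df=n - 1)
    margin = crit * sd / math.sqrt(n)
    return (x_bar - margin, x_bar + margin)

print(mean_ci(121.5, 5, 16, sigma_known=True))    # about (119.05, 123.95)
print(mean_ci(121.5, 5, 16, sigma_known=False))   # about (118.84, 124.16)
```

Using the SBP numbers, the $t$-based interval is wider than the $z$-based one, as expected.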

14.6 Exercises

- Here are summary statistics for randomly selected weights of newborn boys:
  - (a) Compute a 95% confidence interval for
  - (b) Is the result in (a) very different from the 95% confidence interval if
  - (c) Compute a 95% confidence interval for
- A 95% confidence interval for a population mean
- A market researcher wants to evaluate car insurance savings at a competing company. Based on past studies, he assumes that the standard deviation of savings is $95. He wants to collect data such that the margin of error is no more than $12 at a 95% confidence level. How large a sample should he collect?
- The 95% confidence interval for the mean rent of one-bedroom apartments in Chicago was calculated as ($2400, $3200).
  - (a) Interpret the meaning of the 95% interval.
  - (b) Find the sample mean rent from the interval.
- The standard normal random variable