17 Comparing Two Population Means

17.1 Introduction

Why Compare Two Populations?

We’ve discussed estimation (Chapter 14) and hypothesis testing (Chapter 16) for a single population mean $\mu$. In practice, we often want to compare the means of two populations. For example:

- Comparing the mean annual income of the male and female groups.

- Testing whether a weight-loss diet is effective by comparing a placebo sample with a new-diet sample.

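If these two samples are drawn from two target populations with means $\mu_1$ and $\mu_2$, we can compare the populations by testing, for example, $H_0: \mu_1 = \mu_2$ vs. $H_1: \mu_1 \ne \mu_2$, where $\mu_1$ is the mean of population 1 (e.g., the male group) and $\mu_2$ is the mean of population 2 (e.g., the female group).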

To compare two means, we need two samples, one from each population. The two samples may be dependent or independent, and the method for comparing the two means depends on which type we have. So let’s first define dependent and independent samples.

Dependent and Independent Samples

The two samples used to compare two population means can be independent or dependent.

Two samples are dependent or matched pairs if the sample values are matched, where the matching is based on some inherent relationship. For example,

- Height data of fathers and daughters, where the height of each father is matched with the height of his daughter. A father and his daughter share genes, lifestyle, and other factors that affect height, so taller fathers tend to have taller daughters: their heights are positively correlated. In the two data sets, the first height in the fathers’ sample is paired with the first height in the daughters’ sample, and likewise for the second pair, the third pair, and so on.

- Weights of subjects measured before and after some diet treatment, where the same subjects are measured both before and after the treatment. Again we have two samples, and they are dependent because they consist of the same subjects. Your weight today is of course related to your weight last week, right? In the two data sets, the first weight in the before-treatment sample is paired with the first weight in the after-treatment sample because both values belong to the same person, and likewise for the second pair, the third pair, and so on.

Dependent Samples (Matched Pairs)

From the two examples, we learn that subject 1 may refer to

- the first matched pair (dad-daughter)

- the same person with two measurements (before and after treatment)

If we have data on only one variable, in R the data are usually saved as a vector. When we have two samples, each sample can be saved as its own vector, or the two samples can be combined by columns into a data matrix like the table below. Each row is one matched pair (or one subject), and each column holds one sample. Note that since every subject in dependent samples is paired, the two samples have the same size, $n$.

| Subject | (Dad) Before | (Daughter) After |
|---|---|---|
| 1 | $x_{11}$ | $x_{21}$ |
| 2 | $x_{12}$ | $x_{22}$ |
| 3 | $x_{13}$ | $x_{23}$ |
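A minimal Python sketch of this layout, assuming the pandas library: each row of a DataFrame is one matched pair and each column is one sample. The values are the before/after blood-pressure readings from the hypertension example in Section 17.2, and the column names `before` and `after` follow that example.

```python
import pandas as pd

# One row per subject (matched pair), one column per sample.
# Values: before/after BP readings from the hypertension example in 17.2.
pair_data = pd.DataFrame({
    "before": [143, 153, 142, 139, 172, 176, 155, 149, 140, 169],
    "after":  [124, 129, 131, 145, 152, 150, 125, 142, 145, 160],
})

# Every subject contributes one value to each sample,
# so both columns necessarily have the same length n.
n_pairs = len(pair_data)
print(n_pairs)            # number of matched pairs
print(pair_data.head(3))  # first three subjects
```

Storing the samples side by side keeps the pairing explicit: row $i$ always holds the two measurements of subject $i$.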

Independent Samples

Two samples are independent if the sample values from one population are not related to the sample values from the other. For example,

- Salary samples of men and women, where the two samples are drawn independently from the male and female groups.

We may want to compare the mean salary levels of men and women. What we can do is collect two samples independently, one from each group’s own population. No subject in the male group is related to, or can be paired in any way with, a subject in the female group.

The independent samples can be summarized as in the table below. Notice that the two samples can have different sample sizes, $n_1$ and $n_2$.

| Subject of Group 1 (Male) | Measurement of Group 1 | Subject of Group 2 (Female) | Measurement of Group 2 |
|---|---|---|---|
| 1 | $x_{11}$ | 1 | $x_{21}$ |
| 2 | $x_{12}$ | 2 | $x_{22}$ |
| 3 | $x_{13}$ | 3 | $x_{23}$ |

Inference from Two Samples

The statistical methods are different for these two types of samples. The good news is that the concepts of confidence intervals and hypothesis testing for one population carry over to the two-population case.

Let’s quickly review the confidence interval and test statistic in the one-sample case.

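- Confidence interval: point estimate $\pm$ margin of error, e.g., $\bar{x} \pm t_{\alpha/2, n-1}\dfrac{s}{\sqrt{n}}$.

Margin of error = critical value $\times$ standard error of the point estimate.

The 6 testing steps are the same, and both the critical-value method and the $p$-value method apply.

- Test statistic: $\dfrac{\text{point estimate} - \text{hypothesized value}}{\text{standard error}}$, e.g., $t_{test} = \dfrac{\bar{x} - \mu_0}{s/\sqrt{n}}$.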

17.2 Inferences About Two Means: Dependent Samples (Matched Pairs)

In this section we talk about the inference methods for comparing two population means when the samples are dependent.

Hypothesis Testing for Dependent Samples

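Suppose we would like to learn whether the population means $\mu_1$ and $\mu_2$ are different, that is, to test $H_0: \mu_1 = \mu_2$ vs. $H_1: \mu_1 \ne \mu_2$.

The null hypothesis can also be written as $H_0: \mu_1 - \mu_2 = 0$. Letting $\mu_d = \mu_1 - \mu_2$ denote the population mean difference,

we can write the hypotheses for any type of test as $H_0: \mu_d = 0$ vs. $H_1: \mu_d \ne 0$ (two-tailed), $H_1: \mu_d < 0$ (left-tailed), or $H_1: \mu_d > 0$ (right-tailed).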

For dependent samples, we simply transform the two samples into one difference sample by taking the difference between paired measurements, and we use the difference sample to do inference about the mean difference $\mu_d$. The data table below illustrates the idea: we create a new difference sample $d_i = x_{1i} - x_{2i}$, $i = 1, 2, \dots, n$.

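| Subject | $x_1$ | $x_2$ | Difference $d = x_1 - x_2$ |
|---|---|---|---|
| 1 | $x_{11}$ | $x_{21}$ | $d_1$ |
| 2 | $x_{12}$ | $x_{22}$ | $d_2$ |
| 3 | $x_{13}$ | $x_{23}$ | $d_3$ |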

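The sample mean $\bar{d}$ and sample standard deviation $s_d$ of the differences then play the same roles that $\bar{x}$ and $s$ play in the one-sample case.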
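To see that the difference trick really turns a paired problem into a one-sample problem, here is a small sketch (assuming NumPy and SciPy are available) that runs SciPy’s one-sample test `ttest_1samp` on the differences and the paired test `ttest_rel` on the original two samples, using the hypertension data from the example later in this section. The two results should agree up to floating-point rounding.

```python
import numpy as np
from scipy.stats import ttest_rel, ttest_1samp

# Paired data: before/after BP readings (hypertension example in this section).
before = np.array([143, 153, 142, 139, 172, 176, 155, 149, 140, 169])
after = np.array([124, 129, 131, 145, 152, 150, 125, 142, 145, 160])

# Collapse the two dependent samples into one difference sample.
d = before - after

# One-sample t-test of H0: mu_d = 0 on the differences ...
one_sample = ttest_1samp(d, popmean=0)

# ... matches the paired t-test on the original two samples.
paired = ttest_rel(before, after)

print(one_sample.statistic, paired.statistic)
print(one_sample.pvalue, paired.pvalue)
```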

Inference for Paired Data

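Here are the requirements for inference on paired data. The sample differences $d_1, \dots, d_n$ come from a random sample of matched pairs, and either the differences come from a normally distributed population or the number of pairs is large ($n > 30$).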

With this, the inference for paired data follows the same procedure as the one-sample $t$-test, applied to the difference sample.

The critical value is either $t_{\alpha, n-1}$ (one-tailed test) or $t_{\alpha/2, n-1}$ (two-tailed test).

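| Paired $t$ | Test Statistic | Confidence Interval for $\mu_d = \mu_1 - \mu_2$ |
|---|---|---|
| $\sigma_d$ unknown | $t_{test} = \dfrac{\bar{d} - \mu_d}{s_d/\sqrt{n}}$ | $\bar{d} \pm t_{\alpha/2, n-1}\dfrac{s_d}{\sqrt{n}}$ |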

The test for matched pairs is called a paired $t$-test.
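The formulas in the table translate directly into code. Below is a hedged sketch: the helper name `paired_t` is hypothetical (not part of any library), and it uses SciPy’s `t.ppf` for the critical value $t_{\alpha/2, n-1}$. It returns the test statistic, the degrees of freedom, and the two-sided $(1 - \alpha)$ confidence interval for $\mu_d$.

```python
import numpy as np
from scipy.stats import t

def paired_t(x1, x2, mu_d=0.0, alpha=0.05):
    """Paired t statistic, df, and two-sided CI for mu_d = mu_1 - mu_2 (hypothetical helper)."""
    d = np.asarray(x1, dtype=float) - np.asarray(x2, dtype=float)
    n = d.size
    d_bar = d.mean()
    s_d = d.std(ddof=1)                # sample SD of the differences
    se = s_d / np.sqrt(n)              # estimated standard error of d_bar
    t_test = (d_bar - mu_d) / se       # test statistic
    df = n - 1
    t_crit = t.ppf(1 - alpha / 2, df)  # t_{alpha/2, n-1}
    ci = (d_bar - t_crit * se, d_bar + t_crit * se)
    return t_test, df, ci

# Hypertension data from the example below.
t_test, df, ci = paired_t(
    [143, 153, 142, 139, 172, 176, 155, 149, 140, 169],
    [124, 129, 131, 145, 152, 150, 125, 142, 145, 160],
)
print(round(t_test, 4), df, [round(v, 2) for v in ci])
```

With the hypertension data, this reproduces the test statistic and the 95% CI computed by hand in the example.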

Example

Consider a capsule used to reduce blood pressure (BP) for individuals with hypertension. A sample of 10 individuals with hypertension takes the medicine for 4 weeks. The BP measurements before and after taking the medicine are shown in the table below. Does the data provide sufficient evidence that the treatment is effective in reducing BP?

| Subject | Before | After | Difference (Before - After) |
|---|---|---|---|
| 1 | 143 | 124 | 19 |
| 2 | 153 | 129 | 24 |
| 3 | 142 | 131 | 11 |
| 4 | 139 | 145 | -6 |
| 5 | 172 | 152 | 20 |
| 6 | 176 | 150 | 26 |
| 7 | 155 | 125 | 30 |
| 8 | 149 | 142 | 7 |
| 9 | 140 | 145 | -5 |
| 10 | 169 | 160 | 9 |

Given the data, $n = 10$, $\bar{d} = 13.5$, and $s_d = 12.48$.

Step 1

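- If we let $\mu_1$ and $\mu_2$ be the mean BP before and after taking the medicine, respectively, and $\mu_d = \mu_1 - \mu_2$, then the claim that the treatment reduces BP is $\mu_d > 0$, so we test $H_0: \mu_d = 0$ vs. $H_1: \mu_d > 0$.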

Step 2

- $\alpha = 0.05$.

Step 3

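For the paired $t$-test, $t_{test} = \dfrac{\bar{d} - 0}{s_d/\sqrt{n}} = \dfrac{13.5}{12.48/\sqrt{10}} = 3.42$.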

Step 4-c

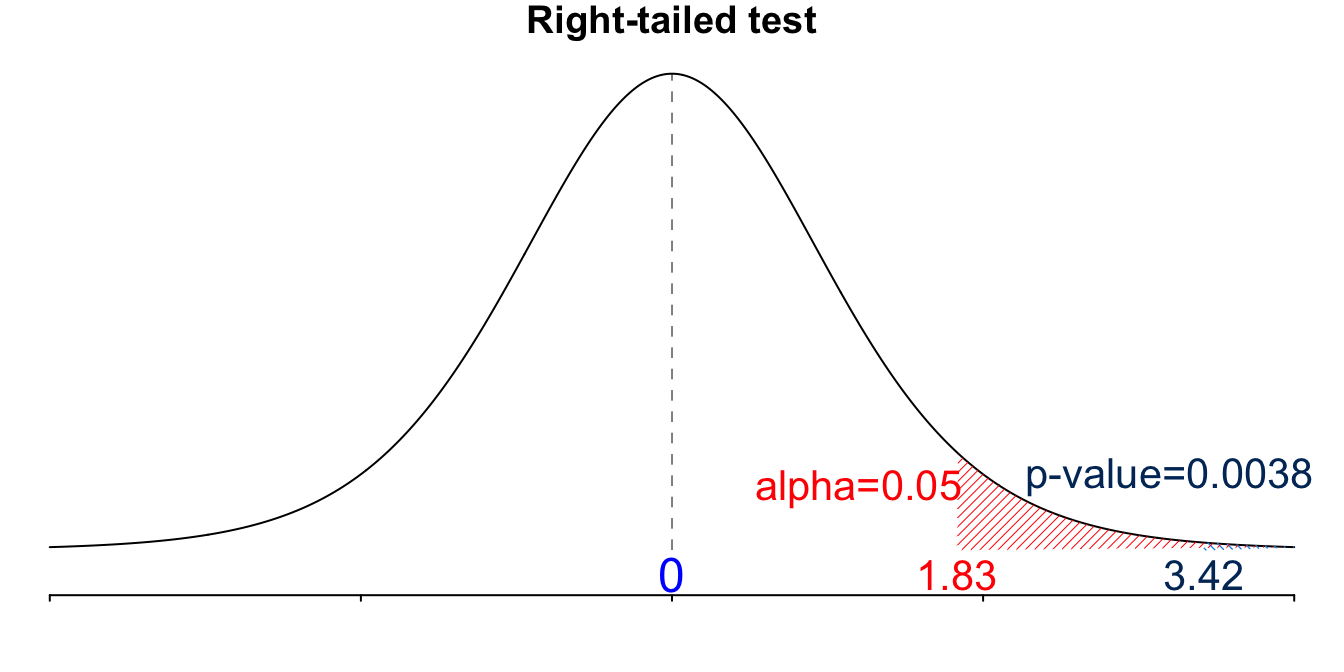

- This is a right-tailed test, so the critical value is $t_{\alpha, n-1} = t_{0.05, 9} = 1.833$.

Step 5-c

- We reject $H_0$ because $t_{test} = 3.42 > t_{0.05, 9} = 1.833$.

Step 6

- There is sufficient evidence to support the claim that the drug is effective in reducing blood pressure.

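The 95% CI for $\mu_d$ is $\bar{d} \pm t_{0.025, 9}\dfrac{s_d}{\sqrt{n}} = 13.5 \pm 2.262 \times \dfrac{12.48}{\sqrt{10}} = (4.57, 22.43)$.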

We are 95% confident that the mean difference in blood pressure is between 4.57 and 22.43. Since the interval does NOT include 0, it leads to the same conclusion as rejecting $H_0$.

Below is the same data as in the previous hypertension example. We load the R data pair_data.RDS into the R session using the load() function.

# Load the data set

load("../introstatsbook/data/pair_data.RDS")

pair_data

before after

1 143 124

2 153 129

3 142 131

4 139 145

5 172 152

6 176 150

7 155 125

8 149 142

9 140 145

10 169 160

## Create the difference data d

(d <- pair_data$before - pair_data$after)

[1] 19 24 11 -6 20 26 30 7 -5 9

## sample mean of d

(d_bar <- mean(d))

[1] 13.5

## sample standard deviation of d

(s_d <- sd(d))

[1] 12.48332

## t_test

(t_test <- d_bar / (s_d / sqrt(length(d))))

[1] 3.419823

## t_cv

qt(p = 0.95, df = length(d) - 1)

[1] 1.833113

## p_value

pt(q = t_test, df = length(d) - 1, lower.tail = FALSE)

[1] 0.003815036

Below is an example of how to calculate the confidence interval for the change in blood pressure.

## 95% confidence interval for the mean difference of the paired data

d_bar + c(-1, 1) * qt(p = 0.975, df = length(d) - 1) * (s_d / sqrt(length(d)))

[1] 4.569969 22.430031

We can see that performing these calculations in R leads us to the same conclusions we previously made. In fact, the R function t.test() does Student’s t-test for us, for either one sample or two samples. To do the two-sample paired $t$-test, we provide the two samples in the arguments x and y. The alternative argument is either “two.sided”, “less”, or “greater”. We have $H_1: \mu_d > 0$, so we use alternative = “greater”. The argument mu is the hypothesized difference in means under $H_0$, which is 0 here. The paired argument should be set to TRUE in order to do the paired $t$-test.

## t.test() function

t.test(x = pair_data$before, y = pair_data$after,

alternative = "greater", mu = 0, paired = TRUE)

Paired t-test

data: pair_data$before and pair_data$after

t = 3.4198, df = 9, p-value = 0.003815

alternative hypothesis: true mean difference is greater than 0

95 percent confidence interval:

6.263653 Inf

sample estimates:

mean difference

13.5

Note that we get the same test statistic and p-value, as well as the same test conclusion. However, the one-sided 95% confidence interval shown in the output is not what we want! Whether the test is one-sided or two-sided, we should always use the two-sided confidence interval. When you use the t.test() function to do a one-sided test as we do here, do not use the confidence interval in the output.

import numpy as np

from scipy.stats import ttest_rel, t, norm

import pandas as pd

Below is the same data as in the previous hypertension example. We load the CSV file pair_data.csv into the Python session using the pd.read_csv() function.

pair_data = pd.read_csv('./data/pair_data.csv')

pair_data

before after

0 143 124

1 153 129

2 142 131

3 139 145

4 172 152

5 176 150

6 155 125

7 149 142

8 140 145

9 169 160

# Create the difference data 'd'

d = pair_data['before'] - pair_data['after']

d

0 19

1 24

2 11

3 -6

4 20

5 26

6 30

7 7

8 -5

9 9

dtype: int64

# Sample mean of 'd'

d_bar = np.mean(d)

d_bar

13.5

# Sample standard deviation of 'd'

s_d = np.std(d, ddof=1)

s_d

12.48332220738267

# T-test statistic

t_test = d_bar / (s_d / np.sqrt(len(d)))

t_test

3.4198226804580676

# T critical value (one-tailed, 95% confidence level)

t.ppf(0.95, df=len(d)-1)

1.8331129326536335

# P-value

t.sf(t_test, df=len(d)-1)

0.0038150362846879134

Below is an example of how to calculate the confidence interval for the change in blood pressure.

# 95% confidence interval for the mean difference

d_bar + pd.Series([-1, 1]) * t.ppf(0.975, df=len(d)-1) * (s_d / np.sqrt(len(d)))

0 4.569969

1 22.430031

dtype: float64

We can see that performing these calculations in Python leads us to the same conclusions we previously made.

In fact, the Python function ttest_rel() from the scipy.stats module does the two-sample paired $t$-test for us. The name rel stands for “related” because the function performs the t-test on TWO RELATED samples. To do the paired test, we provide the two samples in the arguments a and b. The alternative argument is either “two-sided”, “less”, or “greater”. We have $H_1: \mu_d > 0$, so we use alternative='greater'.

# T-test using scipy's built-in function

ttest_rel_res = ttest_rel(a=pair_data['before'], b=pair_data['after'],

alternative='greater')

ttest_rel_res.statistic

3.419822680458067

ttest_rel_res.df

9

ttest_rel_res.pvalue

0.0038150362846879134

ttest_rel_res.confidence_interval

<bound method TtestResult.confidence_interval of TtestResult(statistic=3.419822680458067, pvalue=0.0038150362846879134, df=9)>

Note that we get the same test statistic and p-value, as well as the same test conclusion. Because confidence_interval is a method, accessing it without parentheses only displays the bound method, not an interval. Calling it, as in ttest_rel_res.confidence_interval(), would return a one-sided interval here because alternative='greater', so, as with the R output, it is not the two-sided interval we want.
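If we do want SciPy to produce the two-sided interval, one option is sketched below. It assumes a recent SciPy in which the result of `ttest_rel()` has a `confidence_interval()` method (the output above shows the method exists): rerun the test with `alternative='two-sided'` and call the method with `confidence_level=0.95`.

```python
import pandas as pd
from scipy.stats import ttest_rel

# Same hypertension data as above.
pair_data = pd.DataFrame({
    "before": [143, 153, 142, 139, 172, 176, 155, 149, 140, 169],
    "after":  [124, 129, 131, 145, 152, 150, 125, 142, 145, 160],
})

# A two-sided test gives a two-sided interval for mu_d = mu_before - mu_after.
res_two_sided = ttest_rel(pair_data["before"], pair_data["after"],
                          alternative="two-sided")

# confidence_interval is a method, so it must be called.
ci = res_two_sided.confidence_interval(confidence_level=0.95)
print(ci.low, ci.high)
```

The interval should match the hand-computed 95% CI of about (4.57, 22.43).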

17.3 Inference About Two Means: Independent Samples

Compare Population Means: Independent Samples

Frequently we would like to compare two different groups. For example,

- Whether stem cells can improve heart function. Here the two samples are patients with the stem cell treatment and patients without it.

- The relationship between pregnant women’s smoking habits and newborns’ weights. Here the two samples are women who smoke and women who don’t.

- Whether one version of an exam is harder than another version. In this case, the two samples are students taking exam A and students taking exam B.

In these examples, the two samples are independent. In this section, we learn how to compare their population means, for example, whether the mean score on exam A is higher than the mean score on exam B.

Testing for Independent Samples

When we deal with two independent samples, we assume they are drawn from two independent populations, which in this chapter are assumed to be normally distributed. We are interested in whether the two population means are equal or which one is greater. But to do the inference, we need to take care of their standard deviations, $\sigma_1$ and $\sigma_2$, because the method depends on whether $\sigma_1 = \sigma_2$. We first consider the case $\sigma_1 \ne \sigma_2$.

The requirements of testing for independent samples with $\sigma_1 \ne \sigma_2$ are:

- The two samples are independent.

- Both samples are random samples.

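- The two populations are normally distributed, or both samples are large ($n_1 > 30$ and $n_2 > 30$).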

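We are interested in whether the two population means, $\mu_1$ and $\mu_2$, are equal ($H_0: \mu_1 = \mu_2$) or differ ($H_1: \mu_1 > \mu_2$, $H_1: \mu_1 < \mu_2$, or $H_1: \mu_1 \ne \mu_2$).

This is equivalent to testing whether their difference is zero, or $H_0: \mu_1 - \mu_2 = 0$ vs. $H_1: \mu_1 - \mu_2 > 0$, $H_1: \mu_1 - \mu_2 < 0$, or $H_1: \mu_1 - \mu_2 \ne 0$.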

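Sampling Distribution of $\bar{X}_1 - \bar{X}_2$

Again, to do the inference we start with the associated sampling distribution. If the two samples are from independent normally distributed populations, or $n_1 > 30$ and $n_2 > 30$, then (at least approximately)

$$\bar{X}_1 - \bar{X}_2 \sim N\left(\mu_1 - \mu_2, \; \frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}\right).$$

Because the two samples are independent, the variance of $\bar{X}_1 - \bar{X}_2$ is the sum of the variances of $\bar{X}_1$ and $\bar{X}_2$, namely $\sigma_1^2/n_1 + \sigma_2^2/n_2$.

Therefore by standardization, we have a standard normal variable

$$Z = \frac{(\bar{X}_1 - \bar{X}_2) - (\mu_1 - \mu_2)}{\sqrt{\dfrac{\sigma_1^2}{n_1} + \dfrac{\sigma_2^2}{n_2}}} \sim N(0, 1).$$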
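A quick Monte Carlo check of this sampling distribution, as a sketch: the population parameters below are hypothetical values chosen only for illustration. The simulated mean and variance of $\bar{X}_1 - \bar{X}_2$ should be close to $\mu_1 - \mu_2$ and $\sigma_1^2/n_1 + \sigma_2^2/n_2$.

```python
import numpy as np

rng = np.random.default_rng(2024)  # fixed seed for reproducibility

# Hypothetical population parameters, for illustration only.
mu1, sigma1, n1 = 10.0, 2.0, 15
mu2, sigma2, n2 = 8.0, 3.0, 20
reps = 100_000

# Draw many pairs of independent normal samples and record xbar1 - xbar2.
xbar1 = rng.normal(mu1, sigma1, size=(reps, n1)).mean(axis=1)
xbar2 = rng.normal(mu2, sigma2, size=(reps, n2)).mean(axis=1)
diff = xbar1 - xbar2

theory_mean = mu1 - mu2
theory_var = sigma1**2 / n1 + sigma2**2 / n2

print(diff.mean(), theory_mean)
print(diff.var(), theory_var)
```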

Test Statistic for Independent Samples

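With $\mu_1 - \mu_2$ set to its hypothesized value under $H_0$, the test statistic is

$$z_{test} = \frac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{\sqrt{\dfrac{\sigma_1^2}{n_1} + \dfrac{\sigma_2^2}{n_2}}}.$$

Often, we care about whether the two means are equal, so $\mu_1 - \mu_2 = 0$ under $H_0$ and

$$z_{test} = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{\sigma_1^2}{n_1} + \dfrac{\sigma_2^2}{n_2}}}.$$

If $\sigma_1$ and $\sigma_2$ are known,

then we are pretty much done. We find the critical value ($z_{\alpha}$ or $z_{\alpha/2}$) or the $p$-value from the standard normal distribution, just as in the one-sample $z$-test.

What if $\sigma_1$ and $\sigma_2$ are unknown?

We simply replace the unknown $\sigma_1$ and $\sigma_2$ with the sample standard deviations $s_1$ and $s_2$. The resulting statistic

$$t_{test} = \frac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}}$$

approximately follows a $t$ distribution with degrees of freedom

$$df = \frac{(A + B)^2}{\dfrac{A^2}{n_1 - 1} + \dfrac{B^2}{n_2 - 1}}, \quad A = \frac{s_1^2}{n_1}, \quad B = \frac{s_2^2}{n_2}.$$

The degrees of freedom formula looks intimidating, but don’t worry: you don’t need to memorize it, and we let statistical software take care of it. To be conservative (tend to reject $H_0$ less often), we round the degrees of freedom down to the nearest integer when it is not an integer.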
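Although software handles it for us, the degrees-of-freedom formula is short to code. This sketch (the helper name `welch_df` is hypothetical) uses $A = s_1^2/n_1$ and $B = s_2^2/n_2$, the same quantities as the R and Python code in the tennis-racket example below, and then rounds down with `math.floor()`.

```python
import math

def welch_df(s1, n1, s2, n2):
    """Degrees of freedom for two independent samples with unequal variances (hypothetical helper)."""
    A = s1**2 / n1
    B = s2**2 / n2
    return (A + B)**2 / (A**2 / (n1 - 1) + B**2 / (n2 - 1))

# Tennis-racket summary statistics from the example below.
df_exact = welch_df(8.6, 33, 17.4, 12)
df_conservative = math.floor(df_exact)  # round down to be conservative
print(df_exact, df_conservative)
```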

Inference About Independent Samples

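Below is a table that summarizes ways to make inferences about independent samples when $\sigma_1 \ne \sigma_2$.

| $\sigma_1 \ne \sigma_2$ | Test Statistic | Confidence Interval for $\mu_1 - \mu_2$ |
|---|---|---|
| known | $z_{test} = \dfrac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{\sqrt{\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}}}$ | $(\bar{x}_1 - \bar{x}_2) \pm z_{\alpha/2}\sqrt{\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}}$ |
| unknown | $t_{test} = \dfrac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{\sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}}$ | $(\bar{x}_1 - \bar{x}_2) \pm t_{\alpha/2, df}\sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}$ |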

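For unknown $\sigma_1$ and $\sigma_2$, $df$ comes from the degrees-of-freedom formula above, rounded down to the nearest integer.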
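Putting the unknown-$\sigma$ row of the table into code, here is a hedged sketch of the test statistic and the two-sided CI computed from summary statistics. The helper `welch_t_and_ci` is hypothetical; it follows this chapter’s convention of rounding $df$ down before looking up $t_{\alpha/2, df}$ with SciPy’s `t.ppf`, and the inputs are the tennis-racket summary statistics from the example that follows.

```python
import math
from scipy.stats import t

def welch_t_and_ci(x1_bar, s1, n1, x2_bar, s2, n2, alpha=0.05, delta0=0.0):
    """t statistic, floored df, and two-sided CI for mu1 - mu2 (hypothetical helper)."""
    A, B = s1**2 / n1, s2**2 / n2
    se = math.sqrt(A + B)  # estimated SE of xbar1 - xbar2
    df = math.floor((A + B)**2 / (A**2 / (n1 - 1) + B**2 / (n2 - 1)))
    diff = x1_bar - x2_bar
    t_test = (diff - delta0) / se
    t_crit = t.ppf(1 - alpha / 2, df)  # t_{alpha/2, df}
    return t_test, df, (diff - t_crit * se, diff + t_crit * se)

# Over-sized vs. conventional racket summary statistics.
t_test, df, ci = welch_t_and_ci(25.2, 8.6, 33, 33.9, 17.4, 12)
print(t_test, df, ci)
```

It should reproduce $t_{test} \approx -1.66$, $df = 13$, and a 95% CI of about $(-20.02, 2.62)$.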

Example: Two-Sample t-Test

Does an over-sized tennis racket exert less stress/force on the elbow? The relevant sample statistics are shown below.

- Over-sized: $n_1 = 33$, $\bar{x}_1 = 25.2$, $s_1 = 8.6$

- Conventional: $n_2 = 12$, $\bar{x}_2 = 33.9$, $s_2 = 17.4$

The two populations are known to be nearly normal, and the large difference between the sample standard deviations suggests $\sigma_1 \ne \sigma_2$.

Step 1

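- Let $\mu_1$ and $\mu_2$ be the mean force on the elbow for the over-sized and conventional rackets, respectively. The claim is $\mu_1 < \mu_2$, so we test $H_0: \mu_1 = \mu_2$ vs. $H_1: \mu_1 < \mu_2$.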

Step 2

- $\alpha = 0.05$.

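Step 3

- $A = \dfrac{s_1^2}{n_1} = \dfrac{8.6^2}{33} = 2.241$ and $B = \dfrac{s_2^2}{n_2} = \dfrac{17.4^2}{12} = 25.23$

- $df = \dfrac{(A + B)^2}{\dfrac{A^2}{n_1 - 1} + \dfrac{B^2}{n_2 - 1}} = \dfrac{(2.241 + 25.23)^2}{\dfrac{2.241^2}{32} + \dfrac{25.23^2}{11}} = 13.01$

- $t_{test} = \dfrac{\bar{x}_1 - \bar{x}_2}{\sqrt{A + B}} = \dfrac{25.2 - 33.9}{\sqrt{2.241 + 25.23}} = -1.66$

If the computed value of $df$ is not an integer, we round it down to the nearest integer, so $df = 13$.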

Step 4-c

- Because it is a left-tailed test, the critical value is $-t_{0.05, 13} = -1.771$.

Step 5-c

- We reject $H_0$ if $t_{test} < -t_{0.05, 13}$. Since $t_{test} = -1.66 > -1.771$, we do not reject $H_0$.

Step 6

- There is insufficient evidence to support the claim that the oversized racket delivers less stress to the elbow.

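The 95% CI for $\mu_1 - \mu_2$ is $(\bar{x}_1 - \bar{x}_2) \pm t_{0.025, 13}\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}} = -8.7 \pm 2.160 \times 5.241 = (-20.02, 2.62)$.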

We are 95% confident that the difference in the mean forces is between -20.02 and 2.62. Since the interval includes 0, it leads to the same conclusion as failing to reject $H_0$.
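As a cross-check, SciPy can run the same test directly from the summary statistics with `scipy.stats.ttest_ind_from_stats()` and `equal_var=False`. One assumption to flag: SciPy uses the unrounded degrees of freedom (about 13.006) rather than rounding down to 13, so its $p$-value can differ from the hand calculation in the last few digits.

```python
from scipy.stats import ttest_ind_from_stats

# std1/std2 are the sample standard deviations s1 and s2.
res = ttest_ind_from_stats(
    mean1=25.2, std1=8.6, nobs1=33,   # over-sized racket
    mean2=33.9, std2=17.4, nobs2=12,  # conventional racket
    equal_var=False,                  # sigma1 != sigma2
    alternative="less",               # H1: mu1 < mu2
)
print(res.statistic, res.pvalue)
```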

Two-Sample t-Test in R

## Prepare needed variables

n1 = 33; x1_bar = 25.2; s1 = 8.6

n2 = 12; x2_bar = 33.9; s2 = 17.4

A <- s1^2 / n1; B <- s2^2 / n2

df <- (A + B)^2 / (A^2/(n1-1) + B^2/(n2-1))

## Use floor() function to round down to the nearest integer.

(df <- floor(df))

[1] 13

## t_test

(t_test <- (x1_bar - x2_bar) / sqrt(s1^2/n1 + s2^2/n2))

[1] -1.659894

## t_cv

qt(p = 0.05, df = df)

[1] -1.770933

## p_value

pt(q = t_test, df = df)

[1] 0.06042575

# Prepare needed variables

n1 = 33; x1_bar = 25.2; s1 = 8.6

n2 = 12; x2_bar = 33.9; s2 = 17.4

A = s1**2 / n1; B = s2**2 / n2

df = (A + B)**2 / (A**2/(n1-1) + B**2/(n2-1))

# Round down to the nearest integer

df = np.floor(df)

df

13.0

# T-test statistic

t_test = (x1_bar - x2_bar) / np.sqrt(s1**2/n1 + s2**2/n2)

# T critical value

t.ppf(0.05, df=df)

-1.7709333959867992

# P-value

t.cdf(t_test, df=df)

0.060425745501011804

Testing for Independent Samples ($\sigma_1 = \sigma_2$)

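We’ve done the case $\sigma_1 \ne \sigma_2$. Now we consider the case in which the two populations share a common standard deviation, $\sigma_1 = \sigma_2 = \sigma$.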

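Sampling Distribution of $\bar{X}_1 - \bar{X}_2$

Again, we start with the sampling distribution of $\bar{X}_1 - \bar{X}_2$. With $\sigma_1 = \sigma_2 = \sigma$,

$$\bar{X}_1 - \bar{X}_2 \sim N\left(\mu_1 - \mu_2, \; \sigma^2\left(\frac{1}{n_1} + \frac{1}{n_2}\right)\right).$$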

Test Statistic for Independent Samples

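Similar to the case $\sigma_1 \ne \sigma_2$, if the common $\sigma$ is known, the test statistic is

$$z_{test} = \frac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{\sigma\sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}},$$

which follows $N(0, 1)$ under $H_0$.

If $\sigma$ is unknown, we need to estimate it from the data.

The idea is to use the so-called pooled sample variance to estimate the common population variance, $\sigma^2$:

$$s_p^2 = \frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2}.$$

As $s_p^2$ is a weighted average of $s_1^2$ and $s_2^2$, with weights given by their degrees of freedom, it uses the information from both samples.

If we replace $\sigma$ with $s_p$, the test statistic

$$t_{test} = \frac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{s_p\sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}}$$

follows a $t$ distribution with $n_1 + n_2 - 2$ degrees of freedom under $H_0$.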

Inference from Independent Samples

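Below is a table that summarizes ways to make inferences about independent samples when $\sigma_1 = \sigma_2 = \sigma$.

| $\sigma_1 = \sigma_2 = \sigma$ | Test Statistic | Confidence Interval for $\mu_1 - \mu_2$ |
|---|---|---|
| known | $z_{test} = \dfrac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{\sigma\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}$ | $(\bar{x}_1 - \bar{x}_2) \pm z_{\alpha/2}\,\sigma\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}$ |
| unknown | $t_{test} = \dfrac{(\bar{x}_1 - \bar{x}_2) - (\mu_1 - \mu_2)}{s_p\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}$ | $(\bar{x}_1 - \bar{x}_2) \pm t_{\alpha/2, n_1 + n_2 - 2}\,s_p\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}$ |

Use $df = n_1 + n_2 - 2$ when $\sigma$ is unknown.

The test from two independent samples with $\sigma_1 = \sigma_2$ unknown is called the two-sample pooled $t$-test.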
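A sketch of the pooled procedure from summary statistics. The helper `pooled_t` is hypothetical; it computes $s_p$, $df = n_1 + n_2 - 2$, the test statistic, and the two-sided CI in the table (using SciPy’s `t.ppf` for $t_{\alpha/2, df}$), and the inputs are the weight-loss summary statistics from the example that follows.

```python
import math
from scipy.stats import t

def pooled_t(x1_bar, s1, n1, x2_bar, s2, n2, alpha=0.05, delta0=0.0):
    """Pooled sd, df, t statistic, and two-sided CI for mu1 - mu2 (hypothetical helper)."""
    df = n1 + n2 - 2
    sp = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df)  # pooled SD
    se = sp * math.sqrt(1 / n1 + 1 / n2)
    diff = x1_bar - x2_bar
    t_test = (diff - delta0) / se
    t_crit = t.ppf(1 - alpha / 2, df)  # t_{alpha/2, n1+n2-2}
    return sp, df, t_test, (diff - t_crit * se, diff + t_crit * se)

# Control vs. experimental weight-loss summary statistics.
sp, df, t_test, ci = pooled_t(2.1, 0.5, 10, 4.2, 0.7, 10)
print(sp, df, t_test, ci)
```

It should give $s_p \approx 0.608$, $df = 18$, $t_{test} \approx -7.72$, and a 95% CI of about $(-2.672, -1.528)$.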

Example: Weight Loss

A study was conducted to see the effectiveness of a weight loss program. Two groups (Control and Experimental) of 10 subjects were selected. The two populations are normally distributed and have the same standard deviation.

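The data on weight loss were collected at the end of six months, and the relevant sample statistics are:

- Control: $n_1 = 10$, $\bar{x}_1 = 2.1$, $s_1 = 0.5$

- Experimental: $n_2 = 10$, $\bar{x}_2 = 4.2$, $s_2 = 0.7$

Is there sufficient evidence at $\alpha = 0.05$ to conclude that the weight loss program is effective?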

Step 1

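- Let $\mu_1$ and $\mu_2$ be the mean weight loss of the control and experimental populations, respectively. The program is effective if $\mu_1 < \mu_2$, so we test $H_0: \mu_1 = \mu_2$ vs. $H_1: \mu_1 < \mu_2$.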

Step 2

- $\alpha = 0.05$.

Step 3

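- From the question we know $\sigma_1 = \sigma_2$ (unknown), so we use the pooled $t$-test: $s_p = \sqrt{\dfrac{(10 - 1)(0.5^2) + (10 - 1)(0.7^2)}{10 + 10 - 2}} = 0.608$ and $t_{test} = \dfrac{2.1 - 4.2}{0.608\sqrt{\frac{1}{10} + \frac{1}{10}}} = -7.72$.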

Step 4-c

- Because it is a left-tailed test, the critical value is $-t_{0.05, 18} = -1.734$.

Step 5-c

- We reject $H_0$ because $t_{test} = -7.72 < -t_{0.05, 18} = -1.734$.

Step 4-p

- The $p$-value is $P(T_{18} < -7.72) \approx 2.03 \times 10^{-7}$.

Step 5-p

- We reject $H_0$ because the $p$-value is less than $\alpha = 0.05$.

Step 6

- There is sufficient evidence to support the claim that the weight loss program is effective.

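The 95% CI for $\mu_1 - \mu_2$ is $(\bar{x}_1 - \bar{x}_2) \pm t_{0.025, 18}\,s_p\sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}} = -2.1 \pm 0.572 = (-2.672, -1.528)$.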

We are 95% confident that the difference in the mean weight loss is between -2.672 and -1.528. Since the interval does not include 0, it leads to the same conclusion as rejecting $H_0$.
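The pooled test can also be cross-checked with SciPy’s `ttest_ind_from_stats()`, this time with `equal_var=True` (pooled variance) and `alternative='less'` for the left-tailed $H_1: \mu_1 < \mu_2$. This is a sketch assuming SciPy 1.6 or later, where the `alternative` argument is available.

```python
from scipy.stats import ttest_ind_from_stats

res = ttest_ind_from_stats(
    mean1=2.1, std1=0.5, nobs1=10,  # control
    mean2=4.2, std2=0.7, nobs2=10,  # experimental
    equal_var=True,                 # sigma1 = sigma2: pooled t-test
    alternative="less",             # H1: mu1 < mu2
)
print(res.statistic, res.pvalue)
```

The statistic and $p$-value should match the R and Python calculations that follow, because with `equal_var=True` SciPy also uses $df = n_1 + n_2 - 2 = 18$.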

Two-Sample Pooled t-Test

## Prepare values

n1 = 10; x1_bar = 2.1; s1 = 0.5

n2 = 10; x2_bar = 4.2; s2 = 0.7

## pooled sample standard deviation

sp <- sqrt(((n1 - 1) * s1 ^ 2 + (n2 - 1) * s2 ^ 2) / (n1 + n2 - 2))

## degrees of freedom

df <- n1 + n2 - 2

## t_test

(t_test <- (x1_bar - x2_bar) / (sp * sqrt(1 / n1 + 1 / n2)))

[1] -7.719754

## t_cv

qt(p = 0.05, df = df)

[1] -1.734064

## p_value

pt(q = t_test, df = df)

[1] 2.028505e-07

# Prepare values

n1 = 10; x1_bar = 2.1; s1 = 0.5

n2 = 10; x2_bar = 4.2; s2 = 0.7

# Pooled sample standard deviation

sp = np.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))

# Degrees of freedom

df = n1 + n2 - 2

# T-test statistic

t_test = (x1_bar - x2_bar) / (sp * np.sqrt(1/n1 + 1/n2))

t_test

-7.719753531984983

# T critical value

t.ppf(0.05, df=df)

-1.734063606617536

# P-value

t.cdf(t_test, df=df)

2.0285052120014635e-07

17.3.1 Sample size formula

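How large should both sample sizes be to detect a given difference between the two population means?

Suppose we would like to do a two-sample test (independent samples and equal variance $\sigma_1^2 = \sigma_2^2 = \sigma^2$) at significance level $\alpha$ with power $1 - \beta$ to detect a difference $\delta = |\mu_1 - \mu_2|$. Using equal sample sizes $n_1 = n_2 = n$, each group needs (rounding up to the next integer)

One-tailed test (either left-tailed or right-tailed): $n = \dfrac{2\sigma^2(z_{\alpha} + z_{\beta})^2}{\delta^2}$

Two-tailed test: $n = \dfrac{2\sigma^2(z_{\alpha/2} + z_{\beta})^2}{\delta^2}$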
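A minimal sketch of these formulas, assuming the normal-approximation version with a known common $\sigma$: per group, $n = 2\sigma^2(z_{\alpha} + z_{\beta})^2/\delta^2$ for a one-tailed test, with $z_{\alpha/2}$ in place of $z_{\alpha}$ for a two-tailed test, where $\delta = |\mu_1 - \mu_2|$ is the difference to detect and $1 - \beta$ is the power. The helper `n_per_group` and the inputs ($\sigma = 1$, $\delta = 0.5$, power 0.80) are hypothetical.

```python
import math
from scipy.stats import norm

def n_per_group(sigma, delta, alpha=0.05, power=0.80, two_tailed=True):
    """Per-group n (n1 = n2) for a two-sample test with common sigma (hypothetical helper)."""
    # Upper-tail critical value: z_{alpha/2} (two-tailed) or z_alpha (one-tailed).
    z_alpha = norm.ppf(1 - alpha / 2) if two_tailed else norm.ppf(1 - alpha)
    z_beta = norm.ppf(power)  # z_beta, with beta = 1 - power
    n = 2 * sigma**2 * (z_alpha + z_beta)**2 / delta**2
    return math.ceil(n)       # always round up

n_two = n_per_group(sigma=1.0, delta=0.5, two_tailed=True)
n_one = n_per_group(sigma=1.0, delta=0.5, two_tailed=False)
print(n_two, n_one)
```

Rounding up (rather than to the nearest integer) guarantees that the power is at least the target.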

17.4 Exercises

- A study was conducted to assess the effects that occur when children are exposed to cocaine before birth. Children were tested at age 4 for object assembly skill, which was described as “a task requiring visual-spatial skills related to mathematical competence.” The 187 children born to cocaine users had a mean score of 7.1 and a standard deviation of 2.5. The 183 children not exposed to cocaine had a mean score of 8.4 and a standard deviation of 2.5.

- With

- Test the claim in part (a) by using a confidence interval.

- With

- Listed below are heights (in.) of mothers and their first daughters.

- Use

- Test the claim in part (a) by using a confidence interval.

- Use

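| Pair | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Height of Mother (in.) | 66 | 62 | 62 | 63.5 | 67 | 64 | 69 | 65 | 62.5 | 67 |
| Height of Daughter (in.) | 67.5 | 60 | 63.5 | 66.5 | 68 | 65.5 | 69 | 68 | 65.5 | 64 |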